OpenAI's Whisper Is So Good It Can Transcribe Any Song's Lyrics

Though a quick Google search confirms that the first attempts at having computers identify and extract spoken language date back to the early 1950s, voice recognition technology has only recently been made accessible to the general public. Like most of my friends, I own a small Alexa device at home. And like them, I never use it.

I was nonetheless very excited when I came across OpenAI’s Whisper on Hacker News a little while ago. If the name OpenAI sounds vaguely familiar to you, that’s most likely because these guys are behind ChatGPT. Lex Fridman recently interviewed Sam Altman, the company’s CEO, and their conversation is really interesting if you’re into state-of-the-art AI technology.

Whisper is a whole separate project though, best described on its official GitHub page as follows:

“Whisper is a general-purpose speech recognition model. It is trained on a large dataset of diverse audio and is also a multitasking model that can perform multilingual speech recognition, speech translation, and language identification.”

The whole page is really well documented, with tons of useful examples and illustrations. If you’re curious and want to know more, I highly recommend taking a closer look at the following resources:

- The official blog entry on OpenAI’s website

- The research paper for the model behind Whisper, entitled Robust Speech Recognition via Large-Scale Weak Supervision and submitted in December 2022

That being said, what we’re going to do today is actually much simpler. We’ll try and see how we can identify and extract human speech from a couple of videos that we think might be a challenge for a speech recognition model. Please note that this article is going to be slightly shorter than most of the content that I have recently published on this website.

The Sound of Music

Though this might sound obvious, the first step that we need to take is to find some audio files. Actually, let’s be a bit more specific: if we want to see how accurately Whisper is able to identify and transcribe different types of human voices, we should take a three-step approach and gradually increase the complexity of the audio files that we’ll be feeding to our model:

- We should probably start with a very clear and distinct human voice, without any background noise or anything that could make speech recognition more difficult than it should be. I’m thinking of an interview, a speech, or something along those lines.

- Then a voice again, but this time with some sound effects in the background.

- Finally, a song, with multiple instruments in the background, etc.

That looks promising. But you might be wondering, where and how are we going to get these audio files?

Well, we could record ourselves while singing, or rip a couple of songs off some old laser discs, etc. Or we could go the dodgy way. By dodgy I mean, why don’t we just use the world’s largest video sharing platform and extract the audio track from some of its videos?

To do this, we’ll be using PyTube, a set of tools for playing around with YouTube videos that’s been around since 2014. This library is of course completely non-official, and it goes without saying that we shouldn’t be downloading content from YouTube or from any video or music streaming platform in general. I will be deleting the files just after downloading them, and I do not support or encourage piracy in any shape or form.

With this out of the way, let’s see how we can start extracting some content. Our first video is another Lex Fridman interview, this time with the legendary Brian Kernighan:

We can write a simple function that captures the audio stream from the video and returns an error if anything goes wrong (which has happened a couple of times as I was writing this article):

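from pytube import YouTube

def getVideo(video):
    # Keep every PyTube call inside the try block: creating the YouTube
    # object and listing its streams can fail too, not just the download
    try:
        yt = YouTube(video)
        streams = yt.streams.filter(
            only_audio=True,
            abr="128kbps"
        )
        # first() returns None when no stream matches the filter, in which
        # case the resulting AttributeError is printed below
        streams.first().download()
    except Exception as e:
        print(e)

getVideo("https://www.youtube.com/watch?v=Djcy40bTgP4")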

Everything worked according to our plan, and PyTube has successfully saved the audio track as an mp4 file on our local drive!

Awesome. All we need to do now is find two more videos that match the selection criteria that we defined earlier, reuse our getVideo() function, and try to see whether the model has done a great job or not. Rinse, repeat.

Whisper 101

So how do we get Whisper to work? As in, how do we feed an audio file to our recognition model and get it to identify the presence of human speech before returning some text for us? Let’s install the library first:

pip install -U openai-whisper

The folks at OpenAI are incredibly talented, and working with any of their products is in general really simple. Whisper’s default parameters seem to do a fairly decent job, so we’re going to stick to the base model. Though it might not perform as well as its medium counterpart, it only requires approximately 1GB of VRAM, which is perfect for working from Google Colab.
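If you want to weigh model size against available memory, here is a minimal sketch. The figures in the table are rough VRAM requirements as I remember them from the project's README, so treat them as assumptions and double-check them there. The pickModel() helper is a hypothetical name, not part of Whisper.

```python
# Approximate VRAM needs in GB per model size. These numbers are assumed
# from the Whisper README and may have changed since; verify before use.
APPROX_VRAM_GB = {"tiny": 1, "base": 1, "small": 2, "medium": 5, "large": 10}

def pickModel(available_gb):
    """Return the last (largest) model in the table that fits, or None."""
    fitting = [name for name, gb in APPROX_VRAM_GB.items() if gb <= available_gb]
    return fitting[-1] if fitting else None

print(pickModel(4))
```

The returned name could then be passed to whisper.load_model() in place of the hardcoded "base".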

import whisper
from pprint import pprint

def getAudioToText(video):
    # Load the base model, then transcribe the audio file at the given path
    model = whisper.load_model("base")
    result = model.transcribe(video)
    return result

text = getAudioToText("Learning New Programming Languages Brian Kernighan and Lex Fridman.mp4")

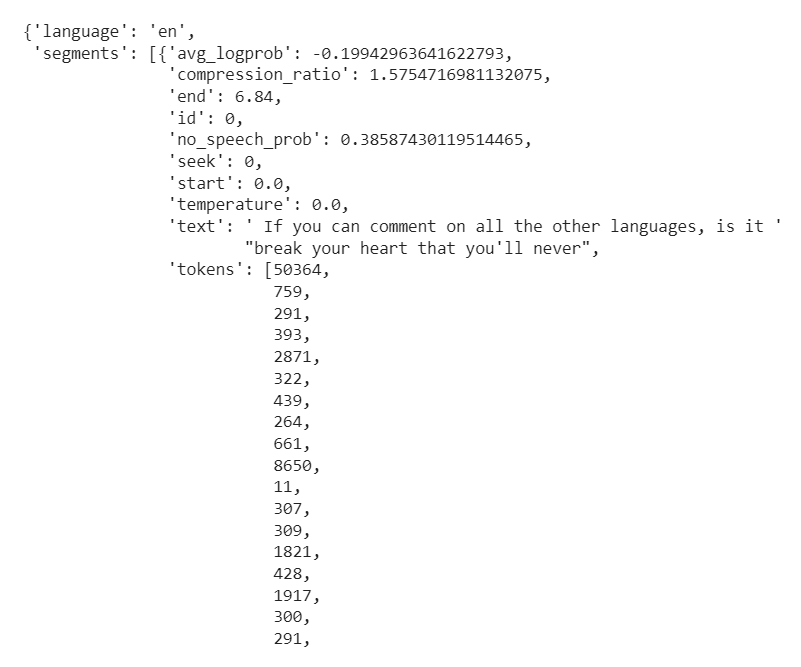

Whisper’s .transcribe() method returns a pretty lengthy dictionary, which we can print in a more readable form using the pprint module:

from pprint import pprint

pprint(text)

There’s arguably a lot of information in this dictionary, including the transcript of our audio file. But before we evaluate whether or not the model has done a good job at catching Kernighan’s thoughts on programming languages, let’s see what other useful information can be found within our aforementioned text dictionary:

- text["language"]: the main language of the human voice(s) that the model has identified

- text["segments"]: when processing the audio files it gets fed, Whisper breaks down the audio stream into a list of segments. Each segment has a precise start and end timestamp, a number of identified tokens, and a bunch of other stuff

- text["segments"][i]["no_speech_prob"]: for the segment at index i, Whisper's estimate of how likely it is that the segment contains no human voice. This is going to be particularly useful when we feed some songs to our model

- text["text"]: the full transcript for the audio file
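To make the shape of that dictionary a bit more concrete, here is a small sketch that walks through the segments and prints one line per segment. The sample dictionary is hand-written with made-up values, and only its key names mirror what Whisper returns. The formatTimestamp() and describeSegments() helpers are hypothetical names introduced for this illustration.

```python
# Hand-written stand-in for the dictionary returned by model.transcribe().
# The values are made up; only the key names mirror Whisper's output.
sample = {
    "language": "en",
    "text": " Hello there. How are you?",
    "segments": [
        {"start": 0.0, "end": 2.5, "text": " Hello there.", "no_speech_prob": 0.02},
        {"start": 2.5, "end": 65.0, "text": " How are you?", "no_speech_prob": 0.10},
    ],
}

def formatTimestamp(seconds):
    """Turn a number of seconds into an MM:SS string (fractions are dropped)."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"

def describeSegments(result):
    """Return one line per segment: time range, no-speech probability, text."""
    lines = []
    for seg in result["segments"]:
        lines.append(
            f"[{formatTimestamp(seg['start'])} - {formatTimestamp(seg['end'])}] "
            f"({seg['no_speech_prob']:.2f}) {seg['text'].strip()}"
        )
    return lines

for line in describeSegments(sample):
    print(line)
```

Passing the real dictionary returned by getAudioToText() instead of the sample should work the same way, since the function only relies on those four segment keys.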

My notebook environment (Google Colab) tends to mess up when I try to print long strings, so I had to write a quick and dirty function to render the output of our getAudioToText() function. But if you’re running your code from a proper IDE, running print(text["text"]) should do the job:

def getText(data):
    # Print the transcript ten words per line
    tokens = data.split(" ")
    x = 0
    # Stop as soon as every token has been printed
    while x < len(tokens):
        print(" ".join(tokens[x:x+10]))
        x += 10

getText(text["text"])
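As an alternative to getText(), Python's standard textwrap module can do the wrapping for us. The sketch below wraps on character width rather than on a fixed number of words per line. The getWrappedText() name and the sample string are made up for the example.

```python
import textwrap

def getWrappedText(data, width=60):
    """Wrap a long string at word boundaries so no line exceeds `width` characters."""
    return textwrap.fill(data.strip(), width=width)

# Made-up stand-in for a long transcript
sample = "the quick brown fox jumps over the lazy dog " * 6
print(getWrappedText(sample))
```

With a real transcript, this would be called as print(getWrappedText(text["text"])).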

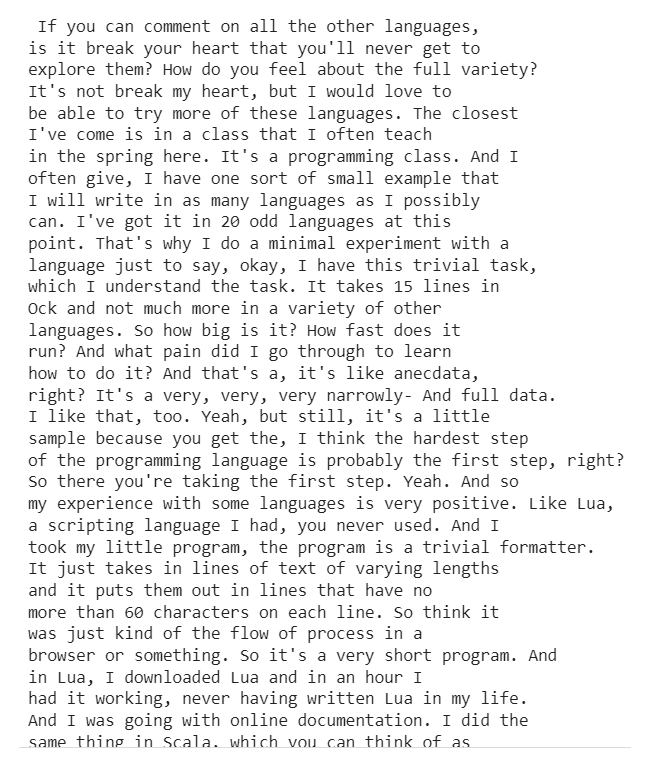

This is seriously impressive, and I’m pretty sure that extracting human speech from a slightly more complex video is going to work just as well.

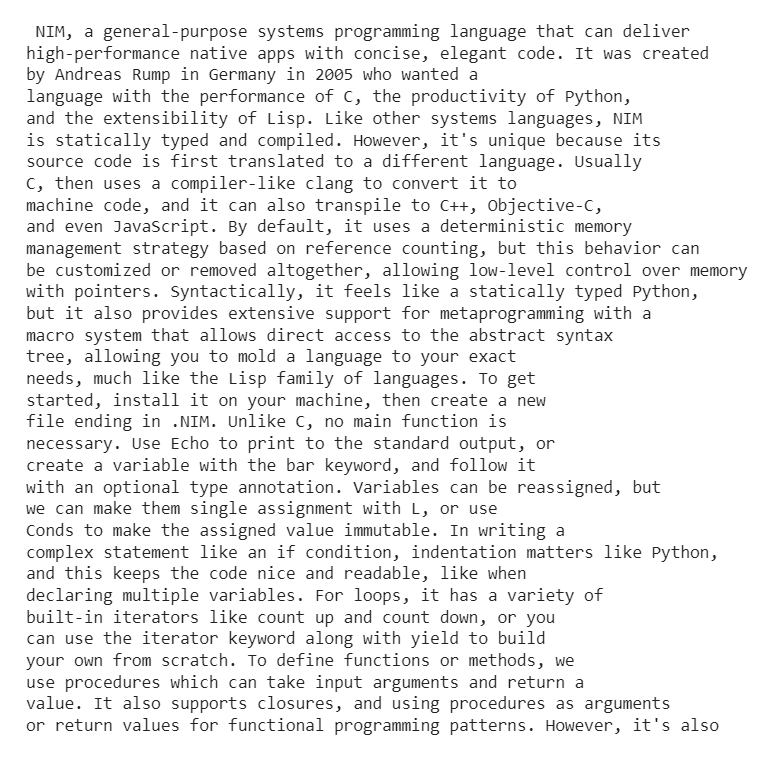

Whisper in 100 seconds

You’ve probably heard of Fireship, right? Not only is it one of my favourite YouTube channels, but I also think it’d be a perfect fit for our second round of testing. First, its owner, Jeff, speaks really fast. Besides, there is some very low-volume music in the background, as well as some minor sound effects. We’re going to try and see if Whisper can accurately transcribe the following video:

I picked Nim in 100 Seconds because it’s a great example of the high-quality content that this channel constantly delivers, and also because I’m a big fan of the Nim programming language. I wish I could spend more time working with it, and I’d love to see it get the attention it deserves.

Is this slightly more complex audio stream going to be a challenge for our model? Let’s find out!

getVideo("https://www.youtube.com/watch?v=WHyOHQ_GkNo")

text = getAudioToText("Nim in 100 Seconds.mp4")

getText(text["text"])

And there we are, same impressive results as with Lex Fridman’s video!

Careful Whisper

So far so good! We still have one final test to run. How are we going to pick our song though? Well let’s see what a quick search for the word “whisper” returns:

Now dear readers, we are OF COURSE going to try that song! I can’t believe I didn’t think about that song before! All we have to do is reuse our getVideo() and getAudioToText() functions one last time:

getVideo("https://www.youtube.com/watch?v=izGwDsrQ1eQ")

text = getAudioToText("Careless Whisper (Official Video).mp4")

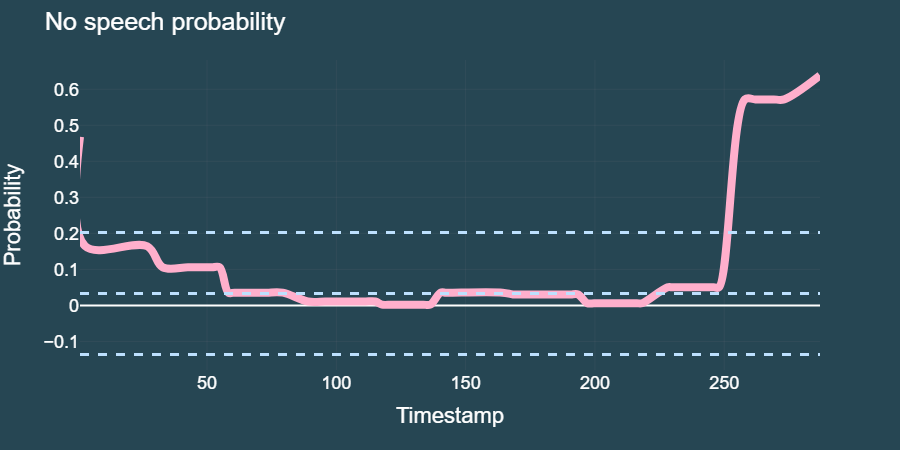

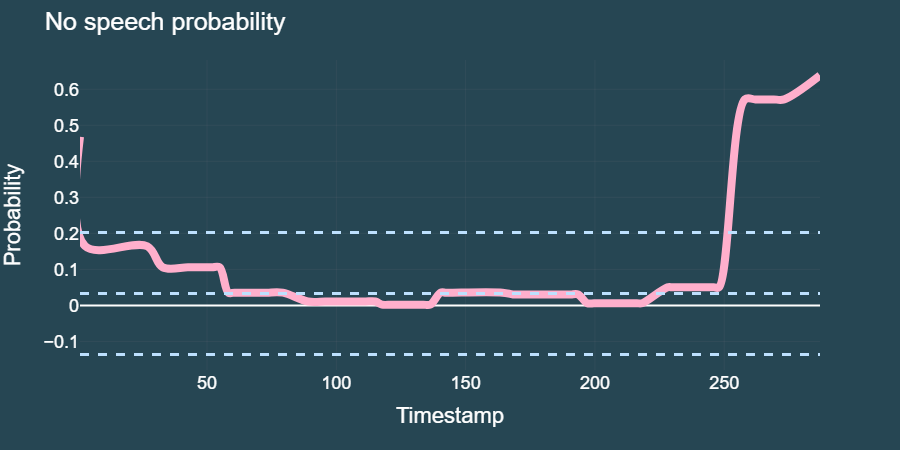

But wait! Remember earlier when I praised the folks at OpenAI for shipping a library that’s both performant and easy-to-use? We also briefly discussed the fact that Whisper was able to assess whether each segment contains some human voice or not. Well, why don’t we try to plot these values!

A line chart will probably do the trick, with the timestamps as the x-axis values and the ["no_speech_prob"] values as the y-axis. We might also want to add some statistical elements to our plot, for instance some upper and lower thresholds based on a combination of median and standard deviation values. Doing so using Matplotlib or Seaborn should be pretty easy.

However, I like my plots interactive, and over-complicated. So what we’re going to do instead, is do in 50 lines of code what we could have done in just 5 by using Plotly and add a lot of optional and fancy parameters.

import plotly.express as px
import statistics as stats

def getSpeechPlot(audio):
    # One point per segment: start time against no-speech probability
    x = [a["start"] for a in audio["segments"]]
    y = [a["no_speech_prob"] for a in audio["segments"]]

    # Flat reference lines: the median, and one standard deviation either side
    m = stats.median(y)
    s = stats.stdev(y)
    med = [m for _ in range(0,len(y))]
    up_stdev = [(m+s) for _ in range(0,len(y))]
    low_stdev = [(m-s) for _ in range(0,len(y))]

    fig = px.line(
        x=x,
        y=y,
        line_shape="spline",
        color_discrete_sequence=["#ffafcc"]
    )

    # Draw the three reference lines as dashed traces
    for line in [med,up_stdev,low_stdev]:
        fig.add_scatter(
            x=x,
            y=line,
            mode="lines",
            opacity=1,
            line=dict(
                color="#bde0fe",
                width=3,
                dash="dash"
            )
        )

    fig.update_layout(
        title="No speech probability",
        xaxis_title="Timestamp",
        yaxis_title="Probability",
        bargap=0.01,
        showlegend=False,
        #template="seaborn",
        plot_bgcolor="#264653",
        paper_bgcolor="#264653",
        width=900,
        height=450,
        font=dict(
            family="Arial",
            size=18,
            color="white"
        )
    )

    fig.update_xaxes(
        showgrid=True,
        gridwidth=0.1,
        gridcolor="#9a8c98"
    )

    fig.update_yaxes(
        showgrid=True,
        gridwidth=0.1,
        gridcolor="#9a8c98"
    )

    # Make the main probability curve thicker than the reference lines
    fig["data"][0]["line"]["width"]=8
    fig.show()

getSpeechPlot(text)

Alright, the above chart is quite interesting, and looks pretty accurate to me. I just listened to the whole song again, and can confirm two things:

- guilty feet have got no rhythm

- both the opening and end of the song don’t contain much singing. A saxophone (I think?) is the main instrument that can be heard

So once again, not bad! Here’s how we accessed the timestamps and corresponding values from the text dictionary that our model returned: we looped through the segments contained in ["segments"], and for each of them used the value stored under ["start"] for our x-axis and the one under ["no_speech_prob"] for the y-axis.
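The same two keys can also be used without a chart. As a rough sketch, the function below lists the segments whose no-speech probability sits above the upper dashed line of the plot (median plus one standard deviation), which is one possible way to flag the mostly instrumental parts of a song. The getQuietSegments() name, the choice of threshold, and the sample values are all assumptions made for the example.

```python
import statistics as stats

def getQuietSegments(audio):
    """Return the segments whose no-speech probability exceeds median + one stdev."""
    probs = [seg["no_speech_prob"] for seg in audio["segments"]]
    # stats.stdev() needs at least two values, so very short clips will raise
    threshold = stats.median(probs) + stats.stdev(probs)
    return [seg for seg in audio["segments"] if seg["no_speech_prob"] > threshold]

# Made-up values: a likely instrumental intro followed by four sung segments
sample = {"segments": [
    {"start": 0.0, "no_speech_prob": 0.9},
    {"start": 10.0, "no_speech_prob": 0.1},
    {"start": 20.0, "no_speech_prob": 0.1},
    {"start": 30.0, "no_speech_prob": 0.2},
    {"start": 40.0, "no_speech_prob": 0.1},
]}

for seg in getQuietSegments(sample):
    print(seg["start"], seg["no_speech_prob"])
```

On the real output, getQuietSegments(text) would be the call to try, keeping in mind that the threshold is arbitrary and may need tuning per song.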

All that’s left for us to do at this point, is check whether or not Whisper did a good job at capturing the actual lyrics for the song:

getText(text["text"])

Pretty cool eh!

The hoarse Whisperer

Sure, Whisper isn’t exactly perfect. I tried to experiment with a few metal bands, such as Cradle of Filth for instance, but the model clearly struggled to grasp what Dani Filth was saying. Now to be fair, so do I.

Am I an expert in the field of speech recognition? Absolutely not. And yet I feel like we’ve had some great fun testing out some of the core functionalities that Whisper provides. At my very modest level, I can already think of a ton of applications for this type of model.