Exploring String Distances With TypeScript and Talisman

An example of what we’ll be doing in this article

Most of the articles I write for this website are inspired by problems that I come across at work.

Seeing large influx of spammy, and most likely automatically generated user content is pretty common. And to be fair, most social media platforms have become quite good at catching that type of content before it can even start causing harm to their userbase.

Without going into too much detail as to what the particular type of issue we had to deal with was, let’s simply say that we decided to look for ways to identify and group character strings (like user names or email addresses) that “seemed” similar, and probably mapped to the same group of individuals.

We are using Apache Presto, a SQL query engine whose popularity has grown exponentially over the past couple of years. It’s both fast and reliable. Most importantly if you’re on the querying side of things, it provides a massive set of functions and operators that make most data analysis / science tasks much easier. In this specific case, Please note that my opinion on Presto might be slightly biased though, as I’ve always been a big fan of Apache products in general. This is making me think that I should probably write an article on Apache Superset, one of my farourite data exploration and visualization platforms.

Anyway, I quickly found a handful of string functions in the official documentation and was able to identify several clusters of character strings that shared high similarities. Problem solved!

Alright, but why use TypeScript and not Presto for this article then? Long story short, I have recently started learning TypeScript and like many other programmers before me, I have fallen in love with it. Besides, the JavaScript (and therefore TypeScript) ecosystem is home to literally a ton of amazing packages: if you can think of it, then it probably exists in some shape or form somewhere on npm. So if you’re looking for a strongly typed language that you can use for your data science projects, then look no further.

One quick note before we start: this article is going to be very beginner friendly. Nothing complicated, all we want to do here is come up with a quick and efficient way of assessing whether two strings can be reasonably considered to be similar or not.

He’s going the distance

So what exactly are we trying to do here? Let’s consider the following terms:

"horse"

"hoarse"

"horst"

"pizza"

The syntax for the first three terms sure looks somewhat similar, and yet they each convey a completely different meaning. As for the last of our four terms, not only is its meaning again unrelated, but it also looks completely different from the other words.

To try and compare these terms, we could take several approaches. We could for instance extract their corresponding part-of-speech tag, but that would be a pretty pointless exercise as they’re all nouns. We could also try to find a list of synonyms and see if some of these words match. For a lot of reasons, I don’t think that we’d be getting any good results this way either.

Or, we could simply use a string similarity metric, and compute a matrix of distances between all our terms. What these metrics do, is calculate the extent to which two strings are similar to one another. The resulting distance score is, as one can imagine, very limited in its practical applications. I wouldn’t recommend using any of these distance metrics for matching sentences, as most human languages are word-order dependent. But they can produce good enough results for some very specific cases, like spotting minor typos, or looking for similar-looking emails addresses as discussed in the opening lines of this article.

One last time, these metrics do not provide any information regarding the meaning of each term. For instance, the terms "dog" and "hound", though related, would likely return a value close to zero.

Talisman

Though there’s a ton of very interesting NLP-focused npm packages that data scientists can use, I find Talisman to be the most comprehensive when it comes to distance metrics and algorithms.

According to its creator, Talisman is:

"[..] a JavaScript library collecting algorithms, functions and various building blocks for fuzzy matching, information retrieval and natural language processing."

Their whole website may look a bit unimpressive at first glance, but there’s really more than meets the eye there. Talisman features a lot of useful tools that aren’t limited to the field of natural language processing. I highly recommend you to dig a bit deeper into their official documentation if you have some time, and check their list of currently supported inflectors, keyers and phonetics. Back to today’s article, we could probably use either of the following two metrics:

-

The Jaccard index is defined as the size of the intersection divided by the size of the union of the sample sets (thanks Wikipedia!). It returns a floating point between 0 and 1, with similar sample sets scoring a value of 1.

-

The Jaro–Winkler distance which also calculates the distance between two strings. Once again, the higher the value, the more similar the two sample sets. This is the metric that we’ll be using throughout this article.

To get started, simply npm i- the package, and import it as follows:

import jaroWinkler from "talisman/metrics/jaro-winkler";

Comparing two terms couldn’t be easier, as all we have to do is pass a pair of strings as arguments into the jaroWinkler() function:

const x: string = "raspberry";

const y: string = "strawberry";

const distance: number = jaroWinkler(x,y);

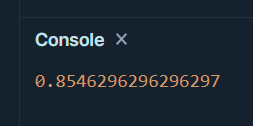

console.log(distance);

As expected, we obtain a score, which in this case confirms that our first two strings do share some similarities but aren’t identical either. Please note that we could also compare sentences instead of individual terms if we wanted to, as long as these sentences are in string format:

const x: string = "I love fruits";

const y: string = "I adore raspberries";

const distance: number = jaroWinkler(x,y);

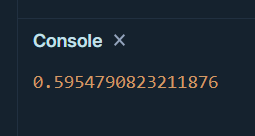

console.log(distance);

Oh and, while we’re here: the order of the characters within our pair of strings does matter. The example below shows that when fed a pair of anagrams, the returned Jaro-Winkler distance value is lower than 1:

const x: string = "raspberry";

const y: string = "byerarsrb";

const distance: number = jaroWinkler(x,y);

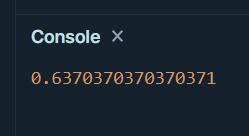

console.log(distance);

Now that’s all fine, but what if we had a larger array of strings whose similarity we wanted to compare against each other? Something like this:

const corpus: string[] = [

"I love fruits",

"I adore raspberries",

"TypeScript is cool",

"Programming is fun",

"Julien loves pizzas"

];

To do so, we can write a simple function that will loop through an array of terms, then compute the distance between each n element and all the elements contained within this same array. Our result scores will then be stored inside a new object named struct that we need to define first:

type distances = {

[key: string]: number[];

};

The function below is, I believe, pretty simple. I got stuck for a little while and kept getting this error message: TypeError: Cannot read properties of undefined (reading 'push'). After asking for help from the TypeScript community on Reddit, this can be avoided by checking for existing keys within the distances type:

const getMatrix = (data: string[]): distances => {

let inc: number = 1;

let sent: string = "Sentence_";

let struct: distances = {};

for (let da of data) {

for (let d of data) {

let key: string = `${sent}${inc}`;

if (!struct[key]) {

struct[key] = [];

}

let jaro: number = jaroWinkler(da,d).toFixed(2)

struct[`${sent}${inc}`].push(inc)

}

inc ++;

}

return struct;

}

const matrix: distances = getMatrix(corpus);

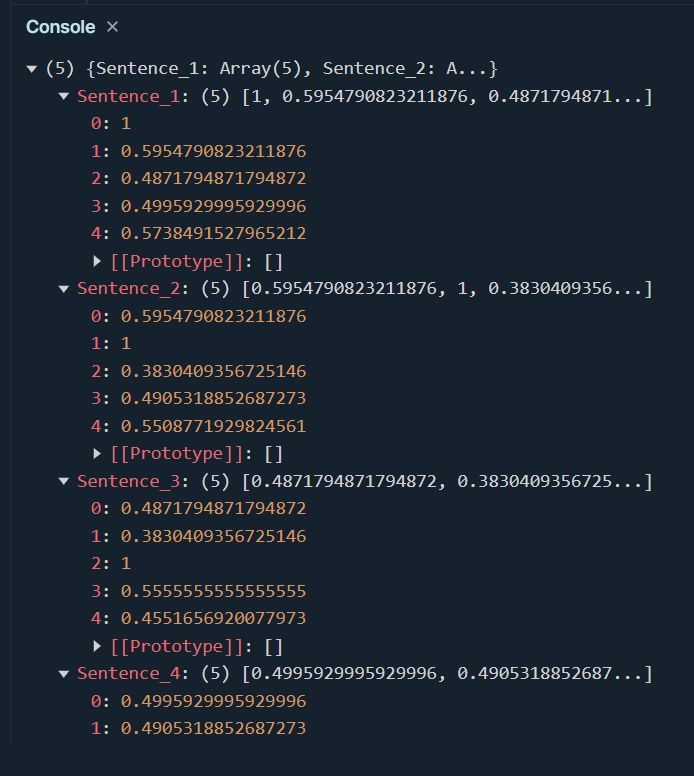

console.log(matrix)

That’s pretty cool right? But there’s at least one issue with the code above:

The only reason why the returned array of arrays is readable is because we looped through a small sample of four strings. What if we had worked with, say, 150 sentences or email addresses instead? Or thousands? Would we have been able to spot any potential correlations?

Distance lends enchantment and dataframes

Alright, so we now have some input data, and a function named getMatrix() that returns an array of objects where the keys for these objects refer to the order in which our strings are positioned within the data:

string_1, string_2, string_3, etc..

This specific structure seems to me like a perfect fit for a simple dataframe object. Let’s start by converting our array of objects into a dataframe object. For this, we’ll be using a great data toolkit package named Arquero. If you’re not familiar with this great library yet, I highly encourage you to read both the official documentation as well as an article that I wrote earlier this year and that shows how to perform basic-to-intermediate data manipulations.

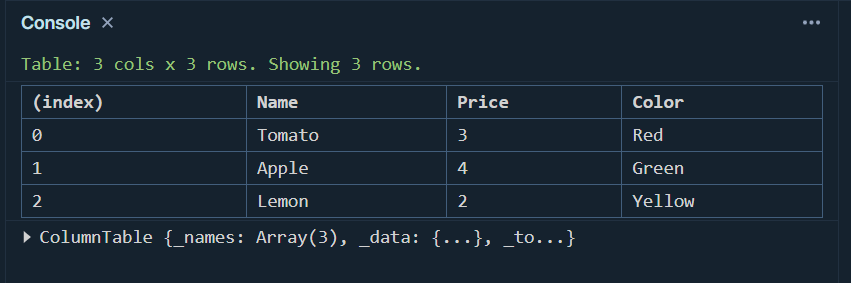

Here’s the easiest way to create a dataframe object:

import { all, desc, op, table } from 'arquero';

const d = {

Name: ["Tomato", "Apple", "Lemon"],

Price: [3,4,2],

Color: ["Red","Green","Yellow"]

}

const getTable = (data) => {

const dframe = table(data)

console.log(dframe.print())

}

getTable(d);

This means that we’re going to have to slightly modify our getMatrix() function, so that it incorporates a new key and values pair. This new pair will contain all the unique string names that we discussed earlier:

string_1, string_2, string_3, etc..

Let’s see how we can do this:

type distances = {

[key:string]: number[] | string[];

};

const getMatrix = (data: string[]): distances => {

let inc: number = 1;

let sent: string = "Sentence_";

let struct: distances = {};

const sentences: string[] = data.map(x => sent + inc++);

struct["Index"] = sentences;

inc = 1;

for (let da of data) {

for (let d of data) {

let key: string = `${sent}${inc}`;

if (!struct[key]) {

struct[key] = [];

}

let jaro: number = jaroWinkler(da,d).toFixed(2)

struct[`${sent}${inc}`].push(jaro)

}

inc ++;

}

return struct;

}

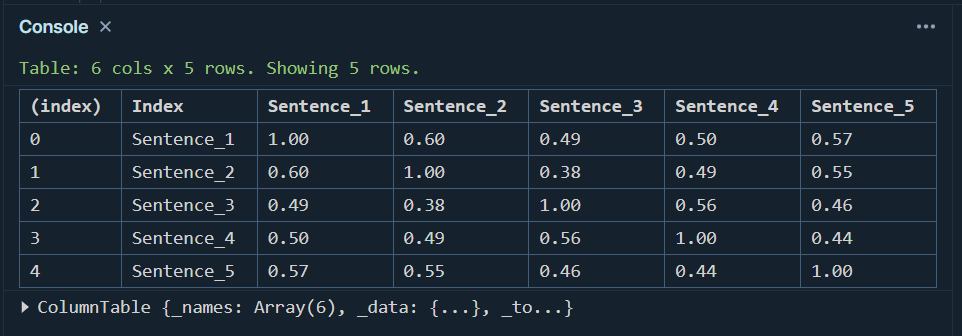

This slightly amended getMatrix() function can now be used to create a dataframe object:

const getTable = (data: distances) => {

const dframe = table(data)

console.log(dframe.print())

}

const matrix: distances = getMatrix(corpus);

getTable(matrix);

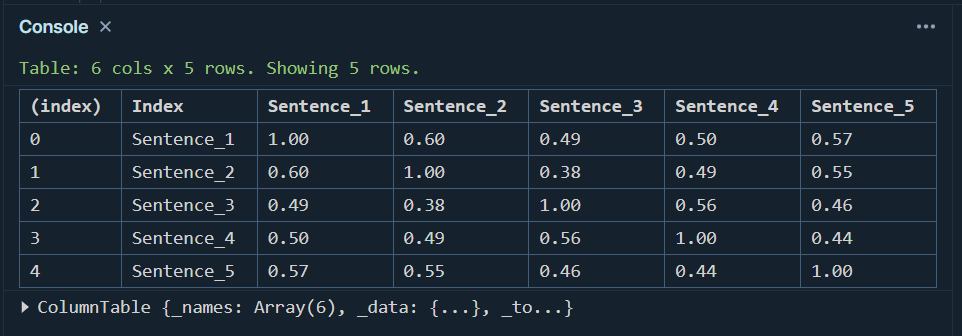

That’s already much better, right?

In the not-so-distant future

Visualising our data in table format has made it much easier to understand the potential correlations that we referred to in the previous section.

But I think we could do even better: we could for instance use one of JavaScript’s many data visualisation packages and create a heatmap plot for our array of arrays. Darker colours would indicate strong similarities, and make identifying similar character strings even easier.

This article being already way too long for its own good, let’s stop here for today though. Thanks for reading!