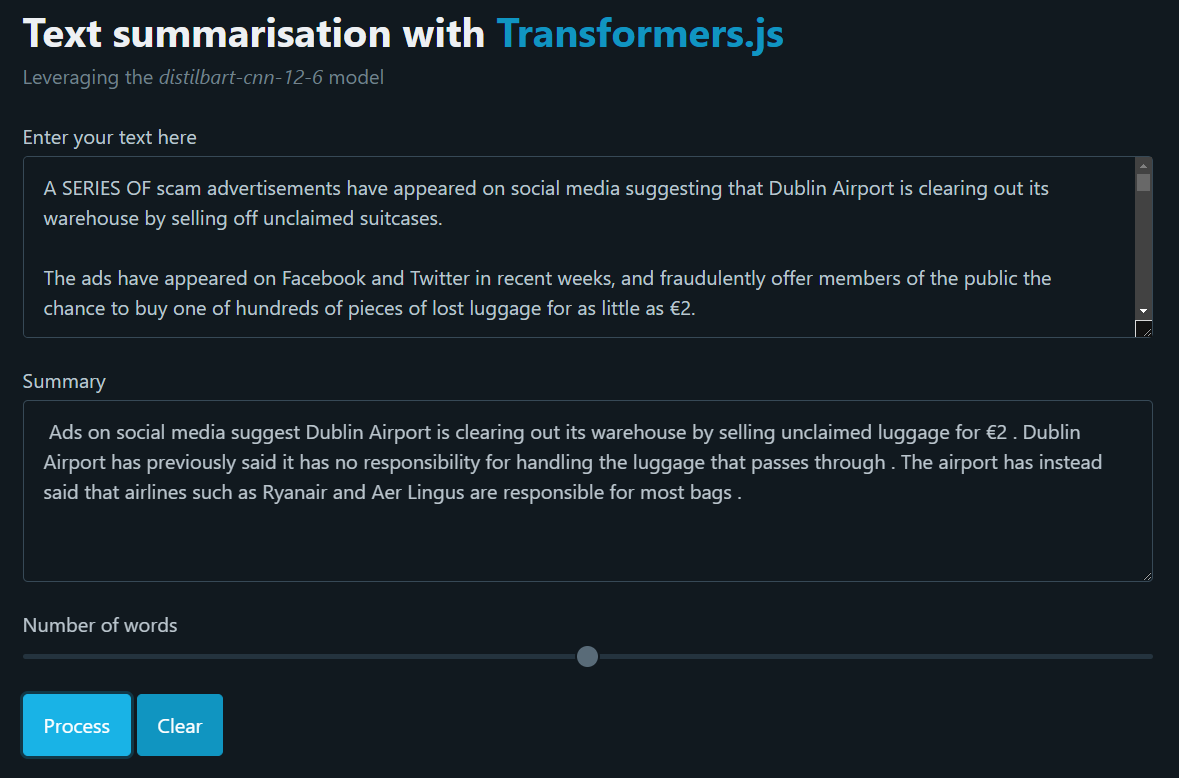

Text Summarisation in TypeScript With Transformers.js

An example of what we’ll be doing in this article

If you’re a long-time follower of this website, you probably know by now how much I’ve been advocating for the use of JavaScript (and TypeScript) as a second language for any data practitioner who might want to broaden their horizons and learn some new and useful skills.

I was therefore very excited when Hugging Face recently announced that they would soon be porting their state-of-the-art transformers libraries to the JavaScript ecosystem. Back in 2021, Google had also started supporting JavaScript as one of the languages to work with their flagship deep learning framework TensorFlow. Yes, you have read correctly: not R, not Julia. Just Python, and now JavaScript! We even now have some very good learning materials, such as “Learning TensorFlow.js” from Gant Laborde.

The good news here is that we won’t need to go through a 300+ page book to start having fun with Hugging Face’s Transformers.js package. Its learning curve is, luckily enough, much smoother than TensorFlow’s. Besides, the fact that its main modules can be called directly on the client side through a Content Delivery Network is just the icing on the cake.

Right now, Transformers.js supports multiple natural language processing tasks such as text classification, text generation, translation, plus a ton of other cool stuff. It can actually even write some poetry for you if you like!

What we’re going to do today is perform some simple text summarisation using the TextRank ranking algorithm, and then see how we can leverage Transformers.js’s summarization pipeline to try and improve on our initial process.

Text summa..what?

Text summarisation is a pretty self-explanatory concept. Of all the popular natural language processing tasks, it is probably the easiest to understand for a non-tech audience: say you have a document and you want a shorter version of it, but you also want to make sure that the shorter version keeps the essential information that your main document contains.

Though text summarisation seems like a fairly simple concept, it nonetheless presents some interesting challenges:

- How do we estimate what an acceptable size for a summary might be?

- How do we filter out the non-essential information?

- How do we evaluate the accuracy and relevance of the summary?

Let’s answer the first two questions first. Roughly speaking, you’ll mainly encounter two types of text summarization: abstractive summarization techniques and extractive summarization techniques. Though we won’t go into too much detail as this is not the purpose of this article, we’ll be focusing mainly on the second technique (the first one being much more complex).

Traditionally, we’re aiming for a two-phase approach that combines keyword extraction and topic modeling. The first phase identifies the most important parts of a document, and the second phase condenses those parts into the final summary.

If you’re not too familiar with topic modeling, I’ve written a few articles that you might want to briefly go through:

- BERTopic, or How to Combine Transformers and TF-IDF for Topic Modelling

- Evaluate Topics Coherence With Palmetto

- Topic Modelling Visualisation With AnyChart.js

- Topic Modelling - Part 1

- Topic Modelling - Part 2

Likewise, we won’t be discussing the third question in this article, but if you’re looking for an evaluation framework, you might want to look at Recall-Oriented Understudy for Gisting Evaluation, more commonly referred to as ROUGE.
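
To give a rough idea of what ROUGE measures, here is a minimal sketch of ROUGE-1, the variant that counts overlapping single words between a generated summary and a reference text. The tokeniser is deliberately naive, and the names tokenize, countTokens and rouge1 are mine rather than part of any package, so treat this as an illustration and not as a replacement for a proper evaluation library.

```typescript
// Lowercase a text and split it into word tokens (a deliberately naive tokeniser).
const tokenize = (text: string): string[] => {
  const tokens: string[] = text.toLowerCase().match(/[a-z0-9']+/g) ?? [];
  return tokens;
};

// Count how many times each token occurs.
const countTokens = (tokens: string[]): Map<string, number> => {
  const counts = new Map<string, number>();
  tokens.forEach(token => counts.set(token, (counts.get(token) ?? 0) + 1));
  return counts;
};

type RougeScore = { precision: number; recall: number; f1: number };

// ROUGE-1: unigram overlap between a candidate summary and a reference text.
const rouge1 = (candidate: string, reference: string): RougeScore => {
  const candTokens = tokenize(candidate);
  const refTokens = tokenize(reference);
  const candCounts = countTokens(candTokens);
  const refCounts = countTokens(refTokens);

  // A word counts as shared at most as many times as it appears in both texts.
  let overlap = 0;
  refCounts.forEach((refCount, token) => {
    overlap += Math.min(refCount, candCounts.get(token) ?? 0);
  });

  const precision = candTokens.length > 0 ? overlap / candTokens.length : 0;
  const recall = refTokens.length > 0 ? overlap / refTokens.length : 0;
  const f1 = precision + recall > 0 ? (2 * precision * recall) / (precision + recall) : 0;
  return { precision, recall, f1 };
};
```

In the context of this article, the candidate would be the generated summary and the reference would be the excerpt written by the journalist. A high recall means that most of the words of the excerpt also show up in our summary.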

Life before Attention became Everything

Before Transformers and LLMs took the world by storm, ranking models were the bread and butter of every NLP practitioner. You’ve probably come across TF-IDF, easily the most popular member of the ranking models family. But have you ever heard of TextRank and its multiple siblings?

I say siblings because TextRank is only one of several ranking algorithms used for text summarization; LexRank is another well-known one.

To simplify, TextRank came up in 2004 when Rada Mihalcea and Paul Tarau published a research paper entitled TextRank: Bringing Order into Texts.

It starts by creating word embeddings for each sentence within a given corpus, then calculates sentence similarities and stores them in a similarity matrix. Later, this matrix gets converted into a graph. How you get a summary is then simple: the model takes the sentences that rank highest in the graph.
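
The steps above can be sketched in a few dozen lines. This is not the code of the package we’ll be using later: it is a simplified illustration that relies on the word-overlap similarity described in the 2004 paper instead of embeddings, with a naive sentence splitter. The function names, the damping factor of 0.85 and the 50 iterations are assumptions of mine.

```typescript
// Naive sentence splitter: cut after runs of ., ! or ?
const splitSentences = (text: string): string[] => {
  const matches: string[] = text.match(/[^.!?]+[.!?]*/g) ?? [];
  return matches.map(s => s.trim()).filter(s => s.length > 0);
};

// Set of lowercased words found in a sentence.
const wordSet = (sentence: string): Set<string> => {
  const words: string[] = sentence.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  return new Set(words);
};

// Word-overlap similarity: shared words, normalised by the sentence lengths.
const similarity = (a: Set<string>, b: Set<string>): number => {
  const norm = Math.log(a.size) + Math.log(b.size);
  if (!(norm > 0)) return 0; // empty or one-word sentences
  let common = 0;
  a.forEach(word => { if (b.has(word)) common += 1; });
  return common / norm;
};

const rankSentences = (text: string, topN: number, damping = 0.85, iterations = 50): string[] => {
  const sentences = splitSentences(text);
  const words = sentences.map(wordSet);
  const n = sentences.length;

  // Similarity matrix, read as a weighted graph between sentences.
  const weights = sentences.map((_, i) =>
    sentences.map((_, j) => (i === j ? 0 : similarity(words[i], words[j])))
  );
  const outSums = weights.map(row => row.reduce((sum, w) => sum + w, 0));

  // PageRank-style iteration: a sentence scores high when it is similar
  // to other sentences that themselves score high.
  let scores: number[] = sentences.map(() => 1);
  for (let step = 0; step < iterations; step++) {
    scores = scores.map((_, i) => {
      let sum = 0;
      for (let j = 0; j < n; j++) {
        if (outSums[j] > 0) sum += (weights[j][i] / outSums[j]) * scores[j];
      }
      return 1 - damping + damping * sum;
    });
  }

  // Keep the topN best sentences, then restore their original order.
  return scores
    .map((score, index) => ({ score, index }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topN)
    .sort((a, b) => a.index - b.index)
    .map(({ index }) => sentences[index]);
};
```

Calling rankSentences(article, 3) returns the three sentences that are the most connected to the rest of the text. A sentence that shares almost no vocabulary with the others ends up at the bottom of the ranking.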

Naturally, this approach has its flaws. As each sentence in the input document is given a score based on its relation to other sentences within that document, pre-processing steps such as sentence splitting end up greatly affecting the quality of the output summary.

Besides, most summarisation algorithms are sensitive to the size of the text given as input. I’ve personally had issues using these ranking models to generate summaries of entire books.
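
One possible workaround for long inputs is to cut the text into smaller chunks and summarise each chunk separately. The sketch below groups whole sentences until a word budget is reached. The chunkBySentences name and the maxWords parameter are hypothetical, and the sentence splitting is as naive as it gets, so consider this a starting point only.

```typescript
// Group whole sentences into chunks of at most maxWords words.
// A single sentence longer than maxWords still gets its own chunk.
const chunkBySentences = (text: string, maxWords: number): string[] => {
  const matches: string[] = text.match(/[^.!?]+[.!?]*/g) ?? [];
  const sentences = matches.map(s => s.trim()).filter(s => s.length > 0);

  const chunks: string[] = [];
  let current: string[] = [];
  let currentWords = 0;

  for (const sentence of sentences) {
    const words = sentence.split(/\s+/).length;
    // Close the current chunk when adding this sentence would exceed the budget.
    if (current.length > 0 && currentWords + words > maxWords) {
      chunks.push(current.join(" "));
      current = [];
      currentWords = 0;
    }
    current.push(sentence);
    currentWords += words;
  }
  if (current.length > 0) chunks.push(current.join(" "));
  return chunks;
};
```

Each chunk can then be passed to whichever summariser you prefer, and the partial summaries concatenated. Whether this gives a good summary of an entire book is another matter, but it at least keeps each input at a manageable size.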

Anyway, time to move on to some practical examples!

If you haven’t tried TypeScript yet, but have experience with Node.js, this short video will help you set up your environment. If you’re lost, bear in mind that the most important step here is to make sure that your tsconfig.json file is properly configured:

Now that we’re ready to go, we simply have to create a folder and npm i the TextRank-node package, which is one of the many JavaScript implementations of the ranking model that we just discussed.

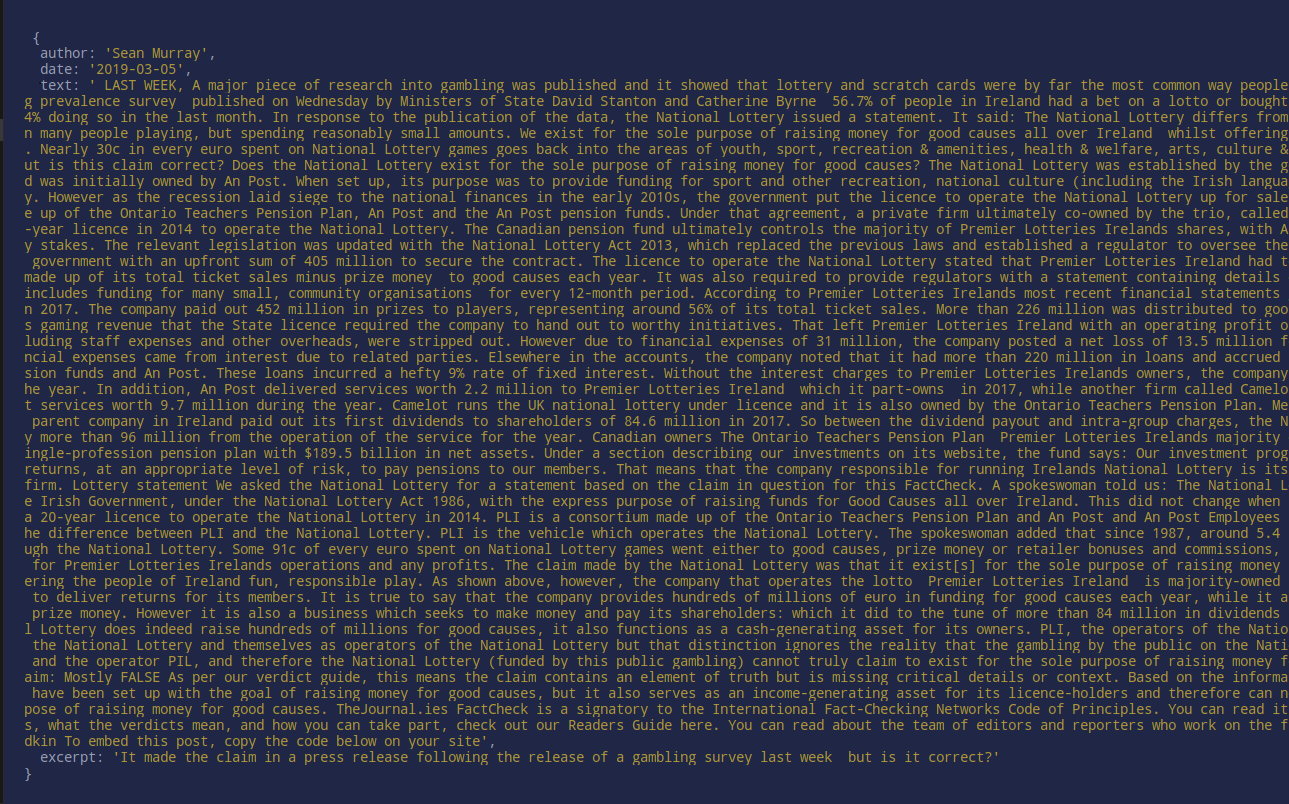

A few weeks ago, we saw how to scrape any news article using a Python library named Trafilatura. We managed to extract all the articles from the Fact-Check section of a popular Irish online newspaper called The Journal. If you remember, we saved all these articles to a single JSON file, where each entry contains the full article as well as its corresponding excerpt that we’ll be comparing our summary output against.

I have uploaded this JSON file onto my GitHub account, and this is what a random entry currently looks like:

const url: string = "https://raw.githubusercontent.com/julien-blanchard/datasets/main/fact_check_articles.json";

const fetchData = async (file: string) => {

const request = new Request(file);

return fetch(request)

.then(req => { return req.json() })

.then(results => {

let result: any = results;

let num_articles: number = Object.keys(result).length;

let random_article: number = Math.floor(Math.random() * num_articles)

console.log(result[random_article])

}

)

}

await fetchData(url);

We can now slightly amend our fetchData() function so that it returns a random article and its corresponding excerpt for any of the 667 articles that the JSON file contains:

const url: string = "https://raw.githubusercontent.com/julien-blanchard/datasets/main/fact_check_articles.json";

type Corpus = { [key: string]: string }

const fetchData = async (file: string) => {

const request = new Request(file);

return fetch(request)

.then(req => { return req.json() })

.then(results => {

let result: any = results;

let num_articles: number = Object.keys(result).length;

let random_article: number = Math.floor(Math.random() * num_articles);

let corpus: Corpus = {

Article: result[random_article]["text"],

Excerpt: result[random_article]["excerpt"]

}

return corpus;

}

)

}

const text: Corpus = await fetchData(url);

console.log(text);

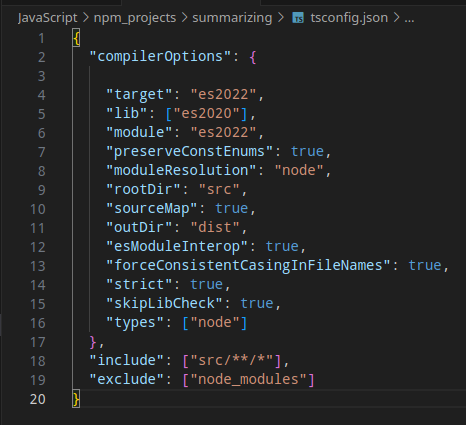

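At this point, you’re likely going to see a Top-level 'await' expressions are only allowed... error message. Yeah, so there’s a whole thread on Stack Overflow as to why we’re hitting a wall here. As we’re really not building anything too complex, the best way to solve this problem is to simply modify your tsconfig.json file so that it resembles mine. What matters is that the module option is set to es2022 or later (esnext, for instance) and that the target option is set to es2017 or higher, as these are the conditions under which the compiler accepts top-level await.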

Now, TypeScript and the TextRank package aren’t exactly best friends, and you’ll probably see an error message when compiling your .ts file to plain JavaScript. The good news is that it behaves like a warning: we’ll still get a nice index.js file, or whichever name you chose to give it when initialising the npm project. So don’t worry.

With this out of the way, let’s work on our first summary:

import * as tr from "textrank";

const text: Corpus = await fetchData(url);

let textRank: any = new tr.TextRank(text["Article"]);

let summary: string = textRank.summarizedArticle

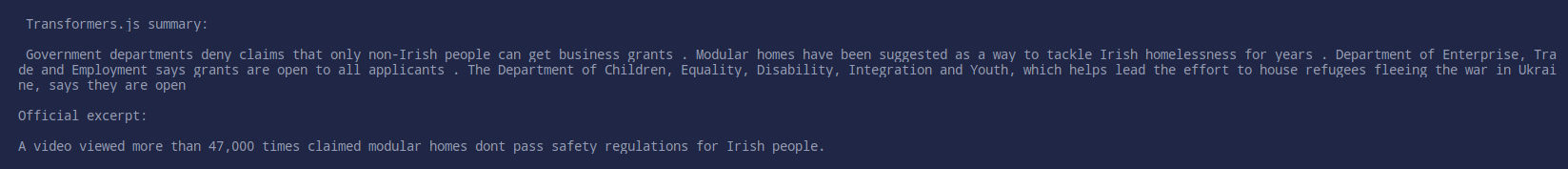

console.log(`TextRank summary:\n\n${summary}\n\nOfficial excerpt:\n\n${text["Excerpt"]}`);

That’s pretty straightforward, right? Now what we really want is to be able to pass some parameters to our TextRank object. The good news is that TextRank-node allows us to tweak its default settings: we can manually set the number of extracted sentences, pick our own similarity function, and choose whether we want the object to return a string or an array:

type params = {

[key: string]: number | string

};

const getSummary = (text: string, n_sentences: number): string => {

let settings: params = {

extractAmount: n_sentences,

d: 0.95,

summaryType: "string"

}

let textRank: any = new tr.TextRank(text, settings);

let result: string = textRank.summarizedArticle;

return result;

}

const text: Corpus = await fetchData(url);

let summary: string = getSummary(text["Article"],3);

console.log(`TextRank summary:\n\n${summary}\n\nOfficial excerpt:\n\n${text["Excerpt"]}`);

Now, how about that? You have probably noticed one small issue in the screenshot above: we told our model to limit itself to 3 sentences, but it cheated by producing very long sentences. Let’s see if we can do better!
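
To put a number on that impression, we can compare the size of a summary with the size of the article it comes from. The helper below is a small sketch of mine, not something provided by the TextRank package, and the names countWords and summaryStats are hypothetical.

```typescript
type SummaryStats = { sentences: number; words: number; compression: number };

// Count whitespace-separated words.
const countWords = (text: string): number =>
  text.trim().split(/\s+/).filter(word => word.length > 0).length;

// Sentence count, word count, and share of the article kept in the summary.
const summaryStats = (article: string, summary: string): SummaryStats => {
  const matches: string[] = summary.match(/[^.!?]+[.!?]*/g) ?? [];
  const sentences = matches.filter(s => s.trim().length > 0).length;
  const words = countWords(summary);
  const articleWords = countWords(article);
  return {
    sentences,
    words,
    compression: articleWords > 0 ? words / articleWords : 0
  };
};
```

Running summaryStats(text["Article"], summary) on a three-sentence summary makes it obvious when those three sentences still represent a large share of the original article.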

Transformers.js

According to Hugging Face’s website, Transformers.js sounds like a very promising project:

“State-of-the-art Machine Learning for the web. Run Transformers directly in your browser, with no need for a server!”

And what’s really great here is that the Transformers.js package seems to be entirely based on its Python counterpart. In other words, we shouldn’t be missing any of the features that the comprehensive Python library offers, which suggests that this is not a watered-down version of the popular library.

If you want some basic understanding of how the transformers architecture works, Dale Markowitz’s “Transformers, Explained: Understand the Model Behind GPT-3, BERT, and T5” is a good place to start:

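Our articles will be fed to a distilbart-cnn-12-6 model (research paper), which was pre-trained specifically for text summarisation. Note that the snippet that follows doesn’t download the model: it sends our text to Hugging Face’s hosted Inference API through the @huggingface/inference client, which is why an access token is needed.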

import { HfInference } from "@huggingface/inference";

const getSummary = async (text: string, max_output: number): Promise<{[key: string]: string}> => {

let HF_ACCESS_TOKEN: string = "********";

const inference: any = new HfInference(HF_ACCESS_TOKEN);

const result: {[key: string]: string} = await inference.summarization({

model: "sshleifer/distilbart-cnn-12-6",

inputs: text,

parameters: {

max_length: max_output

}

})

return result;

}

const text: Corpus = await fetchData(url);

let summary: {[key: string]: string} = await getSummary(text["Article"], 80);

console.log(`Transformers.js summary:\n\n${summary["summary_text"]}\n\nOfficial excerpt:\n\n${text["Excerpt"]}`);

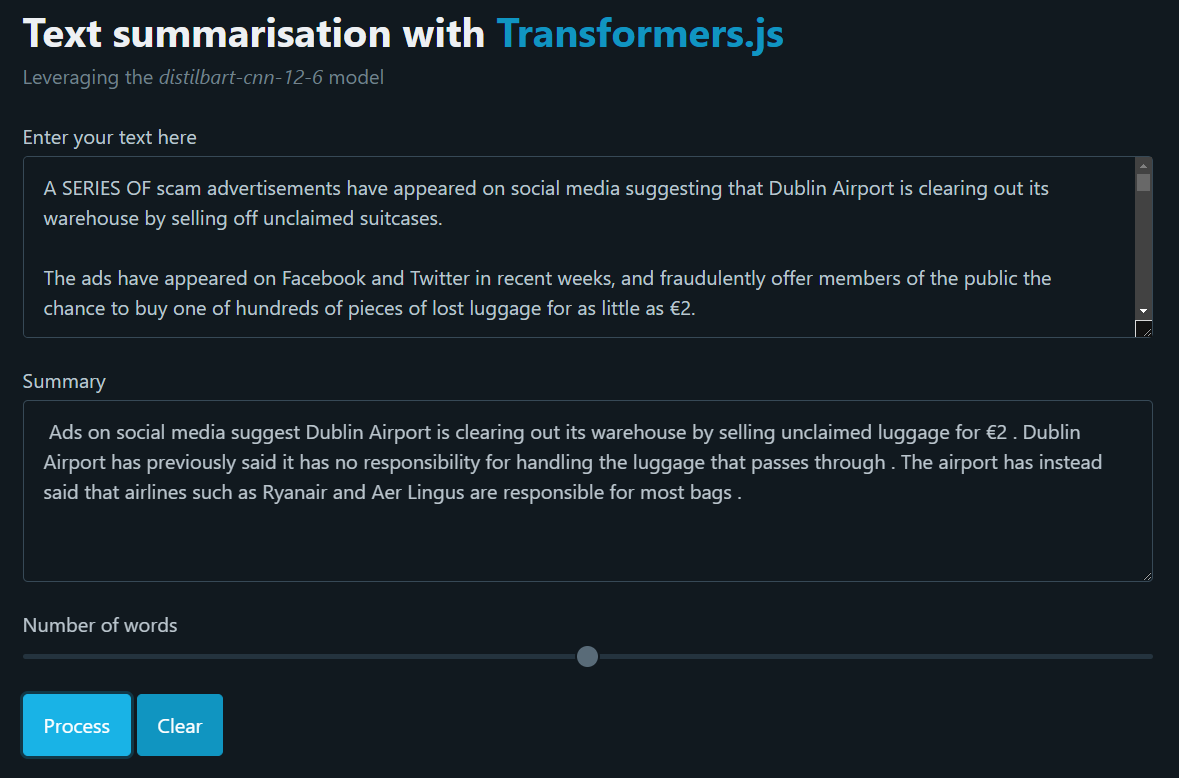

The summary that the pre-trained distilbart-cnn-12-6 model generated seems to match the original author’s excerpt. I have run the index.js file several times, tweaking the max_length value, and was amazed to see how close each summary was to the article’s corresponding excerpt.

On the downside, it took the model an average of 2 seconds to process each of these moderate-sized articles, and we’re of course limited in the number of API calls that we can make on a daily basis.

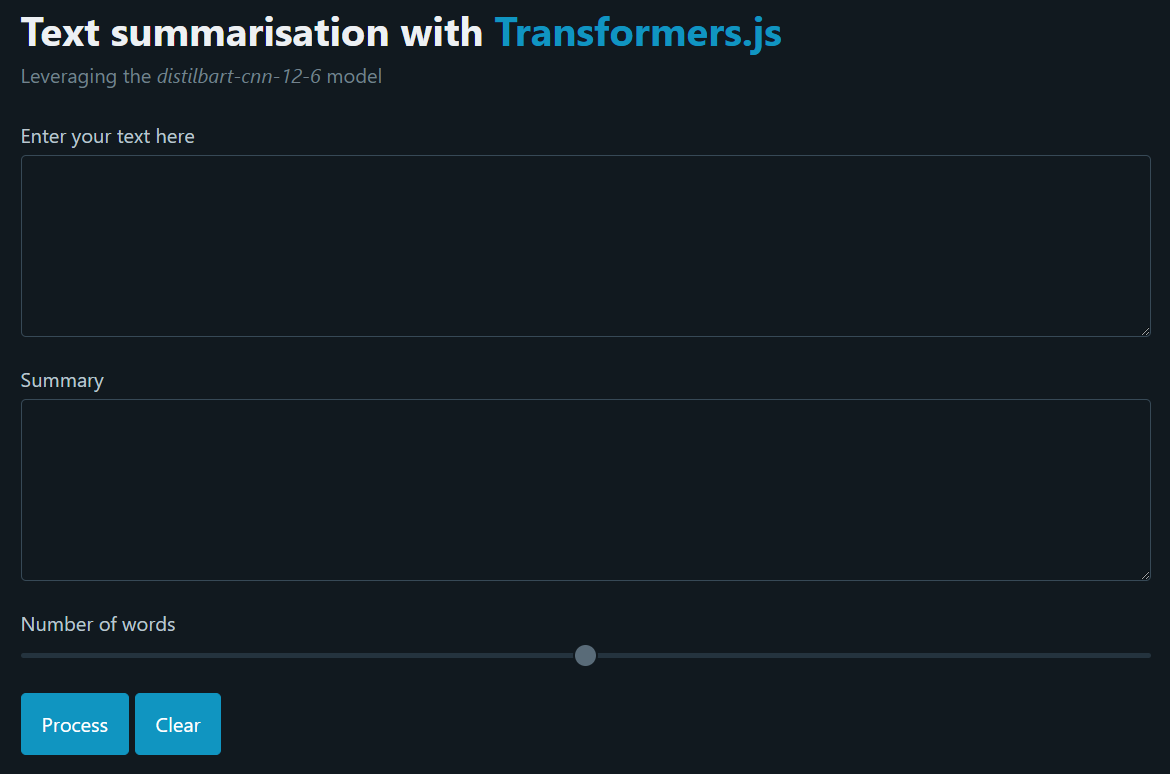

NLP in your browser

Here’s why we’ve been using TypeScript all along instead of Python: as briefly mentioned earlier, Transformers.js can be run directly in the browser. This means that we can easily embed it within any web application, website, or browser extension!

A comprehensive list of supported tasks and models can be found here and includes natural language processing, computer vision, audio, etc.
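
For reference, here is roughly what running a summarisation model locally with Transformers.js could look like, as opposed to calling the hosted API. This is a hedged sketch: it assumes the @xenova/transformers package is installed and that the Xenova/distilbart-cnn-6-6 checkpoint is available, and the summariseLocally and loadFromTransformersJs names are mine. The loader is passed as a parameter so that the rest of the function can be tried out without downloading a model.

```typescript
type SummaryOutput = Array<{ summary_text: string }>;
type Generator = (text: string, options: { max_new_tokens: number }) => Promise<SummaryOutput>;
type GeneratorLoader = (model: string) => Promise<Generator>;

// Assumption: the "@xenova/transformers" package exposes pipeline(task, model).
// The module name sits in a variable so that it is only resolved at run time.
const loadFromTransformersJs: GeneratorLoader = async (model) => {
  const moduleName: string = "@xenova/transformers";
  const { pipeline } = await import(moduleName);
  return pipeline("summarization", model);
};

// Load the model (downloaded on first use), then summarise the text.
const summariseLocally = async (
  text: string,
  maxNewTokens: number,
  loader: GeneratorLoader = loadFromTransformersJs,
  model: string = "Xenova/distilbart-cnn-6-6" // assumed checkpoint name
): Promise<string> => {
  const generator = await loader(model);
  const output = await generator(text, { max_new_tokens: maxNewTokens });
  return output[0].summary_text;
};
```

With the package installed, await summariseLocally(text["Article"], 80) should return a summary without any access token or daily quota, at the cost of downloading the model weights the first time. In a browser, the same package can be imported from a CDN instead of npm.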

Let’s start by building a simple HTML file, relying on Pico.css for the overall look and feel of our webpage. Feel free to check out this article I wrote last year if you’re not familiar with minimalist CSS frameworks. Name that HTML file however you want to and paste what follows into it:

<!DOCTYPE html>

<html lang="en" data-theme="dark">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<link rel="stylesheet" href="https://unpkg.com/@picocss/pico@latest/css/pico.min.css">

<title>Text summarisation with Transformers.js</title>

</head>

<body>

<main class="container">

<div class="headings">

<h1>Text summarisation with <a href="" target="_blank">Transformers.js</a></h1>

<p>Leveraging the <i>distilbart-cnn-12-6</i> model</p>

</div>

<form>

<label>Enter your text here</label>

<textarea rows="5" cols="80" id="user_input"></textarea>

<label>Summary</label>

<textarea rows="5" cols="80" id="user_output"></textarea>

<label for="range">Number of words

<input type="range" min="50" max="100" value="75" id="num_words" name="range">

</label>

<a href="#" role="button" id="click_process">Process</a>

<a href="#" role="button" id="click_clear">Clear</a>

</form>

</main>

</body>

</html>

Our simple website looks pretty neat. All we have to do now is slightly amend our TypeScript functions and add in a few event listeners. Right after the </main> tag but still within the <body></body> elements, simply paste in the following lines of JavaScript code:

<script type="module">

import { HfInference } from 'https://cdn.jsdelivr.net/npm/@huggingface/inference@2.6.4/+esm';

let process = document.getElementById("click_process");

let clear = document.getElementById("click_clear")

const getSummary = async () => {

let text_input = document.getElementById("user_input").value;

let n_words = Number(document.getElementById("num_words").value); // slider values are strings, max_length expects a number

let text_output = document.getElementById("user_output");

let HF_ACCESS_TOKEN = "********";

const inference = new HfInference(HF_ACCESS_TOKEN);

const result = await inference.summarization({

model: "sshleifer/distilbart-cnn-12-6",

inputs: text_input,

parameters: {

max_length: n_words

}

})

text_output.value = result["summary_text"]; // a textarea is updated through .value, like in clearInput()

console.log(result["summary_text"]);

}

const clearInput = () => {

document.getElementById("user_input").value = "";

document.getElementById("user_output").value = "";

}

process.addEventListener("click", getSummary)

clear.addEventListener("click", clearInput)

</script>

Reload the HTML page, paste some random article into the first text box (here’s the one I’m using), hit the Process button and you should see a summary of your input text show up in the second text box.

That’s it for today! Feel free to reach out to me if you need any help!

Full code:

<!DOCTYPE html>

<html lang="en" data-theme="dark">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<link rel="stylesheet" href="https://unpkg.com/@picocss/pico@latest/css/pico.min.css">

<title>Text summarisation with Transformers.js</title>

</head>

<body>

<main class="container">

<div class="headings">

<h1>Text summarisation with <a href="" target="_blank">Transformers.js</a></h1>

<p>Leveraging the <i>distilbart-cnn-12-6</i> model</p>

</div>

<form>

<label>Enter your text here</label>

<textarea rows="5" cols="80" id="user_input"></textarea>

<label>Summary</label>

<textarea rows="5" cols="80" id="user_output"></textarea>

<label for="range">Number of words

<input type="range" min="50" max="100" value="75" id="num_words" name="range">

</label>

<a href="#" role="button" id="click_process">Process</a>

<a href="#" role="button" id="click_clear">Clear</a>

</form>

</main>

<script type="module">

import { HfInference } from 'https://cdn.jsdelivr.net/npm/@huggingface/inference@2.6.4/+esm';

let process = document.getElementById("click_process");

let clear = document.getElementById("click_clear")

const getSummary = async () => {

let text_input = document.getElementById("user_input").value;

let n_words = Number(document.getElementById("num_words").value); // slider values are strings, max_length expects a number

let text_output = document.getElementById("user_output");

let HF_ACCESS_TOKEN = "********";

const inference = new HfInference(HF_ACCESS_TOKEN);

const result = await inference.summarization({

model: "sshleifer/distilbart-cnn-12-6",

inputs: text_input,

parameters: {

max_length: n_words

}

})

text_output.value = result["summary_text"]; // a textarea is updated through .value, like in clearInput()

console.log(result["summary_text"]);

}

const clearInput = () => {

document.getElementById("user_input").value = "";

document.getElementById("user_output").value = "";

}

process.addEventListener("click", getSummary)

clear.addEventListener("click", clearInput)

</script>

</body>

</html>