Simplify Website Scraping With Trafilatura

Below is an example of what we’ll be doing in this article:

In early 2022, I wrote a very basic Python program to scrape some articles from an Irish website named The Journal. Long story short, all I needed at that time was to capture the content of Covid-related articles as well as their attached user comments, and attempt to train a model on that data.

A bit less than six months later, that simple .py file suddenly started returning errors, and eventually stopped working altogether. What happened is pretty common: not only had The Journal made some slight tweaks to the overall design of their website, but they had also changed the class names on most of their HTML tags. A few months later, they ended up moving all these articles to a dedicated Fact-check section, along with tons of comments that I should actually be using now to train the aforementioned model.

But the truth is, adapting to these constant design changes takes a lot of effort, and doesn’t scale well if you intend to work with more than a handful of sources.

So if, like me, you need to do some basic website extraction and are growing tired of maintaining the same scripts, you might have wondered whether there was an easy-to-use library that could understand the HTML structure of a webpage for you. Well, this is exactly what Trafilatura was built to do, and we’re going to see how to perform some basic content extraction in just a few lines of code.

Not as easy as duck soup

To better illustrate how tedious web scraping can be, let’s go through a simple example. Say we want to extract content from a website, for instance a review of Vladimir Nabokov’s Lolita, one of the greatest novels ever written. By leveraging a popular HTML parser like Beautiful Soup alongside the Requests library, we can write a simple function that stores some basic information from this article:

```python
from pprint import pprint

import requests
from bs4 import BeautifulSoup


def getArticle(url):
    # Download the page and parse its HTML
    page = requests.get(url, timeout=10)
    page.raise_for_status()
    soup = BeautifulSoup(page.content, "html.parser")

    # Every field relies on a class name specific to this site's current markup:
    # if a class gets renamed, find() returns None and .text raises an AttributeError
    struct = {}
    struct["Published"] = soup.find("span", class_="dcr-u0h1qy").text.strip()
    struct["Title"] = soup.find("h1", class_="dcr-y70mar").text.strip()
    struct["Author"] = soup.find("div", class_="dcr-172h0f2").text.strip()
    struct["Excerpt"] = soup.find("div", class_="dcr-jew0bi").text.strip()
    struct["Content"] = " ".join(
        p.text.strip() for p in soup.find_all("p", class_="dcr-19m3vvb")
    )
    return struct


article = getArticle("https://www.theguardian.com/childrens-books-site/2016/mar/01/lolita-vladimir-nabokov-review")
pprint(article)
```

That’s all fine, in the sense that this script will work, at least for a while. But we only targeted a single website, where all pages look alike, and even then we had to inspect the page’s specific HTML structure, identify the key elements, find their class or id names, and so on. What if we were instead looking for a specific theme or keyword, and wanted to retrieve the content of multiple articles spread across several different websites?

For instance, let’s look for more reviews of other great novels written by Nabokov. A quick Google search returns quite a few results and, as you might expect, all the websites that you can see in the screenshot below have a very distinct HTML structure. Following the approach we just saw, we would have to rewrite the above code for each website we want to target. On top of that, we’d have to make significant changes to all that code whenever one or more of these websites changes its design.
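To make that fragility concrete, here’s a minimal, stdlib-only sketch that mimics the class-based lookups of getArticle() with Python’s built-in html.parser instead of Beautiful Soup. The markup and the class names (`headline-v1`, `headline-v2`) are made up for illustration, and `find_text()` is a hypothetical helper: the point is simply that a selector tied to a class name silently stops matching as soon as a site renames that class.

```python
from html.parser import HTMLParser


class ClassTextFinder(HTMLParser):
    """Collect the text of the first <tag> element carrying the given class."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.inside = False  # currently inside the matching element
        self.done = False    # matching element has been closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag != self.tag:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.inside = True

    def handle_endtag(self, tag):
        if self.inside and tag == self.tag:
            self.inside = False
            self.done = True

    def handle_data(self, data):
        if self.inside:
            self.chunks.append(data)


def find_text(html, tag, css_class):
    """Return the stripped text of the first match, or None if nothing matched."""
    finder = ClassTextFinder(tag, css_class)
    finder.feed(html)
    finder.close()
    return "".join(finder.chunks).strip() if finder.done else None


# The same (hypothetical) headline before and after a redesign
before = '<h1 class="headline-v1">Lolita review</h1>'
after = '<h1 class="headline-v2">Lolita review</h1>'

print(find_text(before, "h1", "headline-v1"))  # Lolita review
print(find_text(after, "h1", "headline-v1"))   # None: the selector no longer matches
```

Beautiful Soup’s find() behaves the same way and returns None, which is why the .text call in getArticle() would then fail with an AttributeError.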

One alternative to all this mess would be to combine a bunch of different APIs for whatever type of content we want to extract. There are APIs for pretty much everything these days, including news articles (News API), job postings (Google’s Job Search), and so on.

An arguably better and more straightforward approach, though, is to use a much simpler and more user-friendly library like Trafilatura.

Make my life easier please, Trafilatura

So, what is Trafilatura you might wonder? According to its official website, this Python library was created in 2021 as a:

"[..] command-line tool designed to gather text on the Web. It includes discovery, extraction and text processing components. Its main applications are web crawling, downloads, scraping, and extraction of main texts, metadata and comments."

Sounds exactly like what we need, right?

When I wrote earlier that this library was simple to use, I really meant it. To extract the main content as well as some metadata from a simple webpage, all we need to do is pass a URL to Trafilatura’s fetch_url() function and then hand the downloaded page to the extract() wrapper:

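```python
from pprint import pprint

from trafilatura import extract, fetch_url

article = "https://www.independent.co.uk/independentpremium/culture/book-of-a-lifetime-lolita-by-vladimir-nabokov-b2407896.html"

# fetch_url() downloads the page (it returns None if the download fails)
downloaded = fetch_url(article)

# extract() pulls the main text out of the downloaded HTML
result = extract(downloaded)
pprint(result)
```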

By default, extract() returns plain text, but you’ll probably want Trafilatura to return a JSON or XML object instead, and it’s entirely up to you to choose whichever format you prefer through the output_format parameter. The JSON output, for instance, always contains a fixed set of keys, which may or may not hold values depending on whether Trafilatura was able to extract that information from the targeted webpage.
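Because the JSON output always exposes the same keys, a cheap sanity check is to list the ones that came back empty. The sketch below assumes the JSON string returned by `extract(downloaded, output_format="json")`; the sample string is made up and only contains a handful of keys, and `missing_fields()` is a hypothetical helper.

```python
import json


def missing_fields(json_string, wanted=("title", "author", "date", "text")):
    """Return the wanted keys whose values are absent, null or empty."""
    data = json.loads(json_string)
    return [key for key in wanted if not data.get(key)]


# Made-up sample shaped like Trafilatura's JSON output (not real data)
sample = '{"title": "Example title", "author": null, "date": "2024-01-01", "text": "..."}'
print(missing_fields(sample))  # ['author']
```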

What’s really neat is that the extract() function accepts a ton of parameters, and I strongly suggest reading through the list of supported arguments if you want to know more. My default setup usually looks like this:

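```python
import json
from pprint import pprint

from trafilatura import extract, fetch_url


def getContent(url):
    downloaded = fetch_url(url)
    if downloaded is None:
        raise ValueError(f"Could not download {url}")

    result = extract(
        downloaded,
        include_formatting=True,  # keep formatting elements (mostly useful with XML output)
        output_format="json",     # return a JSON string instead of plain text
        include_links=True,       # keep links along with their targets
        include_comments=True,    # also extract the comments section, if any
    )
    if result is None:
        raise ValueError(f"No content could be extracted from {url}")

    # Turn the JSON string into a Python dictionary
    return json.loads(result)
```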

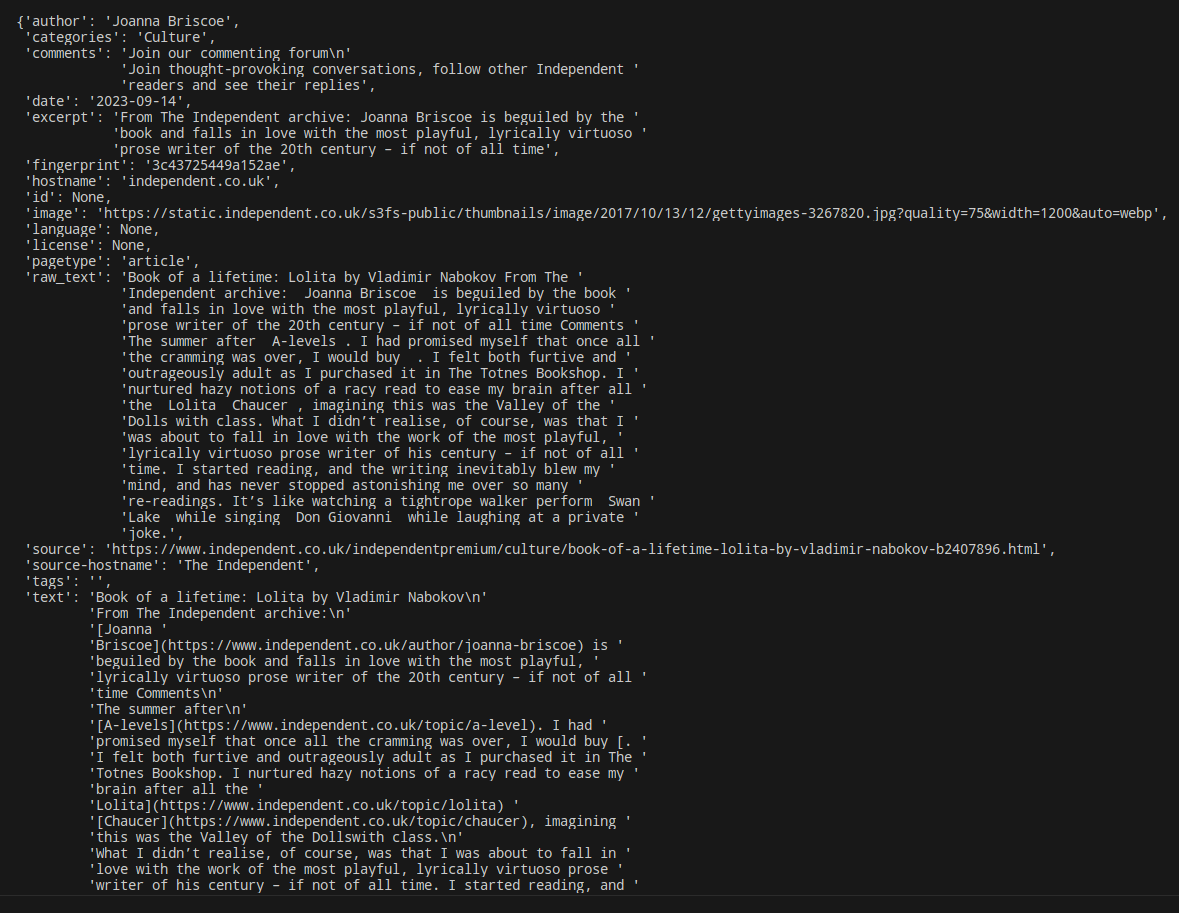

```python
scraped = getContent("https://www.independent.co.uk/independentpremium/culture/book-of-a-lifetime-lolita-by-vladimir-nabokov-b2407896.html")
pprint(scraped)
```

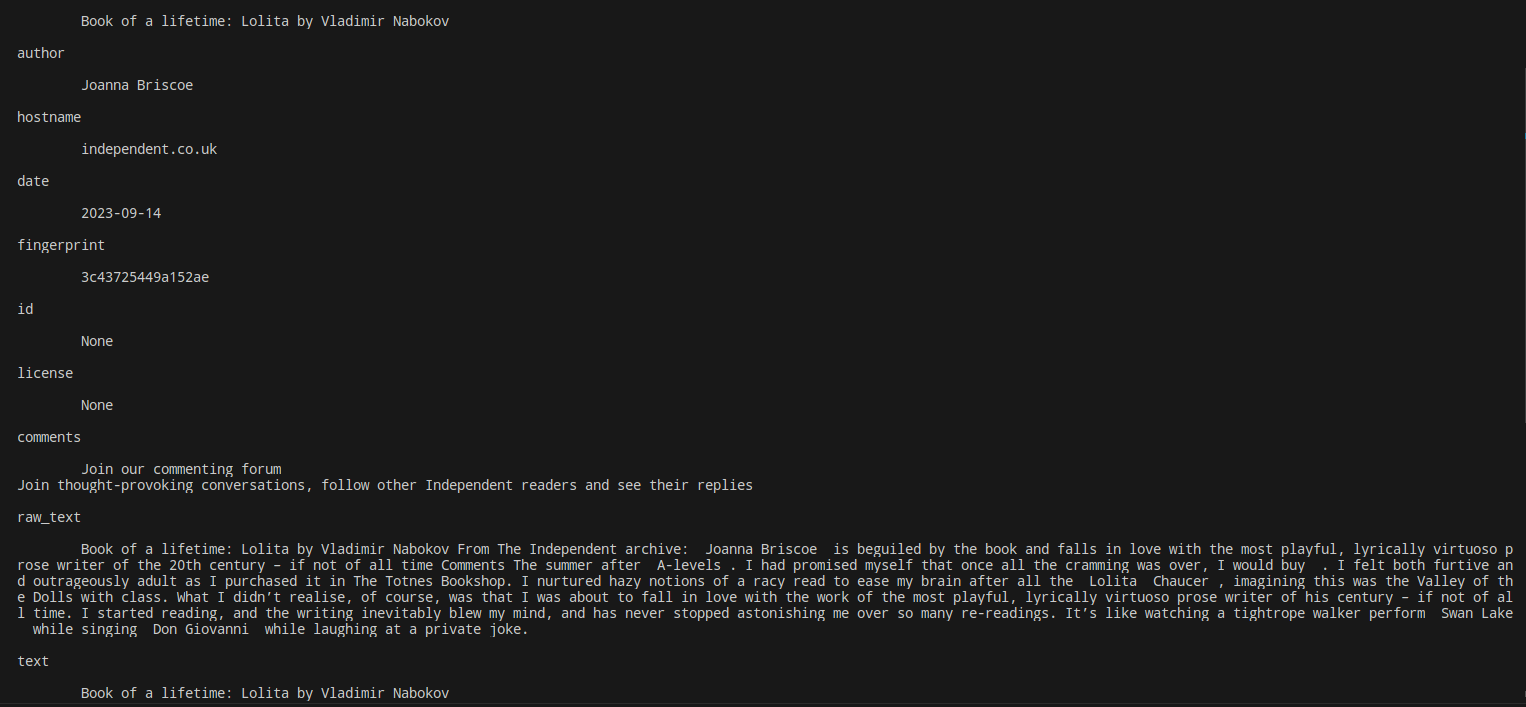

As you can see, we now have a dictionary of key/value pairs, which is arguably easier to read if we print it this way:

```python
for k, v in scraped.items():
    print(f"{k}\n\n\t{v}\n")
```
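Long fields such as raw_text can flood the terminal, though. One option, sketched below with the standard library’s textwrap.shorten(), is to print a shortened preview of each value instead; `preview()` is a hypothetical helper and `sample` is a made-up stand-in for the dictionary returned by getContent().

```python
import textwrap


def preview(result, width=70):
    """Return (key, value) pairs with whitespace collapsed and long values cut to width."""
    return [
        (key, textwrap.shorten(str(value), width=width, placeholder=" [...]"))
        for key, value in result.items()
    ]


# Made-up stand-in for the dictionary returned by getContent()
sample = {
    "title": "Example title",
    "author": None,
    "raw_text": "A very long body of text " * 20,
}

for key, text in preview(sample):
    print(f"{key}\n\n\t{text}\n")
```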

Oh that article looks interesting

I was finishing the final year of my MA at the University of Bordeaux back in the late 2000s when I first got interested in computational linguistics, as it used to be called back then. Like most of my classmates, I found myself having to collect and process tons of online papers and articles for my dissertation, and quickly felt overwhelmed by the amount of work it took to keep a record of all these materials. I didn’t really know about programming back then (not that I would consider myself much more knowledgeable today), but I clearly remember thinking that my life would have been much easier with a simple tool to process and archive online resources.

Say that we have a personal interest in a given topic, or that we’re looking for a specific job title and want to understand the job market landscape. While doing some research on the internet, we’re probably going to come across tons of articles from multiple sources. I’d say most people (including myself) will either create a dedicated folder in their favourite browser and bookmark these URLs, or save the links in some note-taking app.

But what if we instead created a simple command-line interface that saves each webpage we want to read into a SQL database or a JSON file? That sounds fairly easy to implement: all we need is a short Python script and a few lines of Bash to glue the whole thing together.

We’ll need the sys module from the standard library in a couple of minutes, so we might as well import it now:

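```python
import json
import sys

from trafilatura import extract, fetch_url


def getContent(url):
    downloaded = fetch_url(url)
    if downloaded is None:
        raise ValueError(f"Could not download {url}")

    result = extract(
        downloaded,
        include_formatting=True,
        output_format="json",
        include_links=True,
        include_comments=True,
    )
    if result is None:
        raise ValueError(f"No content could be extracted from {url}")

    return json.loads(result)
```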

We can then create a JSON file, using a function that we’ll only call the very first time we run this script:

```python
def createJSON(file_name):
    # Start with an empty list of articles
    struct = {
        "articles": []
    }
    with open(file_name, "w") as json_file:
        json.dump(struct, json_file)
```
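If you’d rather not have to remember to call createJSON() only on the very first run, one option is to create the file lazily. Here’s a minimal sketch with a hypothetical `ensure_json()` helper that writes the empty structure only when the file doesn’t exist yet:

```python
import json
import tempfile
from pathlib import Path


def ensure_json(file_name):
    """Create file_name with an empty "articles" list unless it already exists."""
    path = Path(file_name)
    if not path.exists():
        path.write_text(json.dumps({"articles": []}))
    return path


# Demo in a throwaway directory, so no real articles.json is touched
demo_file = ensure_json(Path(tempfile.mkdtemp()) / "articles.json")
print(demo_file.read_text())  # {"articles": []}
```

Calling ensure_json("articles.json") in the main block, right before updating the file, would then make the commented-out createJSON() call unnecessary.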

At this point the JSON file only holds an empty "articles" list, but the function below will append each new article to it:

```python
def updateJSON(file_name, article):
    # Only keep the fields we care about
    kept = ["author", "title", "date", "excerpt", "source", "raw_text"]
    temp_struct = {k: v for k, v in article.items() if k in kept}

    with open(file_name, "r+") as json_file:
        json_updated = json.load(json_file)
        json_updated["articles"].append(temp_struct)
        # Go back to the start of the file and overwrite it with the updated content
        json_file.seek(0)
        json.dump(json_updated, json_file, indent=4)
        json_file.truncate()
```
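The idea was to save each webpage into either a SQL database or a JSON file. If you’d rather go the SQL route, here’s a minimal sketch of the same append step using Python’s built-in sqlite3 module. The table name `articles` and the `save_article()` helper are hypothetical choices of mine; the sketch keeps the same fields as updateJSON().

```python
import sqlite3

KEPT = ("author", "title", "date", "excerpt", "source", "raw_text")


def save_article(conn, article):
    """Insert the kept fields of one article; missing fields are stored as NULL."""
    columns = ", ".join(KEPT)  # fixed column names, so the f-strings below are safe
    conn.execute(f"CREATE TABLE IF NOT EXISTS articles ({columns})")
    placeholders = ", ".join("?" for _ in KEPT)
    conn.execute(
        f"INSERT INTO articles ({columns}) VALUES ({placeholders})",
        [article.get(key) for key in KEPT],  # values are passed as parameters
    )
    conn.commit()


# In-memory database for the demo; pass a file name such as "articles.db" to persist
conn = sqlite3.connect(":memory:")
save_article(conn, {"title": "Example title", "author": None, "source": "https://example.com"})
print(conn.execute("SELECT title, source FROM articles").fetchall())
# [('Example title', 'https://example.com')]
```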

Finally, let’s bundle everything together, using sys.argv[1] so that Trafilatura fetches whatever URL we pass on the command line:

```python
if __name__ == "__main__":
    # createJSON("articles.json")  # we only need this when we run the script for the first time
    try:
        new_article = getContent(sys.argv[1])
        updateJSON("articles.json", new_article)
    except Exception as e:
        print(e)
```
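One thing to watch out for: if the script is run without a URL, sys.argv[1] raises an IndexError, which the broad except above simply prints as "list index out of range". A friendlier entry point could rely on the standard library’s argparse, as in the sketch below; `parse_args()` and the `--file` option are hypothetical additions, and getContent()/updateJSON() are only referenced in comments so the snippet runs on its own.

```python
import argparse


def parse_args(argv=None):
    """Parse the command line; argparse prints a usage error if the URL is missing."""
    parser = argparse.ArgumentParser(description="Scrape a webpage into a JSON file")
    parser.add_argument("url", help="URL of the webpage to scrape")
    parser.add_argument("--file", default="articles.json", help="JSON file to append to")
    return parser.parse_args(argv)  # argv=None means sys.argv[1:]


# Demo with an explicit argument list instead of the real command line
args = parse_args(["https://example.com"])
print(args.url, args.file)  # https://example.com articles.json

# In main.py, the entry point could then become:
# if __name__ == "__main__":
#     args = parse_args()
#     updateJSON(args.file, getContent(args.url))
```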

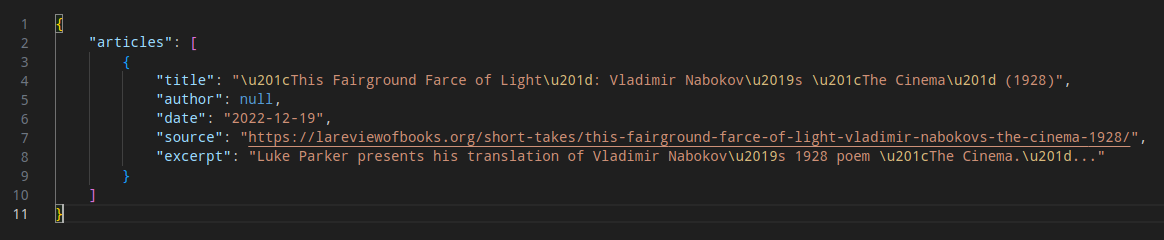

Here’s what happens when we type in python main.py http://example.com and replace the example.com url with a webpage we want to scrape:

All that’s left to do now is create a .sh file:

```bash
nano script.sh
```

And populate it with the simplest Bash script ever written in the history of programming:

```bash
#!/bin/bash

cd /home/path_where_your_venv_folder_is_located
source bin/activate

read -p "Enter a url: " article_url
python main.py "$article_url"

deactivate
```

Of course, let’s grant this file the permissions it’ll need to run:

```bash
chmod 755 script.sh
```
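If the octal 755 looks cryptic, Python’s stat module can translate it into the familiar ls -l notation: the owner can read, write and execute the script, while everyone else can only read and execute it.

```python
import stat

# stat.S_IFREG flags a regular file; 0o755 is the octal mode passed to chmod
mode = stat.filemode(stat.S_IFREG | 0o755)
print(mode)  # -rwxr-xr-x
```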

And we’re good to go! When we type ./script.sh in our terminal, we’re now prompted to enter a URL:

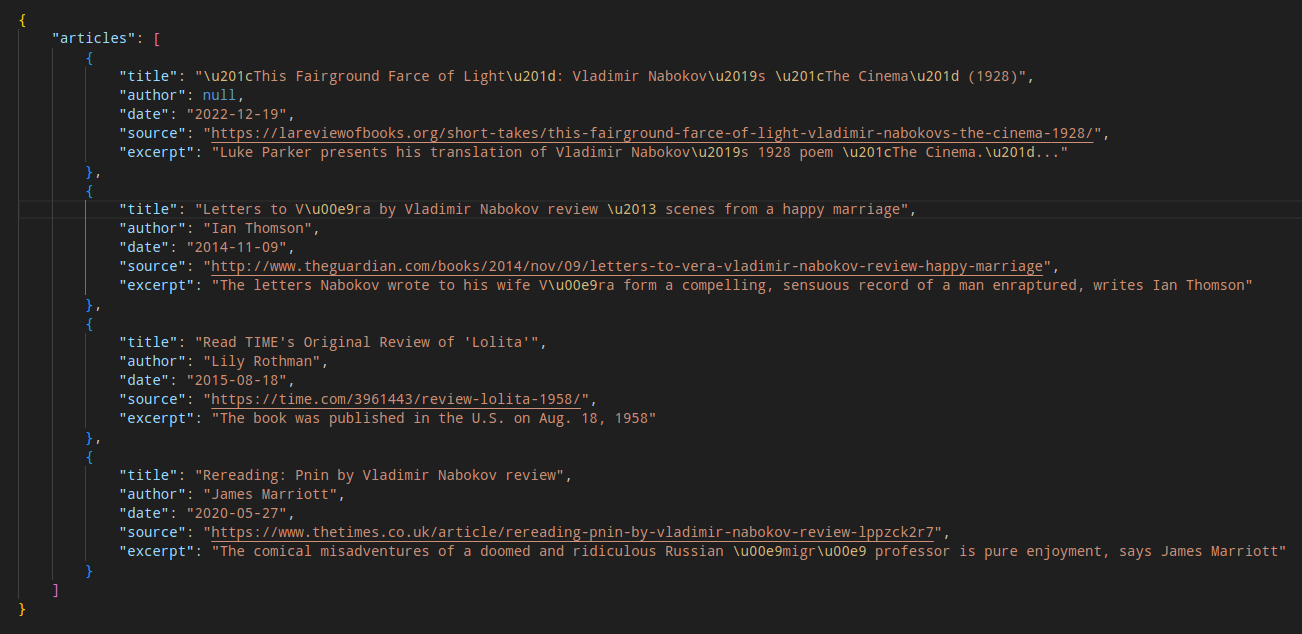

The Python script then runs, meaning that our article has been scraped and added to the JSON file! We can confirm this by opening the file:

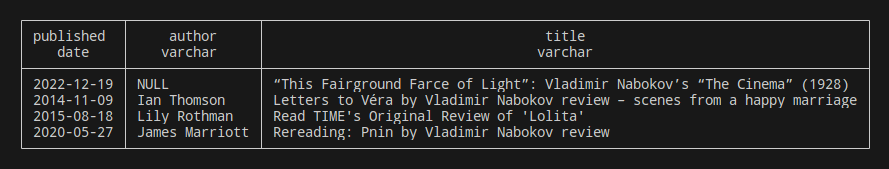

That doesn’t look too great though, so at this point we could use a database management system like DuckDB to convert this JSON file into a tabular format. If you’re not familiar with DuckDB, I highly recommend checking out their comprehensive documentation.

All we’re really trying to do here is turn our JSON file into a simple SQL table that we can query. It took me a while to figure out how to work with the nested format of our JSON file, until I found this great article which pointed me in the right direction:

```python
import duckdb

query = duckdb.sql("""
    WITH t1 AS (
        -- UNNEST turns the "articles" list into one row per article
        SELECT UNNEST(articles) AS article
        FROM read_json_auto('articles.json')
    )
    SELECT
        article['date'] AS published
        , article['author'] AS author
        , article['title'] AS title
    FROM t1
""")

print(query)
```

That’s much better!
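If the UNNEST step in that query feels a bit magical: read_json_auto() loads our file as a single row whose articles column holds the whole list, and UNNEST spreads that list out into one row per article. Conceptually, it’s the plain-Python equivalent sketched below; the sample data is made up and simply mirrors the structure that updateJSON() writes.

```python
import json

# Made-up content with the same shape as articles.json
raw = json.dumps({
    "articles": [
        {"date": "2024-01-01", "author": "A. Writer", "title": "First review"},
        {"date": "2024-02-01", "author": None, "title": "Second review"},
    ]
})

data = json.loads(raw)
# One output row per element of the "articles" list, like UNNEST(articles)
rows = [(a.get("date"), a.get("author"), a.get("title")) for a in data["articles"]]
for row in rows:
    print(row)
```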

Last but not least, Trafilatura’s official website has a page named “Notable projects using this software”, where you’ll find some very interesting work by third-party contributors that I’m sure will inspire you far more than what we’ve just built.

See you in a few weeks for a new article!