Topic Modelling - Part 1

What follows is the first in a series of articles dedicated to topic modelling. Please note that this article contains snippets of text and code from my dissertation:

“Information extraction of short text snippets using Topic Modelling algorithms.”

While the upcoming articles will focus on how to extract meaningful topics from unstructured data and on frameworks for evaluating the results, this article is mainly centred on the theoretical side.

Text, text everywhere!

As of 2017, there were approximately 3.7 billion active internet users [1]. Every day, some of them log into a social platform such as Twitter or Facebook to contact a company whose service they use. They expect that company to understand and process their request straight away, solve their issue, or give them an appropriate response.

According to Facebook, 6 in 10 local businesses say that “having an online presence is important for their long-term success” [2], while Twitter currently has 396.5 million users, 326 million of whom are active on a daily basis [3].

This ever-growing body of text has increased the need for more efficient ways of extracting information from it. To be more reactive and to help their leads make better decisions, data analysts must find ways to process that data quickly and efficiently.

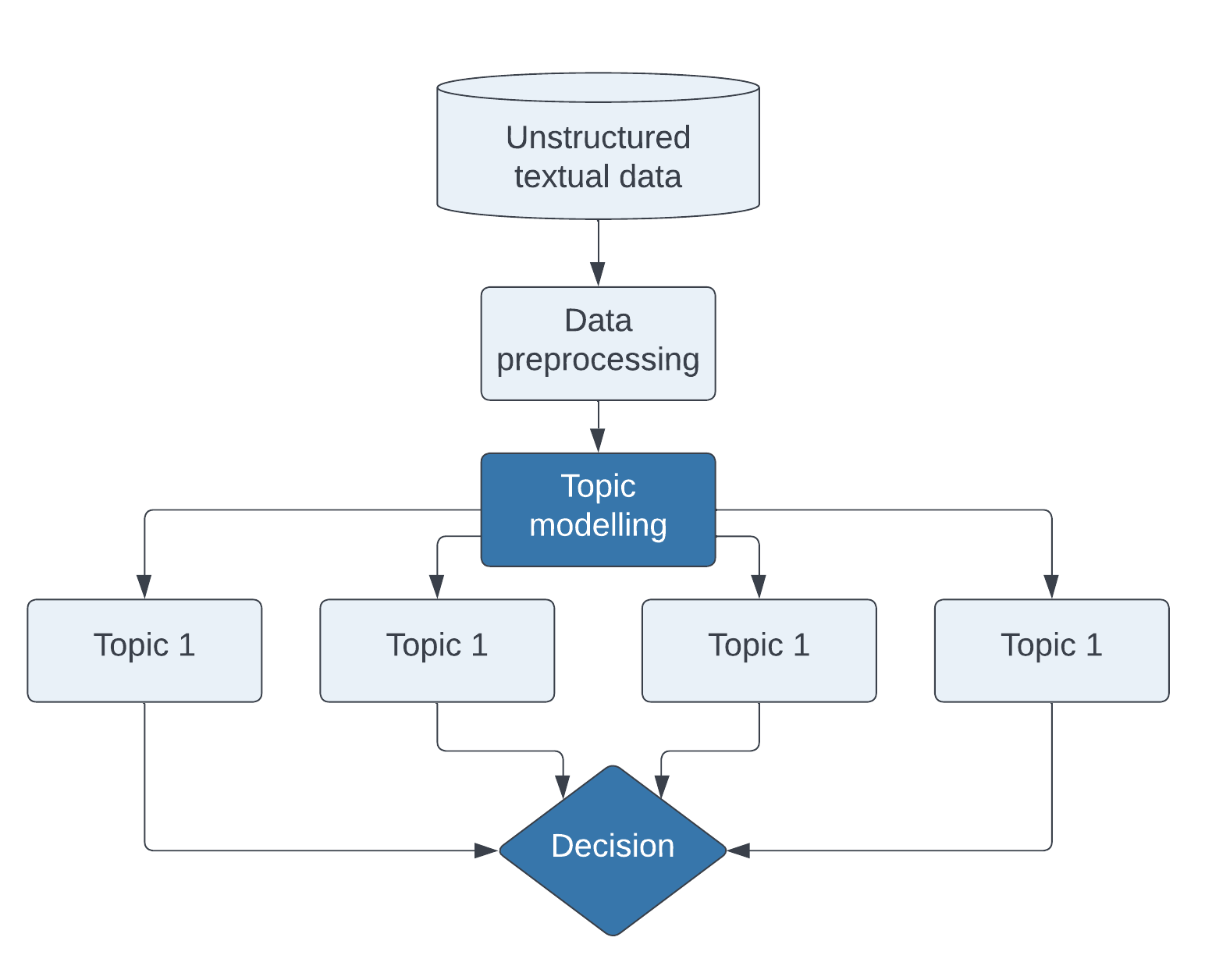

To be insightful and actionable, this information has to come in the form of buckets, or topics, that help analysts understand and prioritise it.

Why use dedicated topic modelling algorithms?

Several approaches have been tried in the past. These include hand-crafted rule-based systems and statistical techniques, which reduced the need for hand-written rules but still depend heavily on large, human-labelled text corpora. Other generic approaches, such as clustering models, can achieve great results on numeric data but may not be well suited to extracting accurate insights from text.

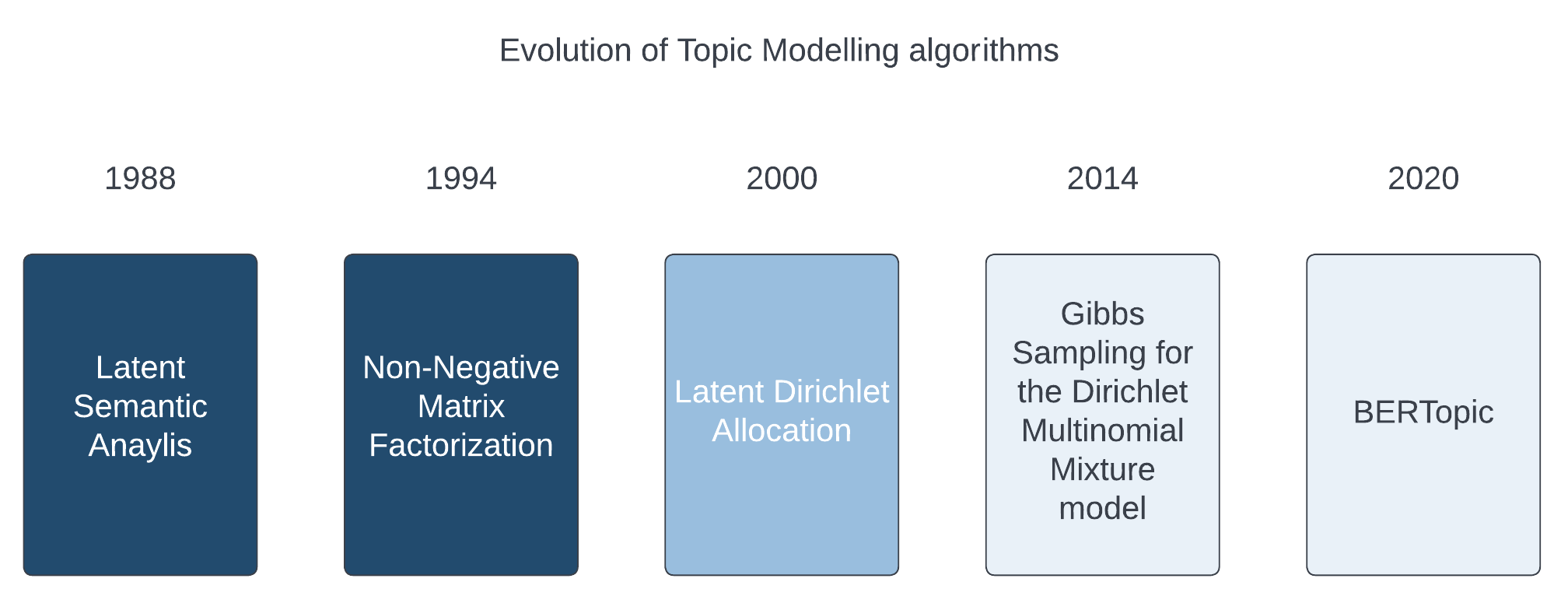

In fact, correctly identifying the relevant information contained in digital corpora remains a major challenge for linguists and computers alike. Over the past few years, however, a series of algorithms designed specifically for topic modelling have emerged. Each claims to solve the problems faced by traditional models and to improve the quality of the extracted topics.

Data analysts can now process an unstructured text corpus with a wide array of unsupervised or text-specific machine learning models. As of July 2021, the most popular topic modelling algorithms are:

- Latent Dirichlet Allocation (LDA)

- Latent Semantic Analysis (LSA)

- Non-Negative Matrix Factorization (NMF)

- Gibbs Sampling Algorithm for Dirichlet Multinomial Mixture (GSDMM)

- BERTopic, which combines transformer embeddings with clustering and a class-based variant of TF-IDF.
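As a rough preview of what a topic model produces, the sketch below fits the first algorithm in the list, Latent Dirichlet Allocation (LDA), to a tiny corpus using collapsed Gibbs sampling. Collapsed Gibbs sampling is a standard inference method for LDA, and it should not be confused with GSDMM, which applies Gibbs sampling to a different model. This is a minimal plain-Python illustration and is not code from the dissertation. The function names `lda_gibbs` and `top_words`, the hyperparameters (`alpha=0.1`, `beta=0.01`, `n_iter=200`) and the four toy "customer messages" are all hypothetical choices made for this example. In practice, an optimised library such as gensim or scikit-learn would normally be used instead.

```python
import random


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Fit LDA with collapsed Gibbs sampling (illustrative sketch only).

    docs: list of documents, each a list of word tokens.
    Returns (vocab, nkw, ndk): the vocabulary, per-topic word counts
    and per-document topic counts after the final sweep.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)

    ndk = [[0] * n_topics for _ in docs]      # topic counts per document
    nkw = [[0] * V for _ in range(n_topics)]  # word counts per topic
    nk = [0] * n_topics                       # total tokens per topic

    # Randomly assign an initial topic to every token.
    z = []
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_d.append(k)
            ndk[d][k] += 1
            nkw[k][word_id[w]] += 1
            nk[k] += 1
        z.append(z_d)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Remove the current token from all counts.
                ndk[d][k] -= 1
                nkw[k][v] -= 1
                nk[k] -= 1
                # Full conditional p(z_i = t | other assignments),
                # up to a normalising constant.
                weights = [
                    (ndk[d][t] + alpha) * (nkw[t][v] + beta) / (nk[t] + V * beta)
                    for t in range(n_topics)
                ]
                k = rng.choices(range(n_topics), weights=weights)[0]
                # Add the token back under its newly sampled topic.
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][v] += 1
                nk[k] += 1
    return vocab, nkw, ndk


def top_words(vocab, nkw, n=3):
    """Return the n most frequent words of each topic."""
    return [
        [vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]]
        for row in nkw
    ]


# Hypothetical toy corpus: two delivery complaints and two app complaints.
docs = [
    "refund order late delivery refund".split(),
    "delivery late order refund".split(),
    "app crash login error app".split(),
    "login error app crash".split(),
]
vocab, nkw, ndk = lda_gibbs(docs, n_topics=2)
for k, words in enumerate(top_words(vocab, nkw)):
    print(f"topic {k}: {words}")
```

The model represents each topic as a set of word counts (`nkw`) and each document as a mixture of topic counts (`ndk`). With such a small and clearly separated corpus, the delivery-related and app-related words would be expected to land in different topics. The exact output, however, depends on the random seed.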

We’ll see next week how these algorithms work and how we can use them to extract meaningful information from unstructured data.