Introduction to TF-IDF

Before reading the following article, you should probably take a look at my introduction to Topic Modelling. Please note that this blog post contains snippets of text and code from my dissertation thesis:

“Information extraction of short text snippets using Topic Modelling algorithms.”

While the next articles will likely be more focused on how to extract meaningful topics from unstructured data as well as on some frameworks that can be used to evaluate our results, this article will be mainly focused on the theoretical aspect.

The theory around TF-IDF

In Topic Modelling, Term frequency-Inverse Document Frequency (or TF-IDF) addresses the issue of some words being attributed a higher importance than others. Being able to determine the importance of a given word in comparison to other words within a whole document is key, and this is why TF-IDF is so often used for extracting relevant information from a corpus.

The logic behind TF-IDF is pretty straightforward: the frequency of a word corresponds to the number of times that this word appears in a document. If multiple documents are processed, and a word that appears in several of those documents, then its term frequency can be different from one document to another (Zhang, Yoshida and Tang, 2011).

Practical example

| Corpus | Text |

|---|---|

| Document 1 | “Linguistics is fun thing to learn, and so is programming. ” |

| Document 2 | “Programming is great, and I especially like writing about it.” |

| Document 3 | “Writing about linguistics is great, but I really prefer programming.” |

The term frequencies for the words “Dublin” and “students” can be easily calculated:

| Term | Frequency |

|---|---|

| tf(“Linguistics”) | 1 / 10 for Document 1 |

| tf(“Linguistics”) | 0 / 10 for Document 2 |

| tf(“Linguistics”) | 1 / 10 for Document 3 |

| tf(“Programming”) | 1 / 10 for Document 1 |

| tf(“Programming”) | 1 / 10 for Document 2 |

| tf(“Programming”) | 1 / 10 for Document 3 |

| tf(“Writing”) | 0 / 10 for Document 1 |

| tf(“Writing”) | 1 / 10 for Document 2 |

| tf(“Writing”) | 1 / 10 for Document 3 |

The table below is a simplified example, but TF-IDF would use term frequency to calculate numeric vectors associated with a set of documents as follows:

| Word | Document 1 | Document 2 | Document 3 |

|---|---|---|---|

| Linguistics | 10 | 0 | 10 |

| Programming | 10 | 10 | 10 |

| Writing | 0 | 10 | 10 |

Normalising the column vectors in the preceding table would then result in the following values:

| Word | Document 1 | Document 2 | Document 3 |

|---|---|---|---|

| Linguistics | .10 | .0 | .10 |

| Programming | .10 | .10 | .10 |

| Writing | .0 | .10 | .10 |

Meanwhile, using an asterisk symbol to represent the inner product of each pair of columns vectors, leads to the following final scores:

| Pair | Frequency | Score |

|---|---|---|

| Doc1 * Doc2 | (.10) * (.10) + (.10) * (.50) + 0 + 0 + 0 | 0.20 |

| Doc1 * Doc3 | (.10) * (.10) + (.10) * (.30) + (.50) * (.50) + 0 + 0 | 0.36 |

| Doc2 * Doc3 | (.0) * (.10) + (.10) * (.30) + 0 + 0 + 0 | 0.19 |

Hence, the documents doc1 and doc3 are most closely related, followed by the pair doc1 and doc2, and then the pair doc2 and doc3.

Why IDF (Inverse Document Frequency) is used is to attribute a lesser importance to words that are very common across a corpus (see Stopwords above) and attribute more importance to the rarer words, as all terms are given the same weight when Term Frequency is calculated (Aizawa, 2003).

Ultimately, the TF-IDF score is a product of these two terms: TF * IDF.

Practical use case

Now that we have a general idea as to how TF-IDF processes text documents, let’s see how we can process a simple corpus. You should first remove any stopwords from your corpus and lemmatize your document (don’t use a stemmer!), but none of these will be covered in this article.

- Lemmatizing is a pretty straightforward process anyway and NLTK’s built-in

WordNetLemmatizer()does wonders. - As for removing stopwords, you can find a lot of lists of words on GitHub in any language. This one will do the job, but I’m sure there’s better. I also like to remove abbreviations but that’s just a personal preference. If you want to follow my advice, then this list is quite thorough.

Our next step is to find some text, in this case the opening lines of Arthur Conan Doyle’s The Hound of the Barskervilles, which you can find on Gutenberg. I literally just picked the first couple of paragraph and assigned them to a variable named holmes.

Sherlock Holmes, who was usually very late in the mornings, save upon those not infrequent occasions when he was up all night, was seated at the breakfast table. I stood upon the hearth-rug and picked up the stick which our visitor had left behind him the night before. It was a fine, thick piece of wood, bulbous-headed, of the sort which is known as a “Penang lawyer.” Just under the head was a broad silver band nearly an inch across. “To James Mortimer, M.R.C.S., from his friends of the C.C.H.,” was engraved upon it, with the date “1884.” It was just such a stick as the old-fashioned family practitioner used to carry—dignified, solid, and reassuring. “Well, Watson, what do you make of it?” Holmes was sitting with his back to me, and I had given him no sign of my occupation. “How did you know what I was doing? I believe you have eyes in the back of your head.”

We can now import our modules, including the pprint library which outputs hierarchical structures such as matrices in a nicer way than the default print() method.

from nltk.tokenize import sent_tokenize

from sklearn.feature_extraction.text import TfidfVectorizer

import re

from pprint import pprint

The below function will help clean strings that were sourced from .txt files, replacing line jumps and double spaces with a single space. You’ll notice that we are using NLTK’s sent_tokenize() method. That’s because Scikit-Learn’s TfidfVectorizer() method will only accept lists of lists. In other words, if our text is:

“This is an article about TF-IDF. This article is on Julien’s blog.

Then the we want our getCorpus() function to return this:

[

["article about tf-idf"],

["article julien blog"]

]

As I’m sure you have already guessed from the list of lists above, we’re also removing punctuation signs, stopdwords (well, not this time, but ideally you should), and we’ve lowered all our characters.

def getCorpus(data):

corpus = data.replace("\n"," ")

corpus = corpus.replace(" ", " ")

corpus = sent_tokenize(corpus)

corpus = [c.lower() for c in corpus]

# corpus = [c for c in corpus if corpus not in stopwords]

corpus = [re.sub(r"[^\w\s]", "", c) for c in corpus]

return corpus

We’re finally ready to pass the list of lists that we have just created into our getVects() function. If you have used pretty much any of the Scikit-Learn models, then the fit() and transform() steps should have no secret for you.

def getVects(data):

vectorizer = TfidfVectorizer()

vectorizer.fit(data)

vects = vectorizer.transform(data)

return vects

corp = getCorpus(holmes)

vectors = getVects(corp)

Actually, TfidfVectorizer() takes several parameters that you can see here. I usually go with the following arguments:

vectorizer = TfidfVectorizer(

max_df=0.9,

min_df=0.1,

max_features=200000,

#stop_words=stopwords,

decode_error="ignore"

)

As you can see, we can add a limit to the number of features, we can pass our list of stopwords directly there (though this is slightly slower), while max_df and min_df will ignore terms that have a frequency higher or lower than the given threshold.

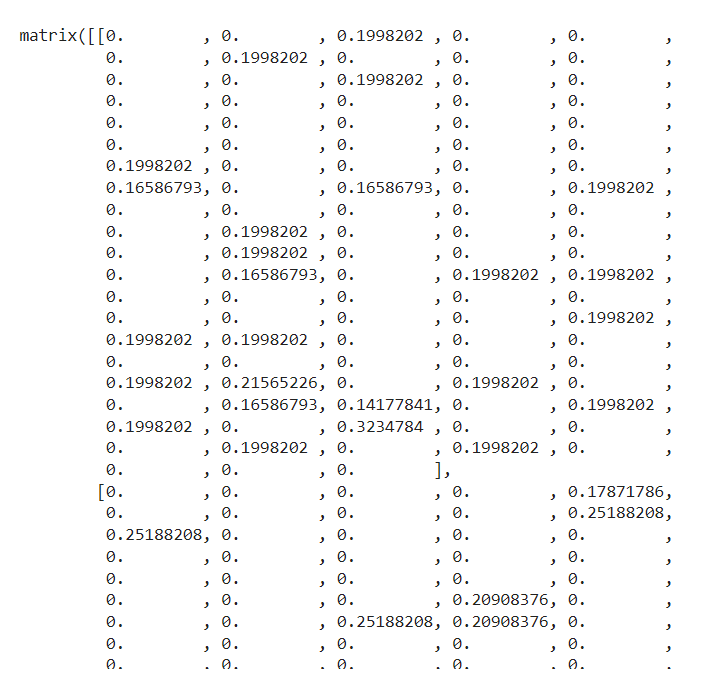

The result is a matrix object, where each sentence is a vector, and which you can view this way:

pprint(vectors.todense())

Final thoughts

Though the TF-IDF value can effectively output the frequency of individual terms, it is however much less effective to match a sentence across one or more documents. For sentences, a better solution is to use Word2vec. This algorithm provides word vectors that contain contextual information about each term, which is absent from TF-IDF values (Goldberg and Levy, 2014).