Using the Command Line to Extract Contextual Words From Textual Data

September 2008 was my first encounter with computational linguistics, as it used to be called back then. I was starting my second (and last) year of MA at the University of Bordeaux and as I began doing some research for my dissertation, I encountered a problem that I thought a computer would be able to help with.

What I was trying to do was to obtain, within a single text corpus, the n preceding and following words for any given word. My intuition was that there were some important words in the two novels I was studying that were more or less always accompanied by the same three or four words. To illustrate this, let’s consider the sentence below, taken from the final chapter of F. Scott Fitzgerald’s The Great Gatsby:

“So we beat on, boats against the current, borne back ceaselessly into the past.”

The two preceding and following words for “current” would be respectively ["against","the"] and ["borne","back"].
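To make the goal concrete, here is a minimal sketch of that lookup for a single sentence. The helper name context_window and the choice to strip punctuation with a regular expression are assumptions made for this illustration, not part of the final script.

```python
import re

def context_window(tokens, position, n=2):
    """Return the n tokens before and the n tokens after tokens[position]."""
    start = max(0, position - n)  # never let the slice start before the first token
    before = tokens[start:position]
    after = tokens[position + 1:position + 1 + n]
    return before, after

sentence = "So we beat on, boats against the current, borne back ceaselessly into the past."
# Keep runs of word characters only, so "current," becomes "current"
tokens = re.findall(r"\w+", sentence)
before, after = context_window(tokens, tokens.index("current"))
print(before, after)
```

Returning the two sides separately keeps the target word itself out of the result, which matches the two lists given for "current" above.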

I didn’t really know about programming back then (not that I would consider myself much more knowledgeable today), but I clearly remember thinking that this would be a fairly easy task to perform, and I of course immediately went and asked my teachers if they could help with this. To my surprise, they had no clue how to achieve this sort of magical thing, and I was promptly redirected to the Departement Informatique (aka the Computer Science department). It is there, in a small room on the Pessac campus, that I first heard about the book that would change my life forever:

I borrowed it from the University library, and to say that I immediately felt completely lost would be an understatement. I tried for several weeks to understand concepts which to me back then made little sense, and eventually gave up my idea of extracting contextual keywords from the two novels that I was reading. I managed to complete my MA, but this experience felt for a long time like a missed opportunity to grow and learn about the fundamentals of scripting. It opened my eyes as to the multitude of things that could be done using a programming language, and I promised myself that I would get back to it one day and finally understand at least some of the concepts that I had discovered reading this book.

Today my friends, we are going to finally build the context extractor script that I had failed to write more than 15 years ago.

Bye Larry, hello Guido

As much as I have learnt to love Larry Wall over the years, we unfortunately won’t be writing the following lines of code in Perl or Raku. These are both great languages, but as I have been writing most of my scripts in either Python or JavaScript over the past couple of years, we’ll be using Python this time again.

That being said, as the purpose here is to avoid using any non-standard library, the final script should be very easy to port onto pretty much any language that offers libraries similar to these ones:

    import os
    import sys
    import re
    from collections import Counter

Actually, we don’t really need Counter and re. They just make a few of the following steps easier.
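As a rough illustration of that point, here is a sketch of how word counts could be collected without Counter, using nothing but a dictionary. The function names count_words and most_common are made up for this example, and the sample tokens are invented.

```python
def count_words(tokens):
    """Count how often each token occurs, using only a plain dictionary."""
    counts = {}
    for token in tokens:
        counts[token] = counts.get(token, 0) + 1
    return counts

def most_common(counts, limit=10):
    """Return (token, count) pairs sorted by descending count."""
    return sorted(counts.items(), key=lambda pair: pair[1], reverse=True)[:limit]

sample = "the past the current the boats".split(" ")
print(most_common(count_words(sample), limit=2))
```

Most languages offer some form of hash map and a sort routine, so this version should port easily.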

Listing the text files to process

What we need to think about first is the type of content that our users will likely want to feed into our script. Back in 2008, I would have definitely used a .txt file, but you could easily tweak the following function to work with .epub or .docx documents if needed. More importantly, I would have wanted to place my Perl script in the exact same folder where I stored my .txt files, and to be able to see the content of that folder and pick the file I wanted to process.

To do so, we can simply use the os.listdir() function and loop through all the .txt files that are found within the folder where the script is stored (strictly speaking, os.listdir() with no argument lists the current working directory, which is the script’s folder when the script is run from there). Note that we could use enumerate() instead of incrementing the integer value stored in ind, but I’m also conscious of the fact that similar functions might not be supported across other languages.

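    def getFiles():
        ind = 0
        print(f"\nThe following file(s) were found:\n")
        # os.listdir() with no argument lists the current working directory
        for f in os.listdir():
            if f.endswith(".txt"):
                ind+=1
                # {[ind]} formats a one-item list, which prints as [1], [2], ...
                print(f"\t{[ind]} {f}")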

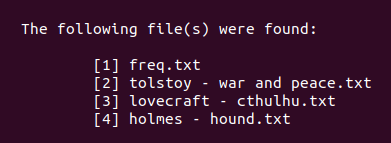

We should get something like this:

As you can see in the screenshot above, we currently have 3 files in .txt format than are just waiting to be processed by our script.
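For readers who prefer the enumerate() route mentioned earlier, a possible variant is sketched below. The name list_txt_files, the folder parameter, the alphabetical sorting and the returned list are all assumptions added for this example. The original function only prints.

```python
import os

def list_txt_files(folder="."):
    """Print and return the .txt files found in folder, numbered from 1."""
    txt_files = sorted(f for f in os.listdir(folder) if f.endswith(".txt"))
    # enumerate(..., start=1) replaces the manual counter
    for number, name in enumerate(txt_files, start=1):
        print(f"\t[{number}] {name}")
    return txt_files
```

Returning the list as well as printing it would make it possible to let users pick a file by number later on.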

Content extraction

We now know what files we can choose to work with, but what’s our next step? Well, what we need is simply to transform the content of the input .txt file into a list of tokens. There are two ways we can do that:

- We read the .txt file line by line, and append each line to a string named tokens. We then use re.sub() to first collapse extra line breaks and blank spaces, and then create a list of tokens by splitting tokens on blank spaces.

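    def getFromTxt(input_text):
        tokens = ""
        # Read the file line by line into one long string
        with open(input_text, "r") as file:
            for f in file:
                tokens += f
        # Collapse runs of line breaks, then runs of spaces, into single spaces
        tokens = re.sub("\n+"," ",tokens)
        tokens = re.sub(" +"," ",tokens)
        tokens = [t for t in tokens.split(" ")]
        return tokens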

- Or, we could loop through each line, splitting the new current line by blank spaces, clean up the tokens by removing anything which isn’t a word, and append the said tokens to a list.

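    def getFromTxt(input_text):
        tokens = []
        with open(input_text, "r") as file:
            for f in file:
                # split() with no argument also discards the trailing "\n",
                # so the last word of each line is kept and blank lines yield nothing
                for token in f.split():
                    # Remove anything that is neither a word character nor whitespace
                    tokens.append(re.sub(r"[^\w\s]","", token))
        return tokens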

Whichever approach we pick, we’ll end up with a list of tokens.
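If you want to sanity-check the tokenisation without creating a file, a small sketch along these lines might help. The tokenize name and the sample text are assumptions for illustration. It applies the same punctuation-stripping pattern as above to an in-memory string and relies on str.split() with no argument, which splits on any run of whitespace.

```python
import re

def tokenize(text):
    """Strip punctuation, then split on any run of whitespace."""
    without_punctuation = re.sub(r"[^\w\s]", "", text)
    return without_punctuation.split()

sample = "So we beat on,\nboats   against the current."
print(tokenize(sample))
```

Note that this pattern also removes apostrophes, so a contraction such as "didn't" ends up as "didnt".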

Contextual words

As discussed earlier in this article, the main component that this script should feature, is to allow its users to get the n number of preceding and following words for any given word.

We therefore need three arguments:

- text: the name of the .txt file to process.
- word: the word that the users want to extract contextual words for.
- howmany: by default set to 5, the n value of the preceding and following words.

Let’s start by calling the getFromTxt() we created a minute ago, pass the .txt file into it, and save its output into a list named tokens.

What we then need, is to loop through tokens, and save the indexing position for each instance of word that we find. Let’s consider the following sentence:

“Hey this is a great article and I really like this TexTract thing”

The position for the word “article” is 5, which means that to get the 2 preceding and following words within that sentence, we will simply need to retrieve the items at position [3,4] and [6,7]. We’re therefore saving the indexing position for each instance of word into a list called indexes. To make sure we’re capturing everything, we also need to .lower() both the tokens and the user’s input word.
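The index-collecting step could be sketched in isolation like this. The function name find_indexes is an assumption for the example. The sentence is the one used above.

```python
def find_indexes(tokens, word):
    """Return every position at which word occurs in tokens, ignoring case."""
    target = word.lower()
    return [position for position, token in enumerate(tokens) if token.lower() == target]

sentence = "Hey this is a great article and I really like this TexTract thing"
tokens = sentence.split(" ")
print(find_indexes(tokens, "article"))
print(find_indexes(tokens, "THIS"))
```

Lower-casing both sides means that "THIS", "This" and "this" are all treated as the same word.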

What we can do next, is count the number of items within this list, and ask the user whether or not they want to continue. If they choose “y”, we then loop through our list of indexes and match the positioning of each instance of word against the tokens list. If that’s not clear, let’s consider the following sentence again:

sentence = "Hey this is a great article and I really like this TexTract thing"

If we wanted to output the words “great” and “article”, we could:

- Pass the string into a list: sentence_list = [s for s in sentence.split(" ")]
- Use the enumerate() function to obtain both the indexing position and the words: sentence_indexes = [i for i,s in enumerate(sentence_list) if s == "great" or s == "article"]
- Print sentence_indexes, which will output [4,5].
- We then loop through this freshly created list of integers and use the values as indexes against the sentence_list list of words:

      for s in sentence_indexes:
          print(sentence_list[s])

- The loop above should return “great” and “article”.

This is pretty much what the function below does, except that we’re also using some f-strings to format the output, and that we won’t be returning any result if the word that we picked is either the first or the last token within the corpus.

    def getContext(text,word,howmany=5):
        i = howmany
        tokens = getFromTxt(text)
        # Positions of every case-insensitive match of word
        indexes = [pos for pos,w in enumerate(tokens) if w.lower() == word.lower()]
        print(f"\nFound {len(indexes)} instances for the word {word}")
        # The f prefix is needed for {word} to be filled in
        choice = input(f"\nDisplay the context tokens for the word {word} [y/n]?\n")
        if choice.lower() == "y":
            for header,context in enumerate(indexes):
                x = f"Entry {header+1} for the word {word}:"
                # The slice holds the i tokens before the match, the match itself
                # and the i-1 tokens after it
                y = f"\n\t{' '.join(tokens[context-i:context+i])}"
                # An empty slice leaves only "\n\t" in y
                if len(y) < 3:
                    print(x,"\n\t(Not enough contextual words)")
                else:
                    print(x,y)
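One thing worth knowing about the slice used above: a negative start index counts from the end of the list, so a match near the very beginning of the corpus tends to produce an empty slice, and the slice as written stops one token short on the right-hand side. The sketch below shows one possible way to clamp the window instead. The name symmetric_context is hypothetical and this is an alternative, not what the script does.

```python
def symmetric_context(tokens, position, howmany=5):
    """Return up to howmany tokens on each side of tokens[position], match included."""
    start = max(0, position - howmany)  # clamp so the start never goes negative
    stop = position + howmany + 1       # +1 because the stop index is exclusive
    return tokens[start:stop]           # slices tolerate a stop beyond the end

tokens = "Hey this is a great article and I really like this TexTract thing".split(" ")
print(tokens[-2:2])  # original-style slice for position 0 and howmany=2
print(" ".join(symmetric_context(tokens, 5, howmany=2)))
print(" ".join(symmetric_context(tokens, 0, howmany=2)))
print(" ".join(symmetric_context(tokens, 12, howmany=2)))
```

With this version, matches at the edges of the corpus still return whatever context exists on the other side.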

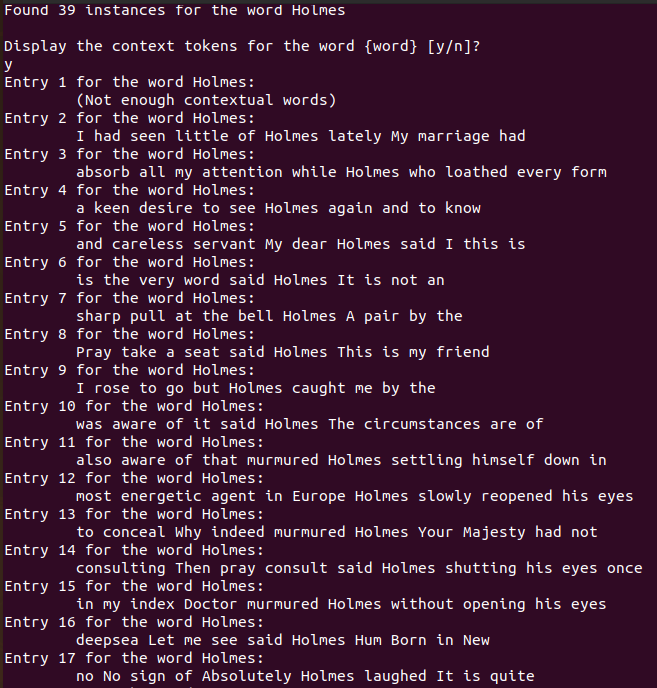

Using Sir Arthur Conan Doyle’s The Hound of the Baskervilles and the word “Holmes”, these are the first lines that our script should hopefully return:

Basic analytics

Another useful feature that we can implement, is some very high-level form of text analysis. We don’t need the program to output anything fancy, and back in 2008 I would have been more than happy to see the number of times the word I had picked was present within the corpus (both in the form of total volume and percentage), as well as some suggestions as to what other words within the corpus might be worth investigating.

To do so, we can just call our getFromTxt() function once again, pick a word, and:

- Count the number of instances of word within the corpus, using Counter().
- Use this number against the total length of the corpus to output a percentage.
- Use Counter() again to find the 10 most common keywords within the corpus. Ideally, we should remove stopwords before doing this (but we won’t).

    def getSummary(text,word):
        tokens = getFromTxt(text)
        total = f"\nCorpus length: {len(tokens)}"
        # Counts for every spelling of word that matches case-insensitively, most frequent first
        token = [v for k,v in Counter(tokens).most_common() if k.lower() == word.lower()]
        perc = round(token[0] / len(tokens) * 100,2)
        perc = f"\nRatio: {perc}%"
        token = f"\nNumber of iterations for the word '{word}': {token[0]}"
        # Size the dashed rulers according to the length of the line above
        token_len = (int(len(token)/2))
        menu = str("\n" + "-" * token_len + " SUMMARY " + "-" * token_len + "\n")
        menu2 = str("\n\n" + "-" * token_len + "TOP WORDS" + "-" * token_len + "\n\n")
        print(menu,total,token,perc,menu2,"\nOther words of interest:\n")
        # Keep words longer than 4 characters, excluding the word itself
        tokens = [t for t in tokens if len(t) > 4 and t.lower() != word.lower()]
        for k,v in Counter(tokens).most_common(10):
            print(f"{k:<17} > {v:>5}")
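Filtering on len(t) > 4 is a cheap stand-in for stopword removal. For anyone who wants to try the real thing, a rough sketch could look like the following. The STOPWORDS set is a deliberately tiny, hypothetical list and the top_words name is made up for this example. A serious list would be much longer and language-specific.

```python
from collections import Counter

# A deliberately tiny, hypothetical stopword list; a real one would be much longer
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "that", "which", "there"}

def top_words(tokens, limit=10, stopwords=STOPWORDS):
    """Return the most frequent tokens, lower-cased, with stopwords left out."""
    kept = [t.lower() for t in tokens if t and t.lower() not in stopwords]
    return Counter(kept).most_common(limit)

sample = "The hound and the moor and the hound of the Baskervilles".split(" ")
print(top_words(sample, limit=2))
```

Lower-casing before counting also merges "The" and "the", which the length filter alone does not do.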

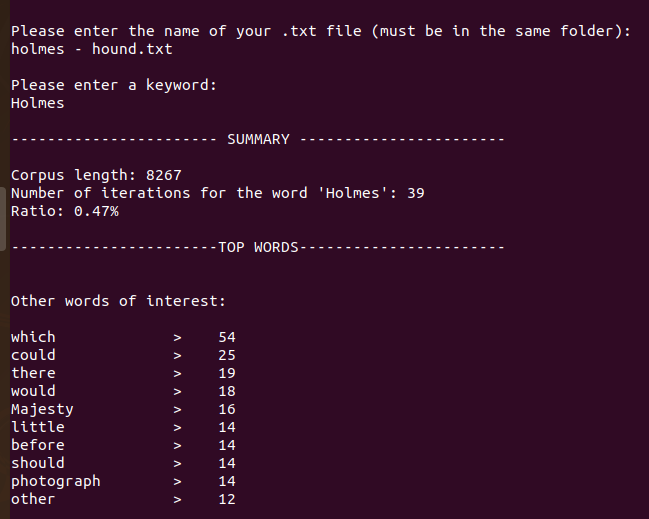

Let’s try to run this function with the word “Holmes” again:

We need a menu

We’re almost there!

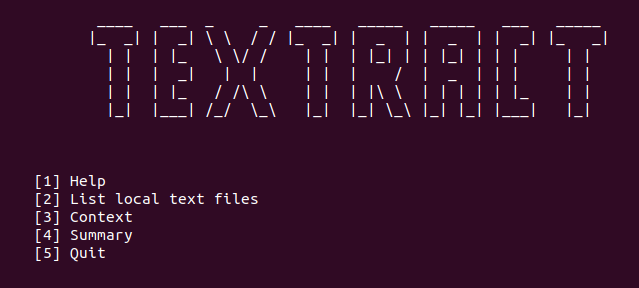

When running the TexTract.py script, our users will be met with a simple menu, through which they will be prompted to choose what they want to do next.

The getMenu() function doesn’t take any parameter, but simply uses a while loop to print a logo (yes, we have a logo!) and a menu. What happens next is quite self-explanatory, and if they choose option 3 (the extraction of contextual words) users will be asked to enter the name of a file in .txt format and to pick a word. If the file doesn’t exist, the script will print an error message and loop back to the main menu.

    def getMenu():
        main_menu = "\n\t[1] Help\n\t[2] List local text files\n\t[3] Context\n\t[4] Summary\n\t[5] Quit\n\n"
        # Keep showing the logo and the menu until the user picks option 5
        while True:
            print(getLogo())
            choice = int(input(main_menu))
            if choice == 1:
                getHelp()
            elif choice == 2:
                getFiles()
            elif choice == 3:
                input_text = input("\nPlease enter the name of your .txt file (must be in the same folder):\n")
                input_token = input("\nPlease enter a keyword:\n")
                try:
                    getContext(input_text,input_token)
                except:
                    print(f"\nSorry, there doesn't seem to be a file with the name {input_text}")
            elif choice ==4:
                input_text = input("\nPlease enter the name of your .txt file (must be in the same folder):\n")
                input_token = input("\nPlease enter a keyword:\n")
                try:
                    getSummary(input_text,input_token)
                except:
                    print(f"\nSorry, there doesn't seem to be a file with the name {input_text}\n")
            elif choice ==5:
                print("\nThe application will now quit, thank you.\n")
                break
            else:
                print("Wrong input")
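One caveat: int(input(...)) raises a ValueError when the user types something that isn’t a number, which would stop the loop above with a traceback. A possible guard is sketched below. The read_choice helper and its ask parameter are hypothetical additions (ask only exists so that the function can be exercised without a keyboard), and the script itself doesn’t include them.

```python
def read_choice(prompt, valid_choices, ask=input):
    """Keep asking until the answer is an integer found in valid_choices."""
    while True:
        try:
            choice = int(ask(prompt))
        except ValueError:
            choice = None  # not a number at all
        if choice in valid_choices:
            return choice
        print("Wrong input")
```

Inside the menu loop, the call might then read choice = read_choice(main_menu, range(1, 6)).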

If we call the getMenu() function, the text below should appear on the screen:

… And a logo!

As you have probably noticed from the screenshot above, the first line of code inside the while loop calls a function named getLogo(). This is just a simple string, and it was actually pretty fun to write.

    def getLogo():
        # Raw string, so the backslashes in the logo are not read as escape sequences
        logo = (r"""
    ____ ___ _ _ ____ _____ _____ ___ _____
    |_ _| | _| \ \ / / |_ _| | _ | | _ | | _| |_ _|
    | | | |_ \ \/ / | | | |_| | | |_| | | | | |
    | | | _| | | | | | / | _ | | | | |
    | | | |_ / /\ \ | | | |\ \ | | | | | |_ | |
    |_| |___| /_/ \_\ |_| |_| \_\ |_| |_| |___| |_|
    """)
        return logo

Putting everything together

Well, all we have to do now is place all the functions we just wrote inside a class named texTract, making sure we add self as a parameter to all our functions, and self. when calling functions within other functions.

Before saving our work as a .py file, we need to add a last few lines to our script before we can run it:

    if __name__ == "__main__":
        textract = texTract()
        textract.getMenu()

You can find the final version of the code below. I thoroughly enjoyed writing this article, and I hope you found it entertaining too!

    import os
    import sys
    import re
    from collections import Counter

    class texTract:

        def getHelp(self):
            print("Not available yet.")

        def getFromString(self,input_text):
            # Tokenise a raw string instead of a file (not called from the menu)
            tokens = [re.sub(r"[^\w\s]","", t) for t in input_text.split(" ") if t != "\n"]
            tokens = [t for t in tokens if len(t) > 0]
            return tokens

        def getFromTxt(self,input_text):
            tokens = []
            with open(input_text, "r") as file:
                for f in file:
                    # split() with no argument also discards the trailing "\n",
                    # so the last word of each line is kept and blank lines yield nothing
                    for token in f.split():
                        tokens.append(re.sub(r"[^\w\s]","", token))
            return tokens

        def getFiles(self):
            ind = 0
            print(f"\nThe following file(s) were found:\n")
            # os.listdir() with no argument lists the current working directory
            for f in os.listdir():
                if f.endswith(".txt"):
                    ind+=1
                    print(f"\t{[ind]} {f}")

        def getContext(self,text,word,howmany=5):
            i = howmany
            tokens = self.getFromTxt(text)
            # Positions of every case-insensitive match of word
            indexes = [pos for pos,w in enumerate(tokens) if w.lower() == word.lower()]
            print(f"\nFound {len(indexes)} instances for the word {word}")
            # The f prefix is needed for {word} to be filled in
            choice = input(f"\nDisplay the context tokens for the word {word} [y/n]?\n")
            if choice.lower() == "y":
                for header,context in enumerate(indexes):
                    x = f"Entry {header+1} for the word {word}:"
                    y = f"\n\t{' '.join(tokens[context-i:context+i])}"
                    # An empty slice leaves only "\n\t" in y
                    if len(y) < 3:
                        print(x,"\n\t(Not enough contextual words)")
                    else:
                        print(x,y)

        def getSummary(self,text,word):
            tokens = self.getFromTxt(text)
            total = f"\nCorpus length: {len(tokens)}"
            token = [v for k,v in Counter(tokens).most_common() if k.lower() == word.lower()]
            perc = round(token[0] / len(tokens) * 100,2)
            perc = f"\nRatio: {perc}%"
            token = f"\nNumber of iterations for the word '{word}': {token[0]}"
            token_len = (int(len(token)/2))
            menu = str("\n" + "-" * token_len + " SUMMARY " + "-" * token_len + "\n")
            menu2 = str("\n\n" + "-" * token_len + "TOP WORDS" + "-" * token_len + "\n\n")
            print(menu,total,token,perc,menu2,"\nOther words of interest:\n")
            # Keep words longer than 4 characters, excluding the word itself
            tokens = [t for t in tokens if len(t) > 4 and t.lower() != word.lower()]
            for k,v in Counter(tokens).most_common(10):
                print(f"{k:<17} > {v:>5}")

        def getMenu(self):
            main_menu = "\n\t[1] Help\n\t[2] List local text files\n\t[3] Context\n\t[4] Summary\n\t[5] Quit\n\n"
            while True:
                print(self.getLogo())
                choice = int(input(main_menu))
                if choice == 1:
                    self.getHelp()
                elif choice == 2:
                    self.getFiles()
                elif choice == 3:
                    input_text = input("\nPlease enter the name of your .txt file (must be in the same folder):\n")
                    input_token = input("\nPlease enter a keyword:\n")
                    try:
                        self.getContext(input_text,input_token)
                    except:
                        print(f"\nSorry, there doesn't seem to be a file with the name {input_text}")
                elif choice ==4:
                    input_text = input("\nPlease enter the name of your .txt file (must be in the same folder):\n")
                    input_token = input("\nPlease enter a keyword:\n")
                    try:
                        self.getSummary(input_text,input_token)
                    except:
                        print(f"\nSorry, there doesn't seem to be a file with the name {input_text}\n")
                elif choice ==5:
                    print("\nThe application will now quit, thank you.\n")
                    break
                else:
                    print("Wrong input")

        def getLogo(self):
            # Raw string, so the backslashes in the logo are not read as escape sequences
            logo = (r"""
    ____ ___ _ _ ____ _____ _____ ___ _____
    |_ _| | _| \ \ / / |_ _| | _ | | _ | | _| |_ _|
    | | | |_ \ \/ / | | | |_| | | |_| | | | | |
    | | | _| | | | | | / | _ | | | | |
    | | | |_ / /\ \ | | | |\ \ | | | | | |_ | |
    |_| |___| /_/ \_\ |_| |_| \_\ |_| |_| |___| |_|
    """)
            return logo

    if __name__ == "__main__":
        textract = texTract()
        textract.getMenu()