Save a Pandas Dataframe as a SQL Table Using SQLAlchemy

There are many reasons why we might want to save tabular data into a SQL database rather than simply outputting Pandas dataframes into .csv files.

We might, for instance, have an automated script that runs daily and extracts a certain amount of tweets using the Tweepy library. The script could be pulling tweets from different users, or use different hashtags and / or search terms. This would be best saved into separate tables, gathered within a single database.

For this article though we’ll only be using a very simple HTML table that is sitting on the TIOBE Index’s website, to show how straightforward it it to use SQLAlchemy.

If you have never heard of the TIOBE Index, here’s what the official website can tell us about it:

The TIOBE Programming Community index is an indicator of the popularity of programming languages. The index is updated once a month. The ratings are based on the number of skilled engineers world-wide, courses and third party vendors.

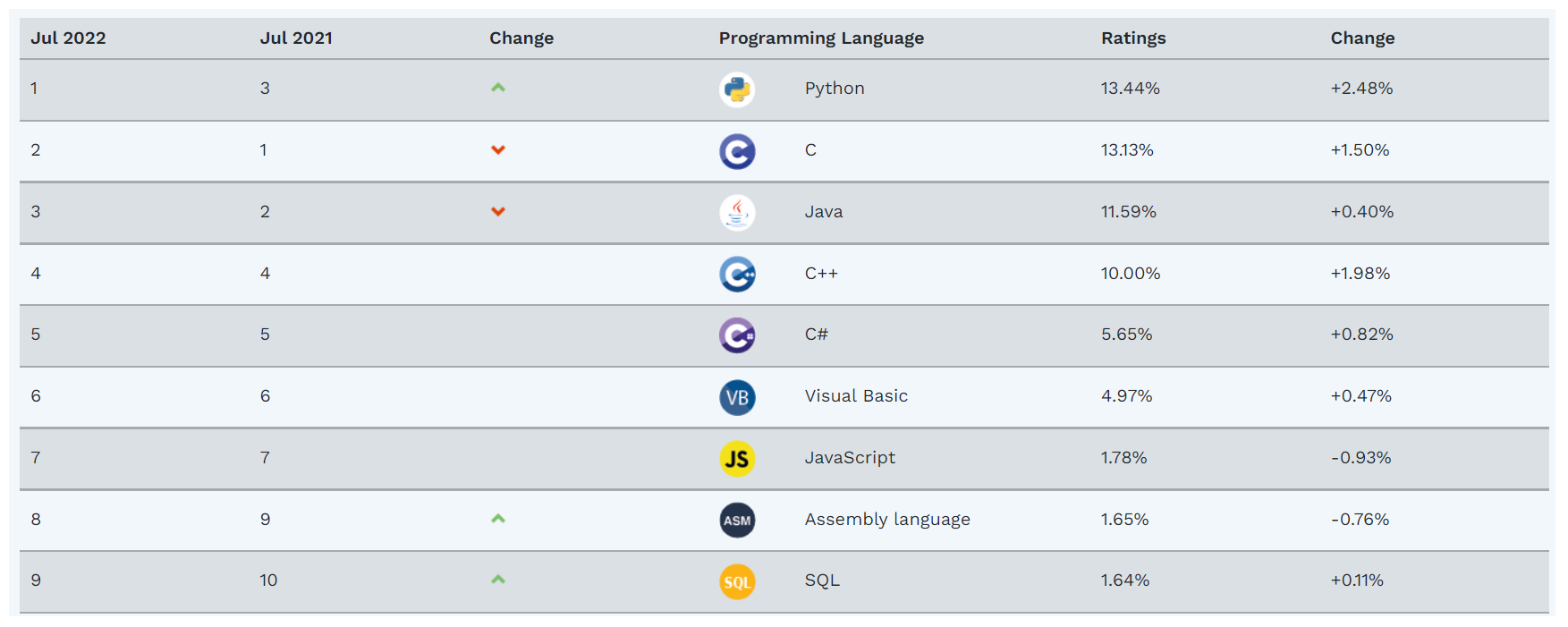

And this is what, as of September 2021 (Updated: July 2022), the table looks like:

The data

Now that we know what we’ll be working with, we can start by importing the necessary libraries:

import pandas as pd

import sqlalchemy

from sqlalchemy import create_engine

Next, we can write a simple function that passes an HTML table into a Pandas dataframe object. The reason why we are adding a zero index [0] at the end of line 2, is that the read_html method from Pandas will output all the existing tables within a given HTML page. If there were 5 tables within the HTML page (which is quite common in Wikipedia articles for instance), and we wanted the third table, we would select it by adding in [2] after the read_html method. Now, there is only one table on this page, but we still need to select it by appending a zero index to the end of line 2.

def getDataFrame(url_address,ind):

df = pd.read_html(url_address, index_col=None)[ind]

return df

df = getDataFrame("https://www.tiobe.com/tiobe-index/",0)

Let’s see what the output looks like.

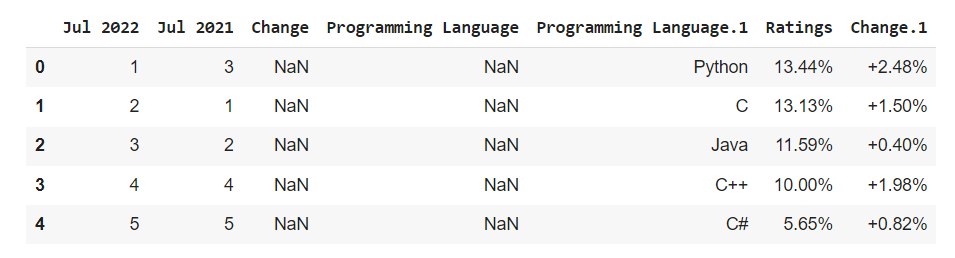

Ok, we now have a dataframe, but series 3 and 4 only contain NULL values, and we have a dot in the middle of the 5th serie. Let’s see how we can fix this. First, we’re going to loop through the name of the series, using the .columns argument, and pass these string into a list comprehension (line 2). We are ready to rename the 5th serie by passing cols[4] through Pandas’ .rename() method, before dropping cols[2] and cols[3] altogether.

def quickClean(data):

cols = [col for col in data.columns]

data.rename(columns={f"{cols[4]}" : "Language"}, inplace=True)

data.drop(columns=[cols[2],cols[3]], inplace=True)

return data

df = quickClean(df)

df.head()

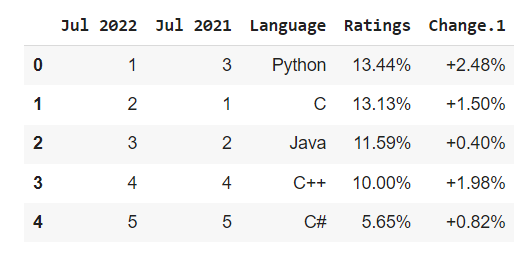

When we apply this function to our dataframe, we now get the following result:

That’s much better!

Creating engines

We’re ready to save this dataframe as a SQL table. To do so, we’re going to name this dataframe ‘tiobe’, which will sit within a database that we’re going to call ‘languages’. The next steps are, again, fairly simple:

- The first thing we need to to, is to use SQLAlchemy’s

.create_engine()method and select both a SQL dialect (here, SQLite) and a pool (roughly speaking, the name of your database). You can find more about the concept of engines directly on the SQLAlchemy website. - We can then use Pandas’

.to_sql()method, specifying the name of the database that the table will be stored into, and the name of the table. We’re also telling Pandas not to add an index, and to replace any existing data. If we wanted to insert new values into this table without deleting the existing ones, we would pass append as an argument instead.

def getDataBase(db_name_in,db_name_out,dataframe):

engine = create_engine(f"sqlite:///{db_name_in}.db")

dataframe.to_sql(db_name_out, engine, index=False, if_exists="replace")

return engine

engine = getDataBase("languages","tiobe",df)

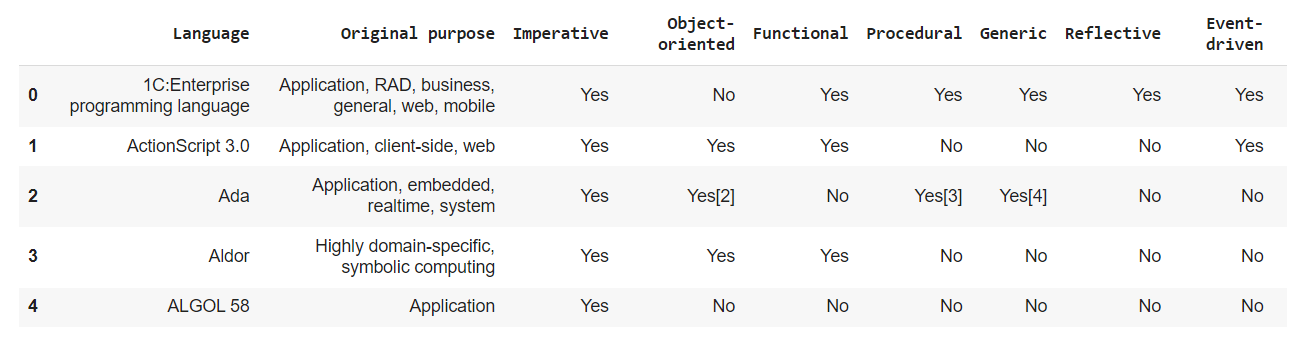

You might wonder at this stage why we are not simply saving our dataframe as a .csv file. Well, imagine that we have another dataframe, that we can combine with our first datafrane, using a JOIN statement. Wikipedia has this list of programming languages that we can scrape and output as a second dataframe:

df2 = getDataFrame("https://en.wikipedia.org/wiki/Comparison_of_programming_languages",1)

df2.head()

Let’s save this new dataframe within our languages database, this time naming it wiki.

engine = getDataBase("languages","wiki",df2)

We can now use SQL syntax within Pandas’ read_sql() method to JOIN our two tables:

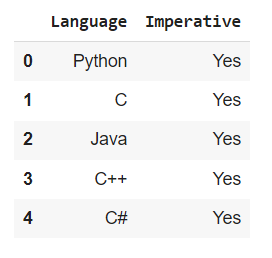

pd.read_sql(

"""

SELECT

t.Language,

w.Imperative

FROM

tiobe AS t

LEFT JOIN wiki AS w ON t.Language = w.Language

LIMIT 5

""",

engine

)

And this is what we obtain:

The code above only shows a very small part of all that can be done with the SQLAlchemy library, and I highly encourage you to read their online documentation