POS Tagging and Named Entity Recognition Using spaCy

I have often found that one of the easiest and most effective ways to approach short textual data, like comments or tweets, is to try to discover high-level patterns and visualise them. Topic modelling requires a bit of trial and error, while looking for recurring contextual words is better suited to larger chunks of unstructured data such as blog articles or novels.

So, most of the time, I start by performing the following two tasks:

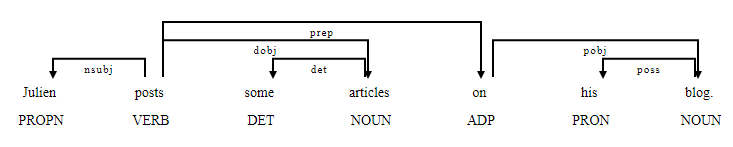

- Part-of-speech tagging: The objective here is to extract and categorise the grammatical function of each word within a sentence. Basically, we’re assigning a grammatical tag to the words in a corpus, as shown in the example below (we’ll see further down this article how to create this type of plot):

- Named Entity Recognition: The purpose of NER is to retrieve real-world objects that have a name from a corpus. Each named entity is then classified into one of a set of predefined categories.

For instance, in the sentence “Julien lives near UCD in Dublin, Ireland”, the following entities would be extracted and classified:

| Entity | Label (description) |
|---|---|
| “UCD” | ORG (companies, agencies, institutions) |
| “Dublin” | GPE (countries, cities, states) |
| “Ireland” | GPE (countries, cities, states) |
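Under the hood, each row of a table like this is just a labelled span of the original sentence. The sketch below uses a hypothetical helper, to_spans(), which is not part of spaCy, to turn the (entity, label) pairs into (text, start, end, label) tuples with character offsets. That is roughly the information spaCy exposes on each entity through its text, start_char, end_char and label_ attributes.

```python
def to_spans(text, entities):
    """Locate each (entity_text, label) pair in text and return character-offset spans."""
    spans = []
    search_from = 0
    for entity_text, label in entities:
        start = text.find(entity_text, search_from)
        if start == -1:
            raise ValueError(f"{entity_text!r} not found in text")
        end = start + len(entity_text)
        spans.append((entity_text, start, end, label))
        search_from = end  # assumes entities are listed in the order they appear
    return spans


sentence = "Julien lives near UCD in Dublin, Ireland"
spans = to_spans(sentence, [("UCD", "ORG"), ("Dublin", "GPE"), ("Ireland", "GPE")])
for entity_text, start, end, label in spans:
    print(f"{entity_text:<10} | {start:>3} | {end:>3} | {label}")
```

Slicing the sentence with each start and end (sentence[18:21], for example) gives back the entity text, which is what makes offsets a convenient way to store NER results alongside the raw text.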

The data

There are several Python libraries that can be used to extract this type of information, such as NLTK or TextBlob. However, I have found myself using spaCy more frequently than any other NLP library over the past couple of years: it’s lightning fast, and its community is pretty helpful.

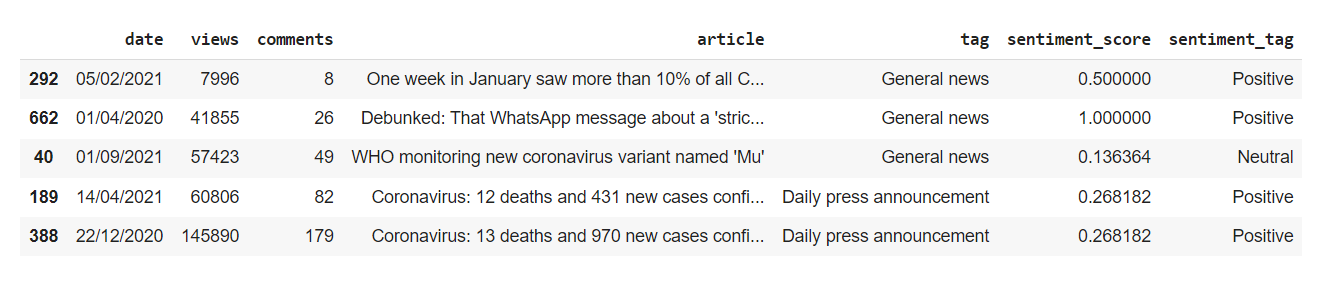

I just scraped a bunch of Covid-19-related articles from TheJournal.ie, one of the most popular Irish news sites. You can find the full dataset on my GitHub page.

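```python
import pandas as pd

def getDataframe(data):
    # Read a CSV file (local path or URL) into a DataFrame
    df = pd.read_csv(data)
    return df

df = getDataframe("https://raw.githubusercontent.com/julien-blanchard/dbs/main/df_journal.csv")

# Display 5 randomly selected rows
df.sample(5)
```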

This is what our dataframe looks like. We can pretty much ignore every column except df["article"], which holds the title of each article that was extracted from the news site.

```python
df = df.filter(["article"])
```

To work with spaCy, not only do we have to import the library, but we also have to load a trained pipeline. In this case, we’ll be using the "en_core_web_sm" pipeline, which is specific to the English language (if it isn’t installed yet, run python -m spacy download en_core_web_sm). You can learn more about spaCy’s trained pipelines in its documentation.

```python
import spacy

nlp = spacy.load("en_core_web_sm")
```

Ok, so how does it work? At a high level, all we have to do is pass our text through the pipeline we assigned to the nlp variable, which returns a Doc object that we will call doc. A Doc is a sequence of tokens we can loop through, and each token exposes attributes such as text or pos_, which we use to extract our POS tags.

spaCy’s tagger doesn’t rely on a rule-based or grammar-based method to assign each token a tag. Instead, it uses a statistical model trained on annotated text, which predicts the most likely tag for each token given its surrounding context. In other words, spaCy uses a trained pipeline to predict which tag or label is most likely to appear in a given context.
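To make the idea of “the most likely tag in a given context” concrete, here is a deliberately tiny counting-based tagger. It is a toy illustration only and not how spaCy works internally (spaCy’s tagger is a neural network trained on large annotated corpora). The training sentences and the names train() and tag_sentence() are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical hand-labelled training sentences: lists of (word, tag) pairs
TRAINING = [
    [("I", "PRON"), ("book", "VERB"), ("a", "DET"), ("flight", "NOUN")],
    [("the", "DET"), ("book", "NOUN"), ("is", "AUX"), ("good", "ADJ")],
    [("a", "DET"), ("book", "NOUN")],
    [("they", "PRON"), ("book", "VERB"), ("rooms", "NOUN")],
]


def train(sentences):
    """Count how often each tag occurs for a word, with and without its left context."""
    by_context = defaultdict(Counter)  # (previous tag, word) -> tag counts
    by_word = defaultdict(Counter)     # word -> tag counts
    overall = Counter()                # tag counts over the whole corpus
    for sentence in sentences:
        prev = "<START>"
        for word, label in sentence:
            w = word.lower()
            by_context[(prev, w)][label] += 1
            by_word[w][label] += 1
            overall[label] += 1
            prev = label
    return by_context, by_word, overall


def tag_sentence(words, model):
    """Greedily pick the most frequent tag, backing off from context to word to corpus."""
    by_context, by_word, overall = model
    prev = "<START>"
    tagged = []
    for word in words:
        w = word.lower()
        if (prev, w) in by_context:
            counts = by_context[(prev, w)]
        elif w in by_word:
            counts = by_word[w]
        else:
            counts = overall  # unseen word: fall back to the most common tag overall
        best = counts.most_common(1)[0][0]
        tagged.append((word, best))
        prev = best
    return tagged


model = train(TRAINING)
print(tag_sentence(["they", "book", "a", "book"], model))
```

The same word, “book”, comes out as VERB after a pronoun and as NOUN after a determiner, which is the kind of context sensitivity described above, just achieved here with simple counts.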

Please note that the :<10 format specifiers I’m using within each f-string are entirely optional: they just left-align each value in a 10-character-wide column so the results line up nicely.
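To see what those specifiers do in isolation, here is a small standalone example using plain strings rather than spaCy tokens: :<10 pads a value on the right to a width of 10, :>6 (used later in getCount()) pads on the left to a width of 6, and values longer than the width are never truncated.

```python
# ":<10" left-aligns and pads with spaces up to 10 characters
row = f"{'running':<10} | {'VERB':<10}"

# ":>6" right-aligns to 6 characters, handy for columns of counts
padded_num = f"{42:>6}"

# A value longer than the width is printed in full, so columns can drift
long_value = f"{'coronavirus':<10}|"

print(repr(row))
print(repr(padded_num))
print(repr(long_value))
```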

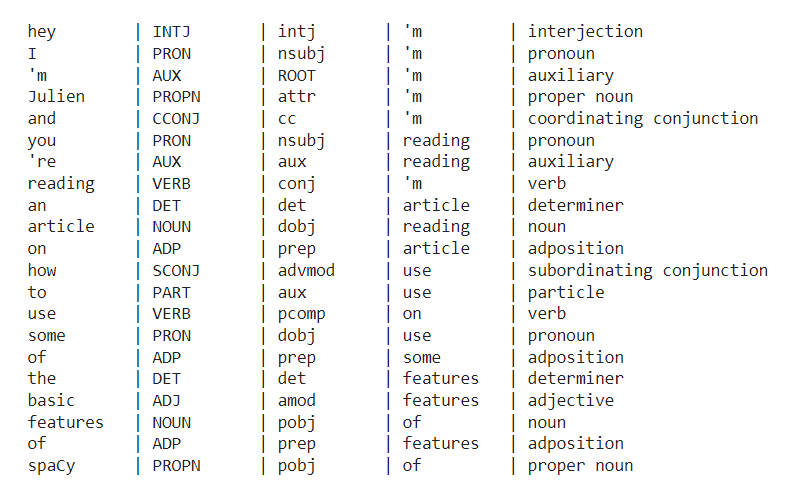

```python
text = "hey I'm Julien and you're reading an article on how to use some of the basic features of spaCy"

doc = nlp(text)

# For each token: text, coarse POS tag, dependency label, syntactic head, POS description
for token in doc:
    print(f"{token.text:<10} | {token.pos_:<10} | {token.dep_:<10} | {token.head.text:<10} | {spacy.explain(token.pos_):<10}")
```

That’s pretty cool, right? We have our tokens, their grammatical tags, their dependency labels and so on, which means we can filter the tokens by tag type. Say we only want to output the verbs; then we could simply write:

```python
for token in doc:
    if token.pos_ == "VERB":
        print(f"{token.text:<10} | {token.pos_:<10}")
```

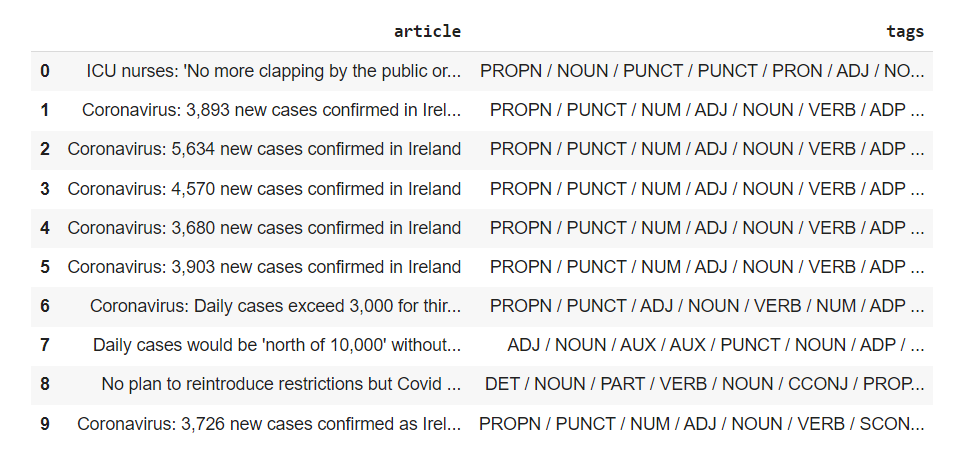

Now, back to our dataframe. To illustrate what we just saw, we can create a new column that holds the POS tags of the tokens in each df["article"] title. Though I can’t really see any practical reason why anybody would need that, here’s what the output would look like:

```python
def getTags(text):
    # Run one title through the pipeline and join its POS tags with " / "
    tokens = nlp(text)
    return " / ".join(t.pos_ for t in tokens)

df["tags"] = df["article"].apply(getTags)

df.head(10)
```

A more useful application of POS tagging would be to " ".join() all the strings in the df["article"] column, extract the verbs, and look for the most recurring ones.

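```python
def getVerbs(series, tag):
    # Concatenate all titles into one text and run it through the pipeline once
    result = []
    doc = nlp(" ".join(series))
    for token in doc:
        # Keep the lowercased text of every token whose POS tag matches
        if token.pos_ == tag:
            result.append(token.text.lower())
    return result

verbs = getVerbs(df["article"], "VERB")
```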

from collections import Counter

def getCount(data,howmany):

counted = Counter(data)

for k,v in counted.most_common(howmany):

print(f"{k:<15} | {v:>6}")

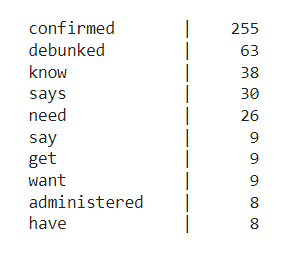

getCount(verbs,10)

And this is what we get: quite interesting if you ask me!

Named entity recognition

In a similar fashion, we can use spaCy’s built-in named entity recognizer to extract named entities from the exact same dataset. NER can help transform unstructured data into structured data, and provide contextual information about words of interest within a corpus.

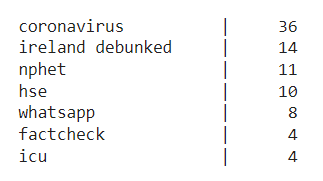

We can try to look at the most common PERSON (people, including fictional) and ORG (companies, agencies, institutions) entities, and again aggregate the results.

```python
import spacy

nlp = spacy.load("en_core_web_sm")

text = "hey I'm Julien and you're reading an article on how to use some of the basic features of spaCy"

doc = nlp(text)

# Each entity is a span of the Doc with its text and a predicted label
for entity in doc.ents:
    print(f"{entity.text:<10} | {entity.label_:<10} | {spacy.explain(entity.label_):<10}")
```

The main change here is that, instead of looping over the tokens, we’re looping over doc.ents, the named entities spaCy detected in our Doc.

Let’s see how we can apply this to the news site dataset!

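```python
def getEnts(series, tag):
    # Concatenate all titles and keep the lowercased text of entities with the given label
    result = []
    doc = nlp(" ".join(series))
    for entity in doc.ents:
        if entity.label_ == tag:
            result.append(entity.text.lower())
    return result

orgs = getEnts(df["article"], "ORG")
people = getEnts(df["article"], "PERSON")

# Aggregate the results with the getCount() helper defined earlier
getCount(orgs, 10)
getCount(people, 10)
```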
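getEnts() runs the whole concatenated text through nlp() every time it is called, so pulling out several labels means processing the corpus once per label. One alternative is to process the text once and tally every label of interest in a single pass. In the sketch below, count_by_label() is a hypothetical helper and the sample pairs are made up; in practice they would come from something like [(e.text, e.label_) for e in doc.ents].

```python
from collections import Counter, defaultdict


def count_by_label(entities, labels):
    """Count lowercased entity texts per label, keeping only the requested labels."""
    counts = defaultdict(Counter)
    for text, label in entities:
        if label in labels:
            counts[label][text.lower()] += 1
    return counts


# Hypothetical (text, label) pairs standing in for real doc.ents output
pairs = [
    ("Acme", "ORG"), ("Jane Doe", "PERSON"), ("ACME", "ORG"),
    ("Dublin", "GPE"), ("Globex", "ORG"), ("jane doe", "PERSON"),
]

counts = count_by_label(pairs, {"ORG", "PERSON"})
for label in sorted(counts):
    print(label, counts[label].most_common(2))
```

The trade-off is one nlp() call per label versus one dictionary of Counters keyed by label; each Counter still supports most_common(), so the results can be printed the same way getCount() does.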

That’s it for now; I hope you enjoyed reading this article as much as I enjoyed writing it!