The Polars Dataframe Library, but for Ruby

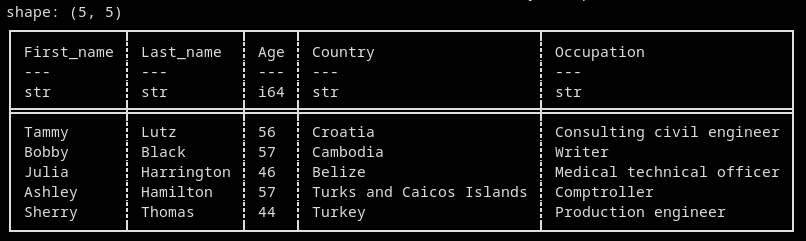

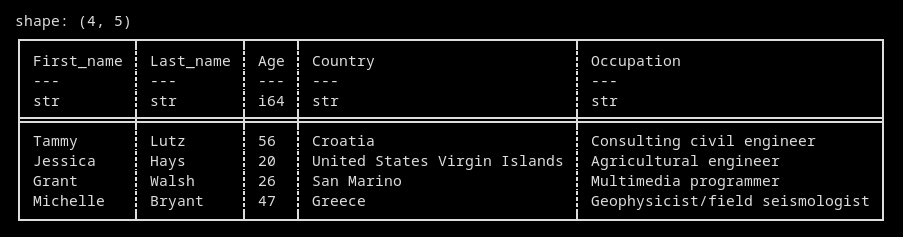

An example of what we’ll be doing in this article

I was reading some random conversation threads on HackerNews the other day when I came across an article which announced that Polars had just been ported to the Ruby programming language.

Now, unless you've been living under a rock for the past year or so, you probably know that Polars is a DataFrame library whose core is written in Rust. If you're wondering why this matters at all, it seems that Polars is considerably faster than most of its direct competitors. It also appears to be much more memory-efficient, which makes it possible to handle larger datasets. That being said, Pandas 2.0 has just been released and now supports Apache Arrow-backed columns too. Early benchmarks seem to confirm that its overall speed has greatly improved:

Now, we all know that a good data transformation and analysis toolkit isn't just about speed. Pandas offers tons of fantastic features, and it integrates nicely with a lot of other useful libraries such as NumPy or your favourite visualisation frameworks. Besides, if speed becomes an issue when using Pandas, or Python in general, my advice would be to rethink your approach and look for ways to improve your code before concluding that the programming language itself is your bottleneck.

So why am I writing this article anyway? Well, what caught my attention was a specific comment on that very same HackerNews thread (see the highlighted text):

For some reason that I can't explain, I've always felt attracted to Ruby. It's one of those languages, like Lisp dialects such as Racket or Clojure, that I have always heard great things about but never dared to try. A couple of years ago, I even followed the recommendation of a colleague of mine and purchased this book:

Rather than following that dismissive response, I actually headed in the exact opposite direction and asked myself "why not?". More broadly speaking, why wouldn't anybody want to use their favourite programming language for pretty much anything they want to do?

This article is going to show a complete noob's approach to both Ruby and Polars. I have of course gone past printing "Hello, world!", but not much further. So, as I know close to nothing about Ruby, please reach out to me if you spot anything that looks wrong or doesn't make any sense at all!

Life without Python, R, and Julia

If you are a regular reader of this website, you probably know how much I love using JavaScript for anything data-related. Last year, I wrote an article showcasing what can be done with Danfo.js, a recent yet already fairly comprehensive data wrangling package that works well with TensorFlow.js and offers native support for Plotly.js. I have also used Arquero, a dplyr-inspired package for the Node.js environment, on multiple occasions. Besides, JavaScript users can now access some pretty good Jupyter-like notebook solutions such as Starboard or Polyglot.

I wasn't sure whether I should mention ObservableHQ, which is perhaps the most renowned JavaScript-based collaborative notebook environment. Still, I've been quite reluctant to invest time and effort into a platform whose owners could decide to put it behind a paywall at any moment.

More “underground” approaches might include:

- GNU Octave, a scientific computing language that has been around for a fairly long time, and that I must admit I know nothing about. From what I understand, its syntax resembles MATLAB's.

- Elixir, yet another language that I've never had the chance to seriously invest time in but have always found appealing. I recently came across a YouTube channel called TitanTech that has several interesting videos dedicated to using Elixir in the context of data science.

- Nim, aka THE language that I would love to see grow over the next few years. Nim's syntax resembles Python's, its compiler can target C and JavaScript, and the whole language is just a joy to work with. Unfortunately, it doesn't offer a ton of libraries yet, but fingers crossed for the future.

- Wolfram. It took me some time to decide whether to include it in this list, for the same reasons I was hesitant to mention ObservableHQ earlier. However, it now seems to come bundled with recent versions of Raspberry Pi OS, so it can be used for free on some devices and at least deserves an honourable mention.

And, getting back to today's article, there's Ruby of course. But wait, does Ruby even have any data wrangling libraries? I recently stumbled upon a GitHub repository named Ruby for data science that links to various toolkits such as Daru, a dataframe gem that seems to work like Pandas. There are also a number of data visualisation libraries listed there, such as unofficial ports of Matplotlib and Plotly, as well as some pretty neat stuff that should feel familiar if you're coming from Python or R: NetworkX, XGBoost, etc. Unfortunately, this nice repo hasn't been updated since 2017, and most of these gems haven't had a fresh lick of paint in a while either.

Ankane + data + Ruby = love

Ankane is the nickname of a talented and prolific developer named Andrew Kane. He clearly loves Ruby, and over the past few years he has developed a ton of really cool gems.

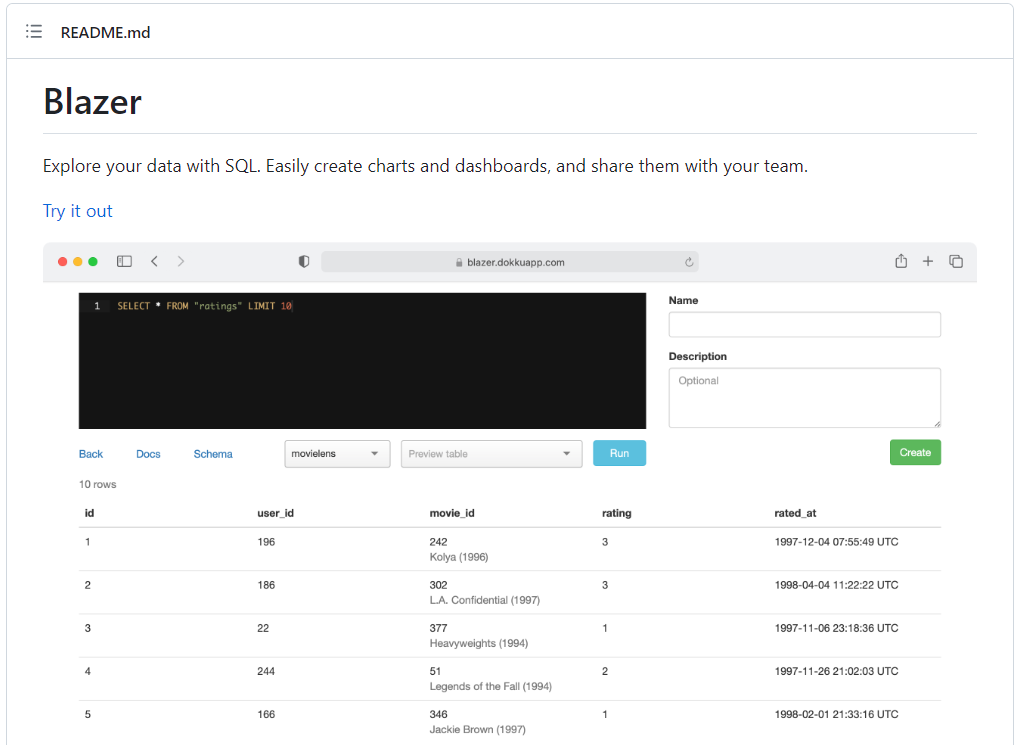

My favourite is probably Blazer, a comprehensive SQL playground for data analysis that packs a lot of features. I can see quite a few scenarios where such a tool would be very useful, especially for small to mid-size businesses that want an easy-to-navigate solution to explore their data:

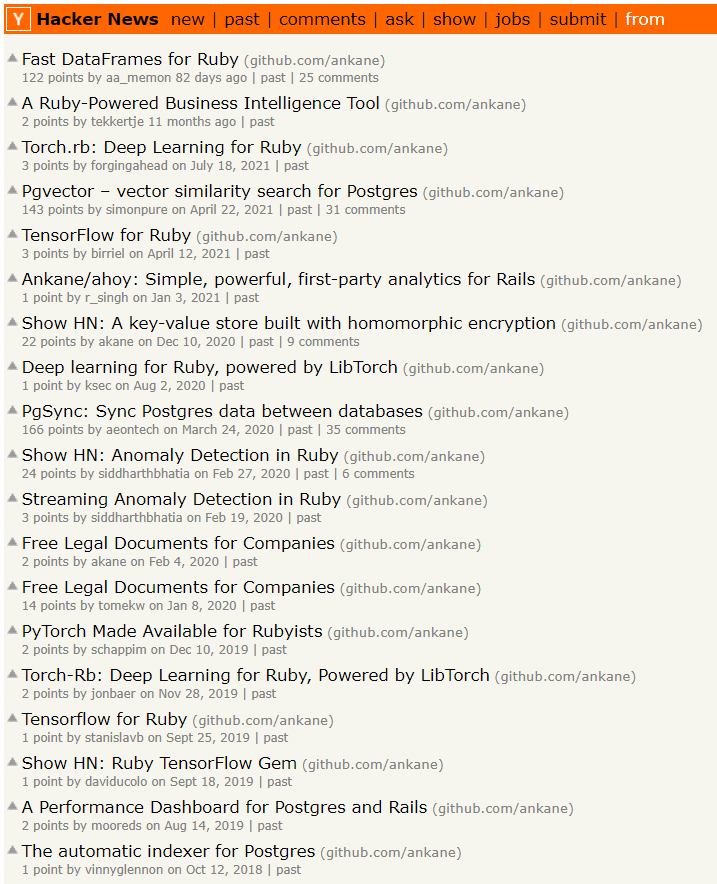

But aside from his own personal projects, he has become particularly known for porting popular libraries from various languages to the Ruby ecosystem. And he has literally done this dozens of times. I mean seriously, just take a look at all the cool libraries that he has brought to Ruby over the past few years:

- TensorFlow

- PyTorch

- Prophet

- Vega

- etc.

His recent contributions, as listed on Hacker News, are simply amazing:

First impressions

On a loosely related note, I recently moved to Manjaro, which I've been really enjoying so far. All the screenshots that you'll find below were taken while running my scripts through a terminal emulator named Kitty.

Alright, what we need first is a dataset! I have uploaded quite a few to my personal GitHub repository over the past few years, and for today's article we're going to use a set of fake names and jobs that I created a while ago when writing an article dedicated to a JavaScript library named Arquero:

```shell
wget https://raw.githubusercontent.com/julien-blanchard/datasets/main/fake_csv.csv
```

The CSV file is now saved locally, and a simple one-line bash command gives us an overview of the dataset:

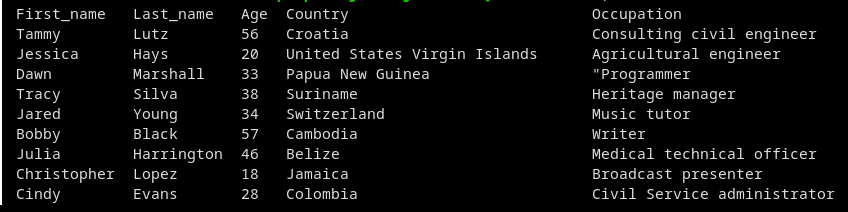

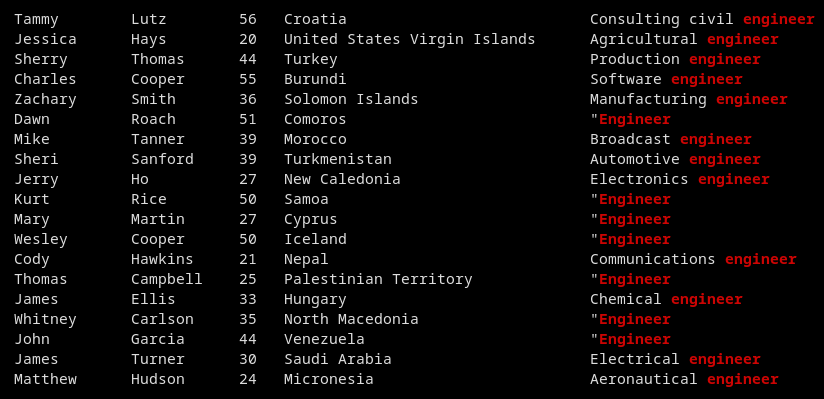

```shell
column -s"," -t fake_csv.csv | head
```

At this point, we could of course pipe the output through further commands, grep for instance, but we'd still be pretty limited. Anyway, let's retrieve all the users who might have a cool job:

```shell
column -s"," -t fake_csv.csv | grep -i engineer
```

Instead, let’s install the Ruby gem for Polars:

```shell
gem install polars-df
```

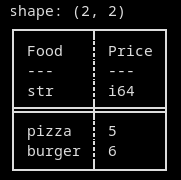

Loading a dataset into Polars is pretty straightforward. All we have to do is pass a hash (aka a dictionary, if you're coming from Python) to the Polars::DataFrame.new method:

```ruby
require "polars-df"

# A hash of column names => column values
food = {
  Food: ["pizza", "burger"],
  Price: [5, 6]
}

# Wrap the hash in a Polars dataframe
def getDataFrame(data)
  dframe = Polars::DataFrame.new(data)
  return dframe
end

df = getDataFrame(food)
puts df.head(10)
```

And we get something similar to what we obtained earlier using the column command, except that our data now seems to be surrounded by this nice frame, like in a... wait... yes! A dataframe!

Now that's all great, but arguably not very useful to us as we're working with a CSV file. Well, the good news is that Polars offers a wide range of methods to work with the most popular file formats. As a matter of fact, you'll be happy to know that Parquet files are also supported, and that we could even query a SQL database if we wanted to. For now, let's write a simple function that takes a file path as an argument and returns a dataframe:

```ruby
require "polars-df"

# Read a CSV file from disk into a Polars dataframe
def getDataFrame(csv_file)
  dframe = Polars.read_csv(csv_file)
  return dframe
end

df = getDataFrame("fake_csv.csv")
puts df.head
```

Besides CSV, Polars can also load data from the following formats and sources:

- JSON (which will be very useful when requesting data from a REST API)

- Parquet

- Active Record, Ruby on Rails' ORM (I must say that I'm not too familiar with this)

- Feather / Arrow IPC

- Avro (again, I had never come across this file format before)

This is awesome!

So far, so good! Before writing this article, I was able to get pretty much anything I tried to work. I played with Polars for Ruby for an entire weekend, and I must say that I had some great fun. Despite my obvious lack of knowledge around both the library and the overall language, I managed to load data from various sources and build a couple of simplistic but fun apps.

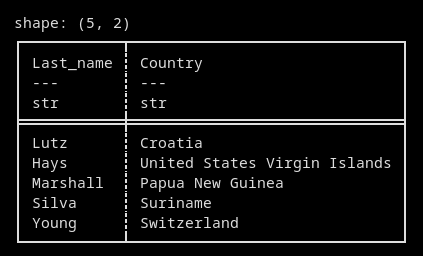

I found basic data manipulation, such as selecting one or several specific columns, especially intuitive:

```ruby
# Passing an array of column names returns a dataframe with just those columns
cols = df[["Last_name", "Country"]]
puts cols.head
```

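The same goes for filtering. Logical operators such as and (&), or (|) and xor (^) are of course supported and can even be combined:

```ruby
# Each condition needs its own parentheses, since & binds more tightly than > and ==
filtered = df[(Polars.col("Age") > 43) & (Polars.col("Country") == "Croatia")]
puts filtered.head
```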

One of the first things that I wanted to try was how to perform some basic aggregation, To do this, we can simply use the .groupby() method and add any summary statistics operator that we can think of (in this case count). What I really enjoyed as well, is how Ruby makes using method chaining easy to both write and read:

def getGroupBy(data,serie)

grouped = data

.groupby(serie)

.count

return grouped

end

df = getDataFrame("fake_csv.csv")

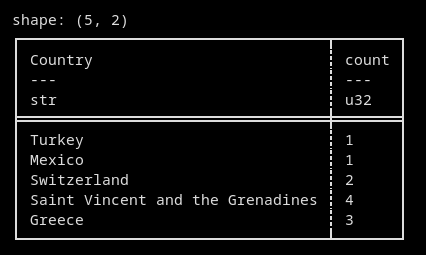

group = getGroupBy(df,"Country")

puts group.head

Actually, one of the only issues I encountered was that I couldn't sort the results of my queries in descending order:

def getGroupBy(data,serie)

grouped = data

.groupby(serie)

.count

.sort(["count"])

return grouped

end

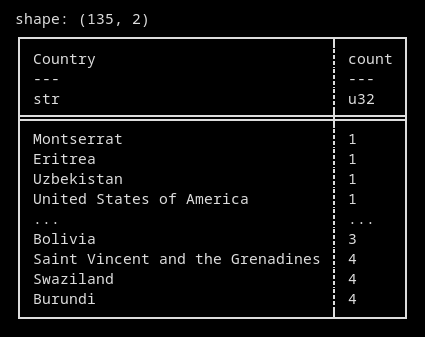

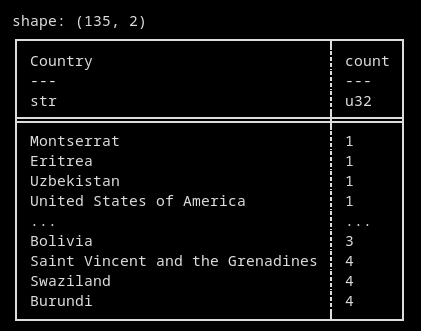

df = getDataFrame("fake_csv.csv")

group = getGroupBy(df,"Country")

puts group

As you can see in the example above, the .sort() method is supported, but I kept getting errors when trying either of the following:

```ruby
.sort(["count"], ascending=false)
.sort(["count"], {ascending: false} )
```

That's only a minor issue, though, and it's more likely that I simply couldn't figure out how to sort the output of my queries than that Polars doesn't allow it.

Oh, I almost forgot: combining dataframes using JOIN and UNION-like methods is super easy:

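```ruby
# JOIN-like: match rows from both dataframes on the Last_name column
left = df[["Last_name", "Country"]]
right = df[["Last_name", "Age"]]
combined = left.join(right, on: "Last_name", how: "left")
puts combined.head

# UNION-like: stack the first two rows on top of the last two
top = df.head(2)
bottom = df.tail(2)
combined = top.vstack(bottom)
puts combined
```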

Please note that at the bottom of his GitHub repository's README, Andrew Kane also shows how to create simple visualisations using his own port of Vega-Lite. That being said, I feel that this article is already way too long for its own good, so we won't explore charting libraries for Ruby for now.

I had always wanted to try Ruby

Right, here are my humble two cents: playing around with Polars for Ruby over the course of a weekend has been a ton of fun! Of course, just as working with Pandas doesn't fully expose you to Python, performing some basic data manipulation with the Polars gem didn't show me much of what Ruby is all about.

What it did, though, was make me want to dig further and step out of my comfort zone. For instance, I found some pretty interesting gems, such as stanford-core-nlp and some of the packages listed on the Ruby Natural Language Processing Resources page, that I really want to start exploring. My go-to languages for this type of work have always been Python and JavaScript, and I'm now curious to see if some of these gems might lead me to discover concepts or approaches that I haven't had the chance to be exposed to yet.

Should Ruby become your default language for data science? Probably not. But if you’re looking for something fun to learn, you now know where to start!