Evaluating Topic Coherence With Palmetto

Over the last couple of years, I have been dabbling a bit with topic modelling, and to this day I still find this niche subset of computational linguistics quite fascinating. Some very interesting research papers have been published over the past decade, and recent advances in natural language processing such as transformers, combined with the development of some new libraries, have certainly brought a welcome breath of fresh air to the field.

Though I had a great time fitting various models on a wide range of datasets (including news articles, tweets, entire novels, etc.), one issue I systematically encountered was that I struggled to find a strong and easy-to-scale evaluation framework. What proved most challenging was finding a set of statistical evaluation tools and metrics that I could trust enough to favour over human evaluation.

I do believe that there are cases where human evaluation adds value, for instance by bringing in some contextual knowledge. This is true for situations where not all the data that a model would normally require to output accurate results is actually present within the dataset. Complex disambiguation is a good example: some words introduce added layers of complexity, either because they’re acronyms or because their local meaning can’t be found within any pre-trained dictionary.

I challenge anybody to show me a pre-trained model that can output a high coherence score for ["GAA", "AIB", "hurling"].

Reliable evaluation metric wanted urgently

Typically, in machine learning, a model is trained on a subset of the dataset called the training set, and its performance is then assessed by running it on a separate validation set. In unsupervised learning though, and more specifically in clustering, the norm is to rely on a combination of very specific approaches such as elbow and knee plots, silhouette scores, and observations of the mean and variance of the features within each cluster.

The reason why I’m mentioning clustering is that in many aspects, Topic modelling very much resembles your typical clustering model. Ultimately, the main goal is mostly to try and discover underlying patterns in data and organise them into buckets, or clusters.

But just as coming up with accurate metrics for classical clustering can prove challenging, evaluating how related the main words within a topic are remains complicated.

Hello Palmetto

One of the main evaluation frameworks that regularly pops up if you look for word-to-word coherence metrics is a tool called Palmetto. In their research paper, its authors explain how they explored no fewer than 200,000 coherences to build their library, before reducing them to a final set of six that provide the best coherence calculations for the most prominent words within a given topic. These coherences are based on word co-occurrences and were extracted from an English Wikipedia corpus, before being evaluated against human ratings.

The six coherences are:

- C_A

- C_V

- C_P

- C_NPMI

- C_UCI

- C_UMass

I won’t go into greater detail about these coherences, mainly because I don’t fully understand how they work, but also because the folks at Palmetto have created this amazing website that allows anybody to enter a list of comma-separated words and output their score using any of the coherence models listed above.

Some of these names should already sound familiar to you anyway if you have ever worked with the Gensim library in Python.

According to its creators, the coherence metrics provided by Palmetto “outperform existing measures with respect to correlation to human ratings” [1]. In this article, we’ll be mainly using the “C_V” model, which is “based on a sliding window, a one-preceding segmentation of the top words and the confirmation measure of Fitelson’s coherence”.

You can learn more about the coherences here.
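To give a rough feel for what "based on word co-occurrences" means, here is a toy sketch of an NPMI-style score. It is a simplification of my own and not Palmetto's implementation: it counts co-occurrences per whole document rather than with a sliding window, and the function name `npmi_coherence` and the tiny corpus are made up for the illustration.

```python
import math
from itertools import combinations


def npmi_coherence(topic_words, documents):
    """Mean NPMI over all word pairs, using document-level co-occurrence counts."""
    doc_sets = [set(doc) for doc in documents]
    n_docs = len(doc_sets)

    def prob(*words):
        # Share of documents that contain every one of the given words
        return sum(all(w in d for w in words) for d in doc_sets) / n_docs

    scores = []
    for w1, w2 in combinations(topic_words, 2):
        p1, p2, p12 = prob(w1), prob(w2), prob(w1, w2)
        if p12 == 0:
            scores.append(-1.0)  # the pair never co-occurs: lowest NPMI value
        elif p12 == 1:
            scores.append(0.0)  # both words in every document: ratio undefined, treated as 0 here
        else:
            scores.append(math.log(p12 / (p1 * p2)) / -math.log(p12))
    return sum(scores) / len(scores)


# Hypothetical toy corpus: each inner list is one tokenised document
toy_docs = [
    ["apple", "banana", "mango"],
    ["apple", "banana", "cherry"],
    ["banana", "mango", "peach"],
    ["football", "goal", "referee"],
    ["football", "sweater", "bird"],
    ["nose", "bird", "tree"],
]

fruit_score = npmi_coherence(["apple", "banana", "mango"], toy_docs)
mixed_score = npmi_coherence(["football", "bird", "nose"], toy_docs)
print(f"fruit: {fruit_score:.3f}  mixed: {mixed_score:.3f}")
```

Words that tend to show up in the same documents get a higher score than words that rarely or never meet, which is the intuition behind all six measures, even though the real ones differ in how they segment and count.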

Introducing Palmettopy

You might be wondering at this point how we can use any of the aforementioned scores in a production environment. The good news is, you can easily install the Python palmettopy library and start messing around with some of the coherence models.

pip install palmettopy

And then just import the library as follows:

from palmettopy.palmetto import Palmetto

To start evaluating a list of words and output their coherence, we must first pass a URL as an argument to the Palmetto constructor. The URL sometimes changes, and if that happens you can raise a bug on the official GitHub repo (which I did a few months ago).

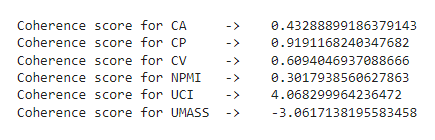
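Since the endpoint can move, one option is to keep the URL out of the code. The snippet below is a minimal sketch of that idea: the environment variable name `PALMETTO_URL` and the helper `resolve_palmetto_url` are my own inventions, and the default is simply the URL used in this article.

```python
import os

# Default taken from this article; PALMETTO_URL is a hypothetical variable name
DEFAULT_PALMETTO_URL = "http://palmetto.cs.upb.de:8080/service/"


def resolve_palmetto_url(env=None):
    """Return the endpoint set in the environment, falling back to the default."""
    env = os.environ if env is None else env
    url = env.get("PALMETTO_URL", "").strip() or DEFAULT_PALMETTO_URL
    # Keep the trailing slash so the result has the same shape as the default URL
    return url if url.endswith("/") else url + "/"


print(resolve_palmetto_url())
```

The result can then be passed to the constructor, as in `Palmetto(resolve_palmetto_url())`, so that a change of address only requires updating the environment.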

The script below shows how to loop through the aforementioned 6 coherences and have them evaluate a list of related words.

def getPalmettoScore(tokens):
    # Client for the public Palmetto web service
    palmetto = Palmetto("http://palmetto.cs.upb.de:8080/service/")
    coherences = ["ca", "cp", "cv", "npmi", "uci", "umass"]
    # Query each coherence type for the same list of words
    for c in coherences:
        print(f"Coherence score for {c.upper():<7}->\t", palmetto.get_coherence(tokens, coherence_type=c))

words = ["mango", "apple", "banana", "cherry", "peach"]
getPalmettoScore(words)

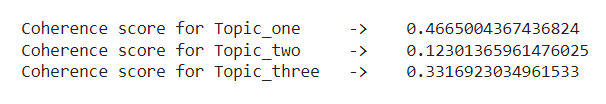

Back to topic modelling: a practical application of the Palmetto framework would be to gather the words that make up each topic and evaluate their coherence as follows, this time using the "c_v" score only:

sample_topics = {
    "Topic_one": ["mango", "apple", "banana", "cherry"],
    "Topic_two": ["football", "bird", "nose", "sweater"],
    "Topic_three": ["cat", "lion", "feline", "panther"]
}

def getCoherenceScores(topics):
    palmetto = Palmetto("http://palmetto.cs.upb.de:8080/service/")
    # Score the words of each topic with the C_V coherence
    for k, v in topics.items():
        print(f"Coherence score for {k:<13} ->\t{palmetto.get_coherence(v, coherence_type='cv')}")

getCoherenceScores(sample_topics)

The second topic, whose words are not related, shows a lower score than the other two topics. That’s some good news!

What isn’t so great, though, is that palmettopy is extremely slow and might not be well suited to processing large corpora.
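One cheap mitigation, when the same word lists get scored more than once, is to cache the results in memory. This is only a sketch under my own assumptions: `make_cached_scorer` is a hypothetical helper, and a fake scoring function stands in for the remote call so that the example runs offline.

```python
from functools import lru_cache


def make_cached_scorer(score_fn, maxsize=1024):
    """Wrap score_fn(words, coherence_type) so repeated requests are served from memory."""

    @lru_cache(maxsize=maxsize)
    def _cached(words, coherence_type):
        return score_fn(list(words), coherence_type)

    def scorer(words, coherence_type="cv"):
        # Lists are not hashable, so the words are passed on as a tuple (order is kept)
        return _cached(tuple(words), coherence_type)

    return scorer


# Stand-in for a slow remote call; it only records how often it was invoked
calls = []


def fake_remote_score(words, coherence_type):
    calls.append((tuple(words), coherence_type))
    return 0.5


cached_score = make_cached_scorer(fake_remote_score)
cached_score(["cat", "lion", "panther"])
cached_score(["cat", "lion", "panther"])  # answered from the cache
print(f"remote calls made: {len(calls)}")
```

With palmettopy, `score_fn` could be something like `lambda words, c: palmetto.get_coherence(words, coherence_type=c)`. The cache key keeps the word order, because I am not sure every coherence type is insensitive to it. Note that this does nothing for the first request of each word list, so it only helps when topics repeat.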

Gensim to the rescue?

Luckily for those who may want to use these coherence measures at a larger scale, the Gensim library offers its own implementation of the Palmetto topic coherence framework. Topic coherence was implemented in Gensim in 2016, and aims to show which topic model prevails over the others by attributing a score to each topic. The Topic Coherence framework also provides a feature that plots the score for the topic models that were output.

So, all good then! Well, it’s a bit more complicated than that. But let’s take a look at how things work first:

from gensim.test.utils import common_corpus, common_dictionary
from gensim.corpora import Dictionary
from gensim.models.coherencemodel import CoherenceModel

sample = [
    ["cat", "tiger", "lion"],
    ["apple", "banana", "strawberry"]
]

def getPalmettoScore(topics):
    # dictionary = Dictionary(topics)
    # corpus = [dictionary.doc2bow(t) for t in topics]
    cm = CoherenceModel(
        topics=topics,
        corpus=common_corpus,
        dictionary=common_dictionary,
        coherence="u_mass")
    score = cm.get_coherence()
    print(score)

getPalmettoScore(sample)

The script should run and output a score. But you might get the following error: "might be a list of token ids already, but let's verify all in dict". One tweak is to add two new lines in your function, as shown in this very informative notebook:

dictionary = Dictionary(topics)

corpus = [dictionary.doc2bow(t) for t in topics]

Then simply pass these new variables as the corpus and dictionary arguments. If you continue having this issue, make sure that you are using the latest version of Gensim; you can check the installed version by typing print(gensim.__version__).

But by the way, why did we get this error in the first place? Well that, my friends, is unfortunately the elephant in the room. If Gensim encounters a word that isn’t present in the dictionary it was given, it will raise an error. This issue has been raised multiple times and now seems fixed. But the underlying problem remains: Gensim won’t evaluate the coherence of words that aren’t present in its Dictionary and will just skip them.
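Before trusting a score, it can help to check how many of a topic's words the dictionary actually knows. Here is a small sketch of such a check. The helper `split_by_vocabulary` is hypothetical, and a plain dict stands in for the `token2id` mapping of a Gensim Dictionary so that the example runs without Gensim.

```python
def split_by_vocabulary(topics, token2id):
    """For each topic, separate the words the dictionary knows from those it does not."""
    report = {}
    for name, words in topics.items():
        report[name] = {
            "known": [w for w in words if w in token2id],
            "unknown": [w for w in words if w not in token2id],
        }
    return report


# Plain dict standing in for dictionary.token2id (token -> integer id)
toy_token2id = {"apple": 0, "banana": 1, "hurling": 2}

toy_topics = {
    "fruit": ["apple", "banana", "mango"],
    "irish": ["GAA", "AIB", "hurling"],
}

vocab_report = split_by_vocabulary(toy_topics, toy_token2id)
for name, parts in vocab_report.items():
    print(f"{name}: unknown words = {parts['unknown']}")
```

A topic where most words land in the "unknown" list is a topic whose coherence score says very little, whatever the number turns out to be.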

What’s next

Fortunately, we can still train our own indexes if we want to (and have sufficient data to do so).

As the Palmetto coherence score used above and NLTK’s Synset score were both built on large but generic datasets (Wikipedia and Princeton’s WordNet respectively), these metrics will likely struggle to output accurate results when applied to more specific datasets.
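To illustrate why the reference corpus matters, here is a toy UMass-style score computed against two tiny corpora that I made up for the occasion. It is a sketch of the general idea, not Palmetto's or Gensim's code: the function `umass_coherence` is hypothetical, and returning `None` for unseen words is a choice of mine.

```python
import math
from itertools import combinations


def umass_coherence(topic_words, documents, eps=1.0):
    """UMass-style score: mean of log((D(wi, wj) + eps) / D(wj)) over ordered word pairs.

    Expects at least two topic words.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_count(*words):
        # Number of documents containing every one of the given words
        return sum(all(w in d for w in words) for d in doc_sets)

    # A word that the reference corpus has never seen cannot be scored at all
    if any(doc_count(w) == 0 for w in topic_words):
        return None

    pair_scores = [
        math.log((doc_count(w_i, w_j) + eps) / doc_count(w_j))
        for w_j, w_i in combinations(topic_words, 2)  # w_j comes before w_i in the topic
    ]
    return sum(pair_scores) / len(pair_scores)


# Hypothetical reference corpora: one generic, one domain-specific
generic_docs = [
    ["bank", "loan", "interest"],
    ["football", "league", "goal"],
    ["bank", "football", "sponsor"],
]
irish_docs = [
    ["GAA", "hurling", "final"],
    ["AIB", "GAA", "sponsor"],
    ["AIB", "GAA", "hurling", "club"],
    ["hurling", "GAA", "Kilkenny"],
]

irish_topic = ["GAA", "AIB", "hurling"]
generic_result = umass_coherence(irish_topic, generic_docs)
domain_result = umass_coherence(irish_topic, irish_docs)
print("generic corpus:", generic_result)
print("domain corpus: ", domain_result)
```

The generic corpus cannot score the topic at all, while the domain corpus, where these three words keep meeting, gives a value close to zero, which is the top of the range for this kind of measure.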

Palmetto offers this very comprehensive guide which describes all the necessary steps to follow to train a new index on the reference corpus of your choice. This isn’t something that I have had the chance to try yet, but if you have, please reach out to me and let me know how it went for you!