Dictionary APIs for the Enthusiastic Linguist: An Overview

An example of what we’ll be doing in this article

For anybody who’s ever worked with textual data, the past 4 or 5 years have been an absolute blast. Since the publication of Attention Is All You Need in 2017, the field of natural language processing has seen new frameworks, libraries, and concepts coming up on a regular basis.

Take sentiment analysis for instance. For years, available solutions were limited to rule-based models like VADER or AFINN. And don’t get me wrong, I’ve always had great results using either of these two models. But we now have access to even more comprehensive and robust solutions, like Hugging Face’s RoBERTa which, if I remember correctly, was trained on 58+ million tweets. And as if that wasn’t enough, we can now even build our own sentiment analyzer and train it on whichever dataset we want!

All these packages, all these models, are just a few pip installs away from our favourite IDE. And this is great. It truly is. But as hobbyist programmers, we sometimes want to experiment and build things ourselves. Nothing beats the feeling of satisfaction that comes from starting a project from scratch and writing some obscure package that nobody will ever use but that we will thoroughly enjoy spending all our free time developing.

From term to data

Let’s imagine a scenario where, instead of relying on NLTK or spaCy’s Part-Of-Speech taggers, we decided to create our own. Where would we even start? Well, most likely we’d have to pick one of the following two options:

- We could try and gather some txt files that contain key / value pairs for common terms and their tag. Such files exist on GitHub for instance, and can be easily accessed alongside a bunch of other useful stuff like lists of common stopwords for each and every possible language.

- We could try and scrape data from an online dictionary website such as Collins or Merriam-Webster, as their search result pages usually contain all the information that we’re looking for: definition(s), POS tag(s), example(s), etc.

But an even better approach would be to use an API. If you’re not familiar with what an Application Programming Interface is, you’ll find plenty of documentation online. Long story short, we’re talking about a program that enables two computers to talk to each other and exchange some information. If you want to know more, the following video is a good place to start:

In today’s article, we’ll be specifically focusing on the following three NLP-oriented APIs:

- The Free Dictionary API

- The Oxford Dictionary API

- The Merriam-Webster Dictionary API

If you don’t mind slightly opinionated takes, we’ll also quickly discuss why you should stay away from WordsAPI, and from any of RapidAPI’s products in general.

But before we get there, let’s try and see how the second option we listed earlier, aka scraping a website, would work.

Why use an API?

[ Disclaimer: the code snippets that you will find in this article are pretty bad, and can be easily optimized. For one, we should avoid nested loops at all cost when traversing any data type in Python. However, I wanted to focus on readability for this article, hence the multiple loops and f-strings that you are about to see. ]

Actually, that’s a good question, why should we have to use an API? After all, we could scrape the data ourselves, couldn’t we?

Let’s see how!

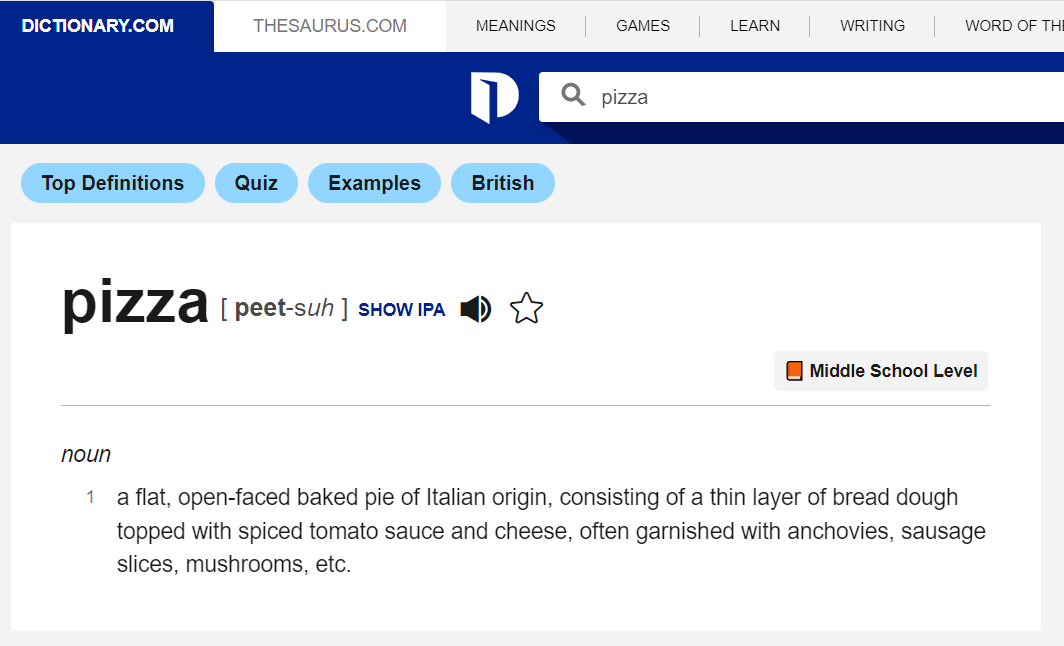

Luckily for us, there’s this simple, and yet comprehensive website named Dictionary.com. If we search for the term “pizza”, the webpage that is returned looks like this:

After trying to input a few different terms, it’s quite clear that no matter what we search for, we always end up on a similar looking result page. The next steps are then both simple and logical:

- Given that the url is https://www.dictionary.com/browse/pizza, we can simply change the search term “pizza” at the end of that string to whichever term we want to search for, and get its corresponding results page.

- We can then simply open the developer tools in our browser and inspect the html tags where the information that we are looking for is stored. We’ll make a mental note of the corresponding class and id attributes and use them for our little scraping program.
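The first of these two steps can be tried in isolation. The sketch below is only an illustration: the helper name build_url and the choice of percent-encoding the term with urllib.parse.quote are my own assumptions, not something Dictionary.com documents, and the site may well expect a different convention for multi-word terms.

```python
from urllib.parse import quote

BASE_URL = "https://www.dictionary.com/browse/"

def build_url(term):
    # Percent-encode the term so that spaces and non-ASCII characters are url-safe
    return BASE_URL + quote(term.strip().lower())

print(build_url("pizza"))
print(build_url("hot dog"))
```

Keeping the url construction in one small function also means there is a single place to change if the website ever alters its address scheme.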

Writing it should actually be pretty straightforward:

    import requests
    from bs4 import BeautifulSoup

    class WordMeaning:

        def __init__(self):
            self.data = {
                "term": "",
                "pos-tag": [],
                "pronunciation": [],
                "definition": []
            }

        def getContent(self,term):
            # Download the results page for the term and parse its html
            url = f"https://www.dictionary.com/browse/{term}"
            url_page = requests.get(url)
            soup = BeautifulSoup(url_page.content, "html.parser")
            return soup

        def getData(self,term):
            soup = self.getContent(term)
            self.data["term"] = term
            # The class names below were copied from the browser's developer tools
            for tag in soup.find_all("span", class_="luna-pos"):
                self.data["pos-tag"].append(tag.text.strip())
            for tag in soup.find_all("span", class_="pron-spell-content css-7iphl0 evh0tcl1"):
                self.data["pronunciation"].append(tag.text.replace("[","").replace("]","").strip())
            for tag in soup.find_all("span", class_="one-click-content css-nnyc96 e1q3nk1v1"):
                self.data["definition"].append(tag.text.strip())

        def getDefinition(self,term):
            # Scrape first, then print each field as a numbered list
            self.getData(term)
            for k,v in self.data.items():
                if k == "term":
                    print(f"Term:\n\n\t{v.title()}")
                if k == "pronunciation":
                    x = 1
                    print("\nPronunciation(s):\n")
                    for val in v:
                        print(f"\t{x}. {val}")
                        x+=1
                if k == "pos-tag":
                    x = 1
                    print("\nPOS-tag(s):\n")
                    for val in v:
                        print(f"\t{x}. {val.upper()}")
                        x+=1
                if k == "definition":
                    x = 1
                    print("\nDefinition(s):\n")
                    for val in v:
                        print(f"\t{x}. {val.capitalize()}")
                        x+=1

    if __name__ == "__main__":
        meaning = WordMeaning()
        meaning.getDefinition("python")

What we just did is, I think, relatively straightforward:

- We created a dictionary named data to store the values that we wanted to retrieve.

- We then sent an http request to the Dictionary.com website for the search term “python”, and pulled the html content from the corresponding search result page.

- Looping through that page, we retrieved the values that were sitting between some html tags, and stored them into our initial dictionary.

- Finally, we just arbitrarily complicated everything so that we could present our scraped results in a human-readable format. That part is, of course, entirely optional.
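To try the extraction step without hitting the website or installing anything, the same idea can be sketched with the standard library’s html.parser module on a hard-coded snippet. Everything here is illustrative: the SpanTextParser class is hypothetical, and the sample html is made up and only imitates the kind of markup that we inspected in the developer tools.

```python
from html.parser import HTMLParser

class SpanTextParser(HTMLParser):
    """Collects the text found inside <span> tags that carry a given class."""

    def __init__(self, wanted_class):
        super().__init__()
        self.wanted_class = wanted_class
        self.depth = 0          # > 0 while we are inside a matching span
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag != "span":
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth or self.wanted_class in classes:
            self.depth += 1
            if self.depth == 1:
                self.results.append("")

    def handle_endtag(self, tag):
        if tag == "span" and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.results[-1] += data

# Made-up snippet that only imitates the structure of a results page
sample = (
    '<div><span class="luna-pos">noun</span>'
    '<span class="other">x</span>'
    '<span class="luna-pos"> verb </span></div>'
)

parser = SpanTextParser("luna-pos")
parser.feed(sample)
pos_tags = [text.strip() for text in parser.results]
print(pos_tags)
```

BeautifulSoup does the same job in one line, which is why the article uses it, but the sketch shows what “retrieving the values sitting between some html tags” amounts to.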

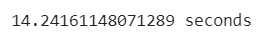

To be fair, we could easily stop there. After all, we managed to obtain the information that we wanted. Mission accomplished! But there’s one caveat though: it took approximately 3 seconds for the script to scrape some simple data from the Dictionary.com website and return all that information about the term that we were looking for. And all that time just for the single term “python”.

What if we had to run our script through a list of dozens, or hundreds of terms? How long would it take for our script to run? To better illustrate this potential issue, let’s consider the final sentence from Francis Scott Fitzgerald’s The Great Gatsby.

“So we beat on, boats against the current, borne back ceaselessly into the past.”

That’s an arguably short and simple string, with only 14 terms in total if we remove punctuation signs.
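The token count is easy to double-check. The regular expression below is just one possible way to drop punctuation, chosen for this sketch, and not necessarily how the list used in this article was produced.

```python
import re

sentence = "So we beat on, boats against the current, borne back ceaselessly into the past."

# Keep runs of letters (and apostrophes), which drops the commas and the final period
tokens = re.findall(r"[A-Za-z']+", sentence)

print(tokens)
print(len(tokens))
```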

Let’s turn this sentence into a list of tokens, and see how long it takes for our script to run through that array. Actually, we’ll slightly modify our previous class object so that it only scrapes data but doesn’t output it:

    import time
    import requests
    from bs4 import BeautifulSoup

    sentence = ["So","we","beat","on","boats","against","the","current","borne","back","ceaselessly","into","the","past"]

    data = {
        "term": "",
        "pos-tag": [],
        "pronunciation": [],
        "definition": []
    }

    start_time = time.time()

    def getTimeToData(term):
        # Same scraping logic as before, minus the printing
        url = f"https://www.dictionary.com/browse/{term}"
        url_page = requests.get(url)
        soup = BeautifulSoup(url_page.content, "html.parser")
        data["term"] = term
        for tag in soup.find_all("span", class_="luna-pos"):
            data["pos-tag"].append(tag.text.strip())
        for tag in soup.find_all("span", class_="pron-spell-content css-7iphl0 evh0tcl1"):
            data["pronunciation"].append(tag.text.replace("[","").replace("]","").strip())
        for tag in soup.find_all("span", class_="one-click-content css-nnyc96 e1q3nk1v1"):
            data["definition"].append(tag.text.strip())

    if __name__ == "__main__":
        for s in sentence:
            getTimeToData(s)
        print("%s seconds" % (time.time() - start_time))

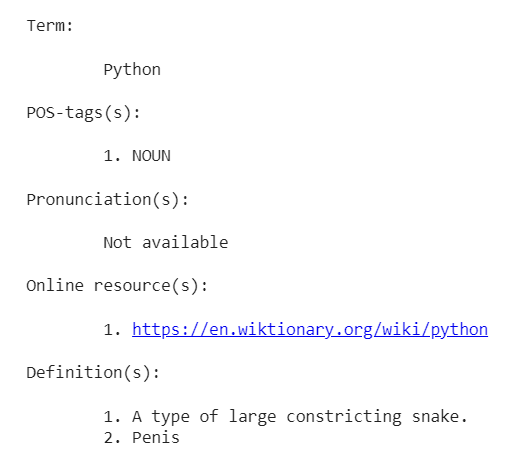

Wait, so, we had to sit in front of our program for almost 15 seconds while it was busy retrieving the content of a few html elements? Surely, there must be a more efficient way for us to collect all that information!

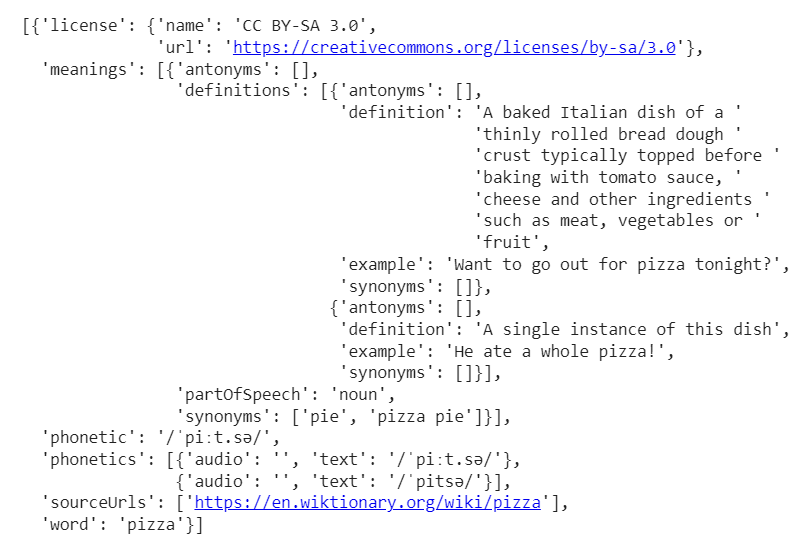
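Since we are going to repeat this measurement for each API, the timing logic can be pulled out into a small helper. This is a minimal sketch under my own assumptions: time_calls and fake_fetch are hypothetical names, and fake_fetch only sleeps for a moment to stand in for a real network request.

```python
import time

def time_calls(fetch, terms):
    """Calls fetch(term) for each term and returns (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = [fetch(term) for term in terms]
    elapsed = time.perf_counter() - start
    return results, elapsed

def fake_fetch(term):
    # Stand-in for a network call: sleeps briefly instead of sending a request
    time.sleep(0.01)
    return {"term": term}

results, elapsed = time_calls(fake_fetch, ["so", "we", "beat"])
print(f"{len(results)} terms in {elapsed:.4f} seconds")
```

Starting the clock right before the calls, rather than when the module is loaded, keeps import time and setup out of the measurement, and time.perf_counter() is the clock that the standard library recommends for measuring short durations.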

Free Dictionary API

The first API that we’ll be exploring today is totally free, though you can support its creator by donating some money here.

- Pros: Free, Relatively fast

- Cons: Some results are... odd, English language only

To start using it, we simply need to import the requests library and append whichever term that we are looking for to the https://api.dictionaryapi.dev/api/v2/entries/en/ url:

    import requests

    def getFreeDictAPI(term):
        # The API returns JSON, which requests can decode for us
        terms = f"https://api.dictionaryapi.dev/api/v2/entries/en/{term}"
        response_terms = requests.get(terms).json()
        return response_terms

    word = getFreeDictAPI("hot-dog")
    print(word)

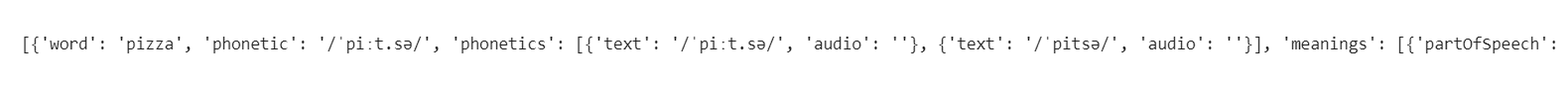

Ok, there seems to be a lot of nested data in there, but we can’t really see anything as we are just printing all that information on a single line. Luckily for us, Python’s standard library ships with a great module named pprint that makes any deeply nested data structure much easier to read:

    import pprint

    pprint.pprint(word)

That’s arguably much better. As you can see, the Free Dictionary API has provided us with a lot more data than the Dictionary.com website did. Amongst many other things, we now get a link to the term’s source page (the sourceUrls field).
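Before formatting anything, it can help to pull a few fields out of the nested structure with plain comprehensions. The sample below is hand-written and its values are made up; it only imitates the field names that this article relies on (word, phonetics, meanings, partOfSpeech, definitions, sourceUrls), so treat the exact shape as an assumption rather than a specification of the API.

```python
# Hand-written sample that imitates the shape of a response; the values are made up
sample_response = [
    {
        "word": "pizza",
        "phonetics": [{"text": "/PEET-suh/"}, {"audio": ""}],
        "meanings": [
            {
                "partOfSpeech": "noun",
                "definitions": [
                    {"definition": "A baked dish made of flattened dough with toppings."},
                    {"definition": "A single serving of that dish."},
                ],
            }
        ],
        "sourceUrls": ["https://example.org/pizza"],
    }
]

entry = sample_response[0]

pos_tags = [meaning["partOfSpeech"] for meaning in entry["meanings"]]
definitions = [
    item["definition"]
    for meaning in entry["meanings"]
    for item in meaning["definitions"]
]
# .get() skips phonetics entries that have no "text" key instead of raising KeyError
pronunciations = [p["text"] for p in entry["phonetics"] if p.get("text")]

print(pos_tags)
print(len(definitions))
print(pronunciations)
```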

If we want to format the results so that they match the look and feel of our scraping function, all we have to do is write the following code:

import requests

import pprint

class WordMeaning:

def getFreeDictAPI(self,term):

terms = f"https://api.dictionaryapi.dev/api/v2/entries/en/{term}"

response_terms = requests.get(terms).json()

return response_terms

def getDefinition(self,term):

words = self.getFreeDictAPI(term)

for word in words[:1]:

a,b,c,d = 1,1,1,1

print(f"Term:\n\n\t{word['word'].capitalize()}")

print("\nPOS-tags(s):\n")

for w in word['meanings']:

print(f"\t{a}. {w['partOfSpeech'].upper()}")

a+=1

print("\nPronounciation(s):\n")

try:

for w in word["phonetics"]:

print(f"\t{b}. {w['text']}")

b+=1

except:

print("\tNot available")

print("\nOnline resource(s):\n")

for w in word["sourceUrls"]:

print(f"\t{c}. {w}")

c+=1

print("\nDefinition(s):\n")

for w in word["meanings"]:

for t in w["definitions"]:

print(f"\t{d}. {t['definition']}")

d+=1

if __name__ == "__main__":

meaning = WordMeaning()

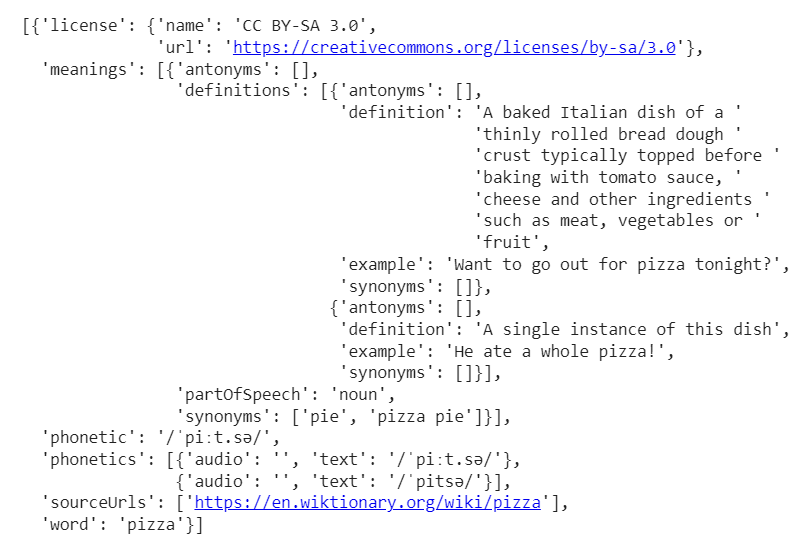

meaning.getDefinition("python")

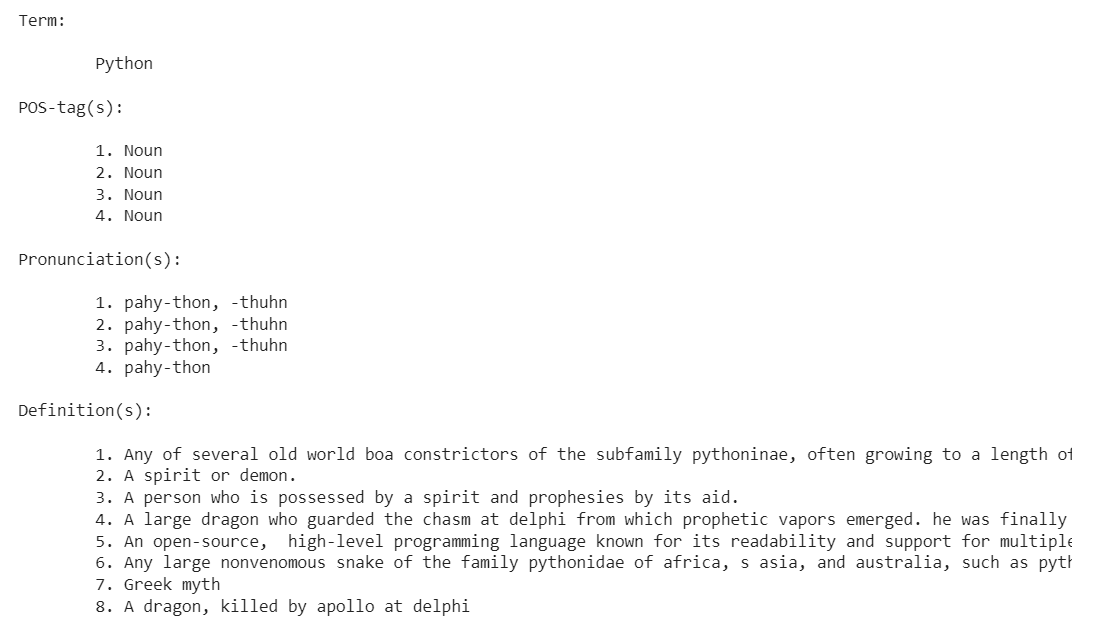

By the way, see that last definition? Now this is why, dear readers, I mentioned earlier that this API could sometimes return “odd” results.

Since our getDefinition() function is a perfect illustration of something we should avoid in Python, namely multiple nested loops, we will leave it out of the benchmark and simply measure the time it takes the Free Dictionary API to retrieve and store the data:

    import time
    import requests

    sentence = ["So","we","beat","on","boats","against","the","current","borne","back","ceaselessly","into","the","past"]

    data = []

    start_time = time.time()

    def getTimeToData(term):
        # Fetch the raw JSON and store it, without any formatting
        terms = f"https://api.dictionaryapi.dev/api/v2/entries/en/{term}"
        response_terms = requests.get(terms).json()
        data.append(response_terms)

    if __name__ == "__main__":
        for s in sentence:
            getTimeToData(s)
        print("%s seconds" % (time.time() - start_time))

The Oxford Dictionary API

I won’t even try to pretend otherwise, the next API is my favourite of the three that we are reviewing today. Though it is also the one whose free version is the most limited, it offers support for many languages other than English (see the full list here), which as a non-native English speaker is a big plus to me.

- Pros: Feature rich, Supports multiple languages

- Cons: Free version limited to 1,000 calls per day, Pricing for the pro version

This time we’ll need to create an account, which will in turn provide us with both an app id and an app key. Once we get these, we can fetch some data much like we did with the Free Dictionary API, except that the credentials are sent as request headers:

import requests

import json

import pprint

def getMeaning(lang,term):

app_id = "********"

app_key = "********"

language = lang

url = f"https://od-api.oxforddictionaries.com/api/v2/entries/{language}/{term.lower()}"

r = requests.get(url, headers = {"app_id" : app_id, "app_key" : app_key})

term = r.json()

return term

word = getMeaning("en","javascript")

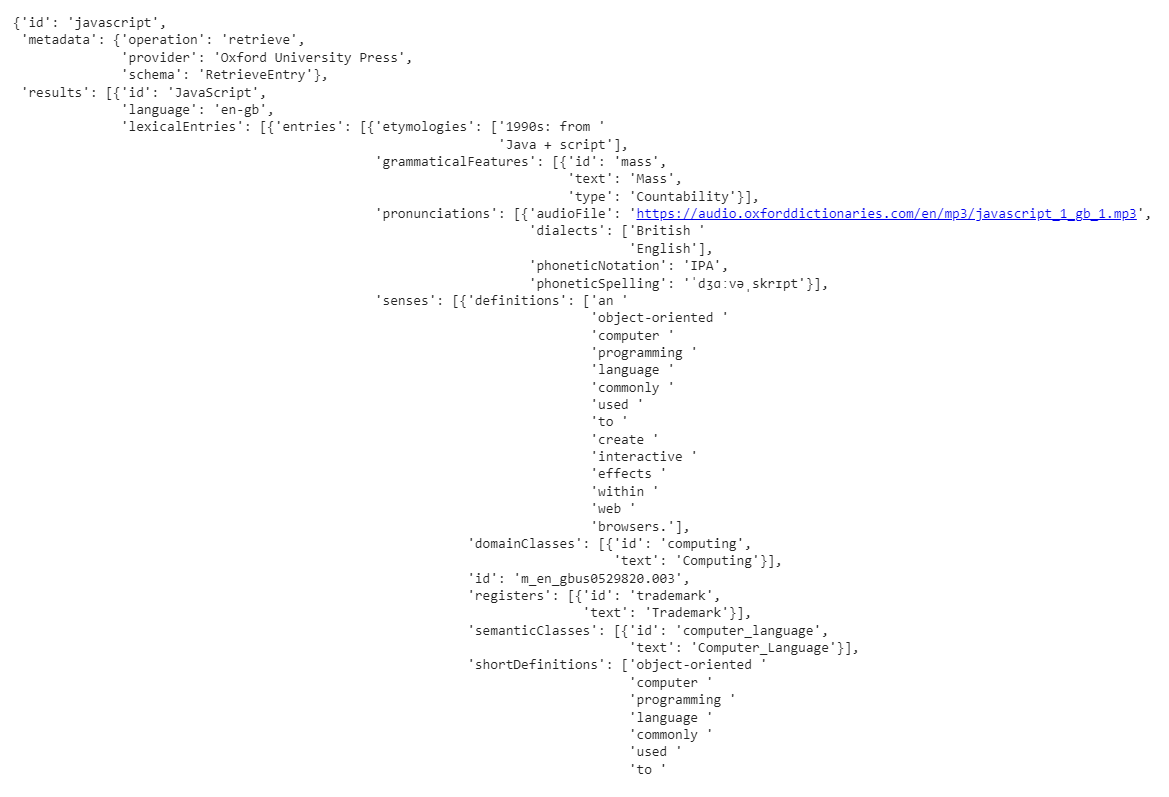

pprint.pprint(word)

Again, the returned JSON file shows an impressive range of features, such as audio files and contextual examples.

To switch from English to say, French, all we have to do is change the language variable to "fr":

import requests

import json

import pprint

def getMeaning(lang,term):

app_id = "********"

app_key = "********"

language = lang

url = f"https://od-api.oxforddictionaries.com/api/v2/entries/{language}/{term.lower()}"

r = requests.get(url, headers = {"app_id" : app_id, "app_key" : app_key})

term = r.json()

return term

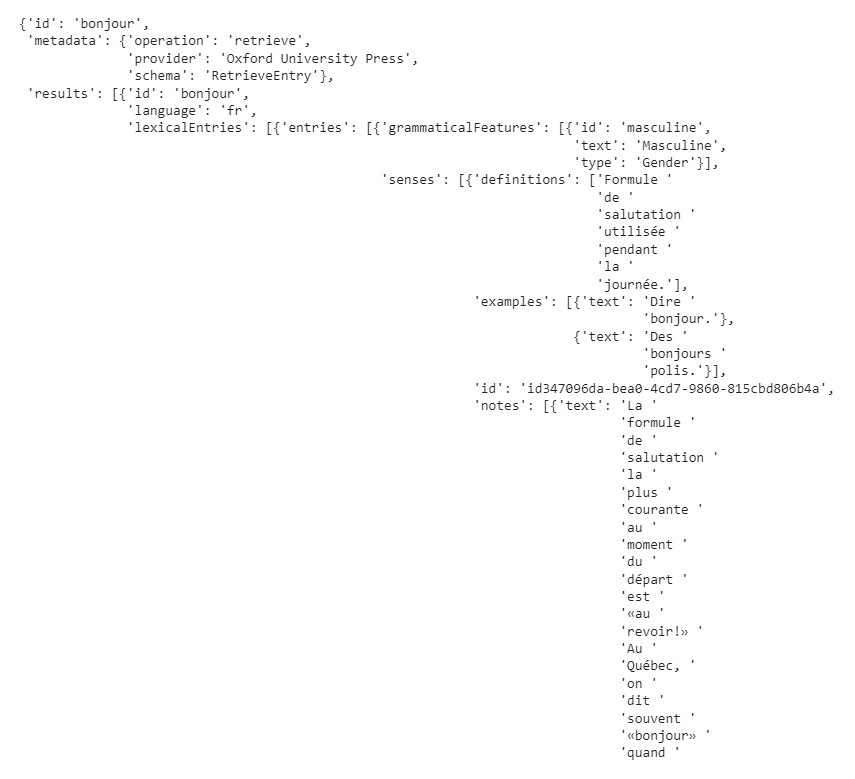

word = getMeaning("fr","bonjour")

pprint.pprint(word)
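Both snippets hard-code the credentials, which is fine for a quick test but easy to leak once the script is shared. A common alternative is to read them from environment variables. The sketch below is only a suggestion: the function oxford_headers and the variable names OXFORD_APP_ID and OXFORD_APP_KEY are hypothetical, and the demo values exist purely so that the example runs as-is.

```python
import os

def oxford_headers():
    # OXFORD_APP_ID and OXFORD_APP_KEY are hypothetical variable names
    app_id = os.environ.get("OXFORD_APP_ID")
    app_key = os.environ.get("OXFORD_APP_KEY")
    if not app_id or not app_key:
        raise RuntimeError("Set OXFORD_APP_ID and OXFORD_APP_KEY first")
    return {"app_id": app_id, "app_key": app_key}

# Demo values only, so that the sketch runs without a real account
os.environ.setdefault("OXFORD_APP_ID", "demo-id")
os.environ.setdefault("OXFORD_APP_KEY", "demo-key")
print(sorted(oxford_headers()))
```

The returned dictionary can then be passed straight to the headers argument of requests.get().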

Anyway, let’s tweak our previous code so that it prints the information that we are looking for in a format that should now look familiar:

import requests

import json

import pprint

class WordMeaning:

def __init__(self):

self.app_id = "********"

self.app_key = "********"

def getMeaning(self,lang,term):

language = lang

url = f"https://od-api.oxforddictionaries.com/api/v2/entries/{language}/{term.lower()}"

r = requests.get(url, headers = {"app_id" : self.app_id, "app_key" : self.app_key})

term = r.json()

return term

def getDefinition(self,lang,term):

words = self.getMeaning(lang,term)

a,b,c = 1,1,1

for keys,word in words.items():

if keys == "results":

print(f'Term:\n\n\t{word[0]["id"].capitalize()}')

for w in word:

print("\nPOS-tag(s):\n")

for content in w["lexicalEntries"]:

print(f'\t{a}. {content["lexicalCategory"]["text"]}')

a+=1

print("\nPronunciation(s):\n")

for w in word[0]["lexicalEntries"][0]["entries"][0]["pronunciations"]:

print(f'\t{b}. {w["phoneticSpelling"]}')

print(f'\t{b+1}. {w["audioFile"]}')

for w in word:

print("\nDefinition(s):\n")

for content in w["lexicalEntries"]:

for cont in content["entries"][0]["senses"]:

print(f'\t{c}. {"".join(cont["definitions"]).capitalize()}')

c+=1

if __name__ == "__main__":

meaning = WordMeaning()

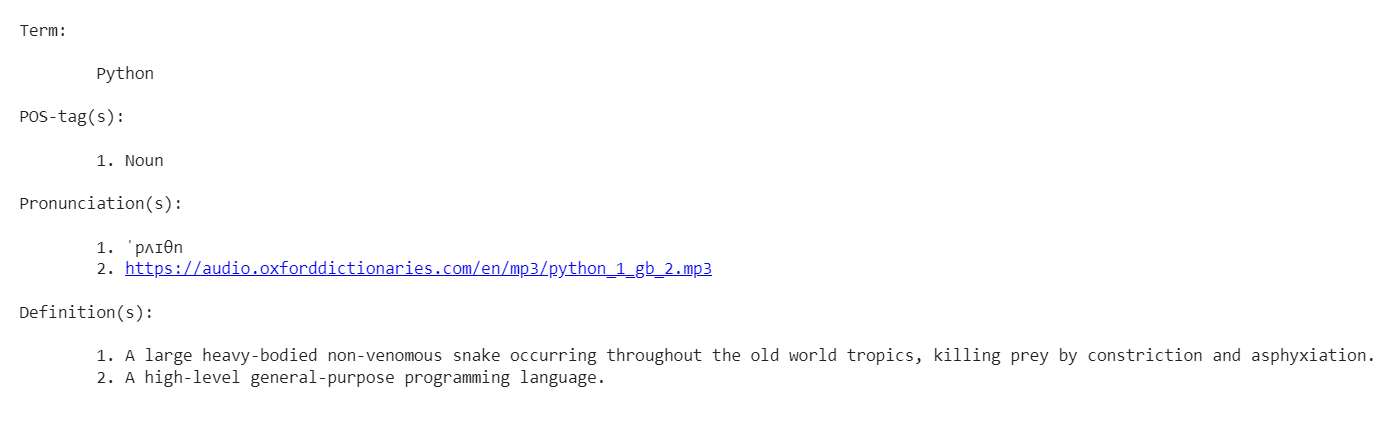

meaning.getDefinition("en","python")

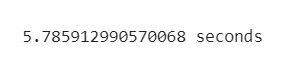
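Chains of indexes such as word[0]["lexicalEntries"][0]["entries"][0]["pronunciations"] fail as soon as one level is missing. One way to make them more forgiving is a small lookup helper. This is a sketch under my own assumptions: dig is a hypothetical function, and the sample is made up and only imitates the nesting used in getDefinition().

```python
def dig(data, *path, default=None):
    """Follows a path of dict keys and list indexes, returning default on a miss."""
    current = data
    for step in path:
        try:
            current = current[step]
        except (KeyError, IndexError, TypeError):
            return default
    return current

# Made-up sample that imitates the nesting used in getDefinition()
sample = {
    "results": [
        {
            "id": "python",
            "lexicalEntries": [
                {
                    "lexicalCategory": {"text": "Noun"},
                    "entries": [{"senses": [{"definitions": ["a large snake"]}]}],
                }
            ],
        }
    ]
}

first_entry = ("results", 0, "lexicalEntries", 0, "entries", 0)
print(dig(sample, *first_entry, "senses", 0, "definitions", 0))
print(dig(sample, *first_entry, "pronunciations", default=[]))
```

With a default of [], a loop over missing pronunciations simply does nothing instead of raising a KeyError.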

Again, to measure the performance of the API, we won’t be printing anything and will simply limit ourselves to retrieving and storing the data:

    import time
    import requests

    sentence = ["So","we","beat","on","boats","against","the","current","borne","back","ceaselessly","into","the","past"]

    data = []

    start_time = time.time()

    def getTimeToData(term):
        app_id = "********"
        app_key = "********"
        language = "en"
        url = f"https://od-api.oxforddictionaries.com/api/v2/entries/{language}/{term.lower()}"
        r = requests.get(url, headers = {"app_id" : app_id, "app_key" : app_key})
        term = r.json()
        data.append(term)

    if __name__ == "__main__":
        for s in sentence:
            getTimeToData(s)
        print("%s seconds" % (time.time() - start_time))
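With a free tier capped at 1,000 calls per day, repeated terms are worth a thought: our sentence contains “the” twice, so the loop sends two identical requests. A minimal sketch of one way around this uses functools.lru_cache. The fetch function here is a hypothetical stand-in that records calls instead of contacting the API.

```python
from functools import lru_cache

calls = []

def fetch(term):
    # Stand-in for the real request; records each term that would hit the API
    calls.append(term)
    return {"term": term}

@lru_cache(maxsize=None)
def cached_fetch(term):
    return fetch(term)

sentence = ["So","we","beat","on","boats","against","the","current","borne","back","ceaselessly","into","the","past"]
data = [cached_fetch(s.lower()) for s in sentence]

print(len(data), "results from", len(calls), "requests")
```

Note that the cache hands back the very same object for a repeated term, so the cached results should be treated as read-only.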

The Merriam-Webster Dictionary API

The third and last dictionary API that we will be reviewing today is Merriam-Webster’s. Though slightly less feature-rich than the Oxford Dictionary API, it does allow its users to generate keys that can be used across a full suite of products:

- Merriam-Webster’s Collegiate Dictionary with Audio

- Merriam-Webster’s Collegiate Thesaurus

- Merriam-Webster’s Spanish-English Dictionary with Audio

- Merriam-Webster’s Medical Dictionary with Audio

- Merriam-Webster’s Learner’s Dictionary with Audio

- Merriam-Webster’s Elementary Dictionary with Audio (Grades 3-5)

- Merriam-Webster’s Intermediate Dictionary with Audio (Grades 6-8)

- Merriam-Webster’s Intermediate Thesaurus (Grades 6-8)

- Merriam-Webster’s School Dictionary with Audio (Grades 9-11)

Besides, it probably has the best documentation of all the solutions reviewed in this article, with clear explanations and examples provided for each of its features.

- Pros: Feature rich, Provides a lot of different dictionary APIs, Great documentation

- Cons: Free version limited to 1,000 calls per day, Pricing for the pro version

At this point we probably don’t need to go through what each of the following lines does, as the code very much resembles what we already wrote earlier:

import requests

import json

import pprint

def getMeaning(term):

app_key = "********"

url = f"https://www.dictionaryapi.com/api/v3/references/collegiate/json/{term}?key={app_key}"

r = requests.get(url)

term = r.json()

return term

word = getMeaning("python")

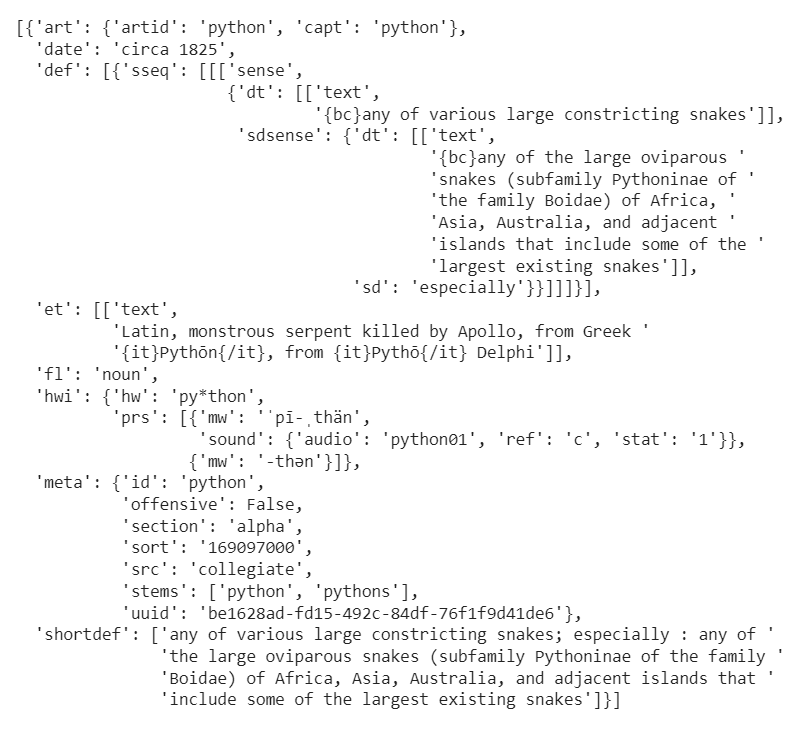

pprint.pprint(word)

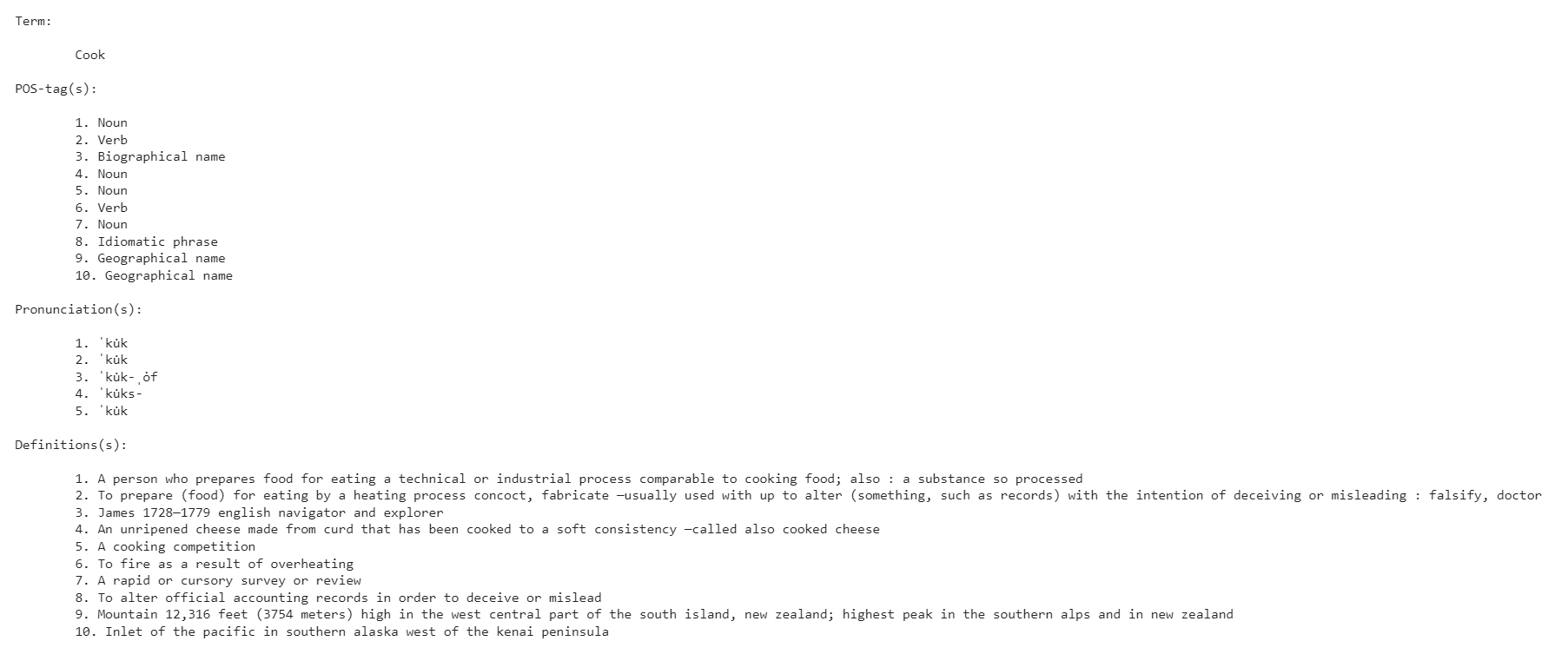

And for the last time today, we’ll be looping through the extracted data structure to try and print something that matches our previous attempts at coming up with a templated output format:

    import requests
    import pprint

    class WordMeaning:

        def __init__(self):
            self.app_key = "********"

        def getMeaning(self,term):
            url = f"https://www.dictionaryapi.com/api/v3/references/collegiate/json/{term}?key={self.app_key}"
            r = requests.get(url)
            term = r.json()
            return term

        def getDefinition(self,term):
            # The response is a list of entries, one per headword
            words = self.getMeaning(term)
            a,b,c = 1,1,1
            print(f'Term:\n\n\t{term.capitalize()}')
            print("\nPOS-tag(s):\n")
            for word in words:
                print(f'\t{a}. {word["fl"].capitalize()}')
                a+=1
            print("\nPronunciation(s):\n")
            for word in words:
                for w,v in word["hwi"].items():
                    if w == "prs":
                        print(f'\t{b}. {v[0]["mw"]}')
                        b+=1
            print("\nDefinition(s):\n")
            for word in words:
                print(f'\t{c}. {" ".join(word["shortdef"]).capitalize()}')
                c+=1

    if __name__ == "__main__":
        meaning = WordMeaning()
        meaning.getDefinition("python")

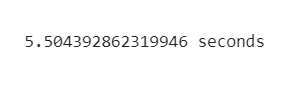
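The getDefinition() method above assumes that every item in the response is a dictionary with an "fl" key. As far as I can tell, that isn’t guaranteed: when a term has no exact match the API appears to return a list of suggested spellings as plain strings, and some entries may lack a part of speech. Please treat both points as assumptions to check against the official documentation. The split_response helper and the samples below are hypothetical and made up for this sketch.

```python
def split_response(response):
    """Separates dictionary entries (dicts) from anything else, e.g. suggestion strings."""
    entries = [item for item in response if isinstance(item, dict)]
    others = [item for item in response if not isinstance(item, dict)]
    return entries, others

# Made-up samples; the field names match the ones used in getDefinition()
found = [
    {"fl": "noun", "hwi": {"hw": "py*thon"}, "shortdef": ["a large snake"]},
    {"hwi": {"hw": "Py*thon"}, "shortdef": []},
]
not_found = ["pylon", "pithy"]

entries, _ = split_response(found)
# .get() supplies a placeholder when an entry has no "fl" key
print([entry.get("fl", "n/a") for entry in entries])

entries, suggestions = split_response(not_found)
print(entries, suggestions)
```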

As expected, the performance of the Merriam-Webster Dictionary API is very much in line with that of its competitors:

    import time
    import requests

    sentence = ["So","we","beat","on","boats","against","the","current","borne","back","ceaselessly","into","the","past"]

    data = []

    start_time = time.time()

    def getTimeToData(term):
        app_key = "********"
        # app_key is a local variable here, not an attribute
        url = f"https://www.dictionaryapi.com/api/v3/references/collegiate/json/{term}?key={app_key}"
        r = requests.get(url)
        term = r.json()
        data.append(term)

    if __name__ == "__main__":
        for s in sentence:
            getTimeToData(s)
        print("%s seconds" % (time.time() - start_time))

Avoid like the plague: RapidAPI’s WordsAPI

On the one hand, from what I have read here and there the WordsAPI API seems to be very popular amongst the NLP community. But at the same time not only do I fail to see its real advantages over using products such as the Free Dictionary API, but I also have a serious problem with RapidAPI’s business model.

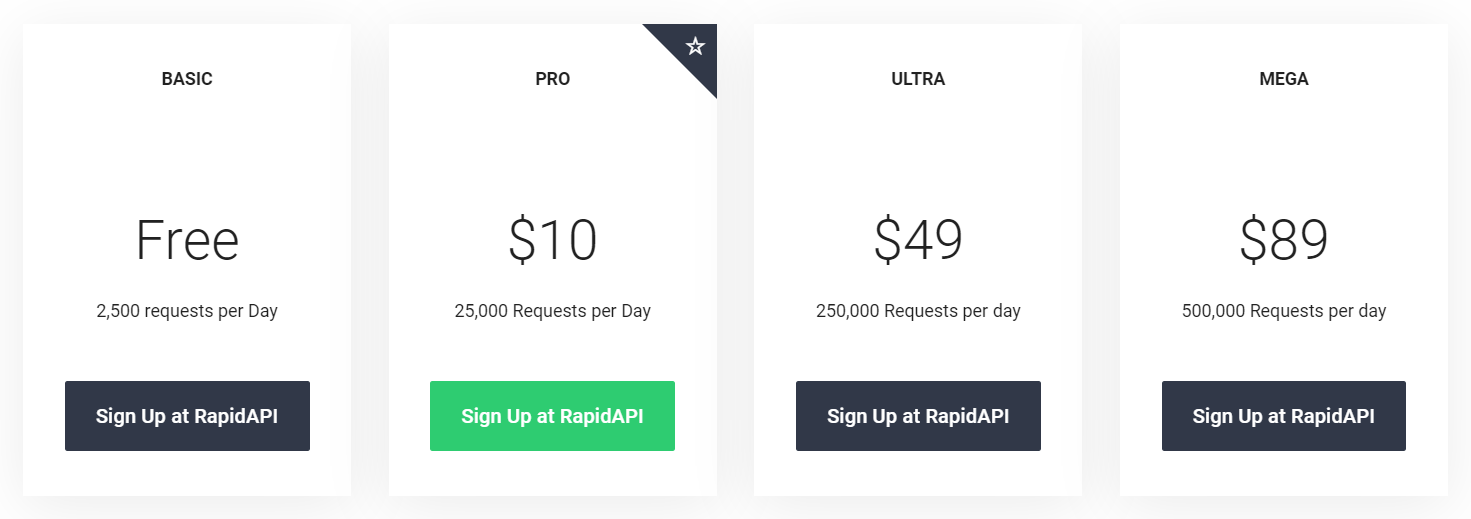

Now this is the part where we might disagree a little, as I’m really not a big fan of RapidAPI’s freemium system.

But wait, why do I keep mentioning RapidAPI? Well you see, though DPVentures (the developers behind WordsAPI) and RapidAPI are totally unrelated, the only way to use the former is to create a “free” account with the latter.

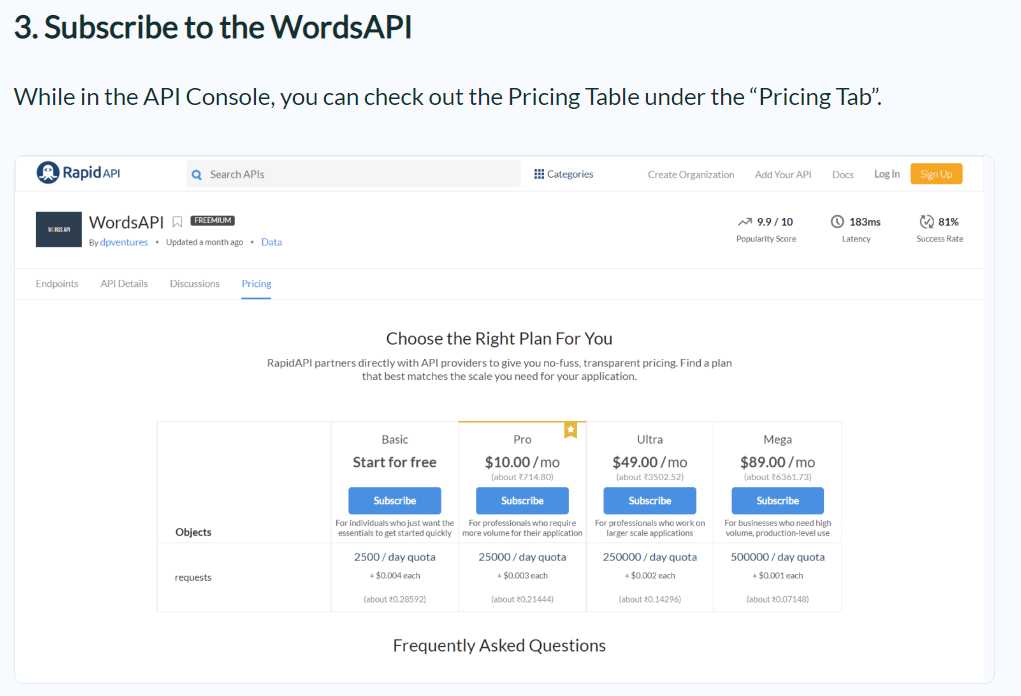

If this sounds confusing, let’s take a look at WordsAPI’s website and check out their pricing section:

Two things are important to note here:

- The API is advertised as being free

- We get confirmation that the only way to access WordsAPI’s data is to indeed create a RapidAPI account

That’s fine, let’s head over to RapidAPI’s website and sign up for a “free” account. Once we get our set of keys, we can start extracting some data:

    import requests

    def getMeaning(term):
        # RapidAPI expects its own pair of authentication headers
        headers = {
            "X-RapidAPI-Key": "******",
            "X-RapidAPI-Host": "******"
        }
        url = f"https://wordsapiv1.p.rapidapi.com/words/{term.lower()}"
        r = requests.get(url, headers = headers)
        term = r.json()
        return term

    getMeaning("python")

Oh wait, what’s this strange error message? Mmmh, why don’t we just go back to RapidAPI’s website, which conveniently provides a tutorial on how to get started with their products:

Ok, so it seems that we have to hit that “subscribe” button. No problem:

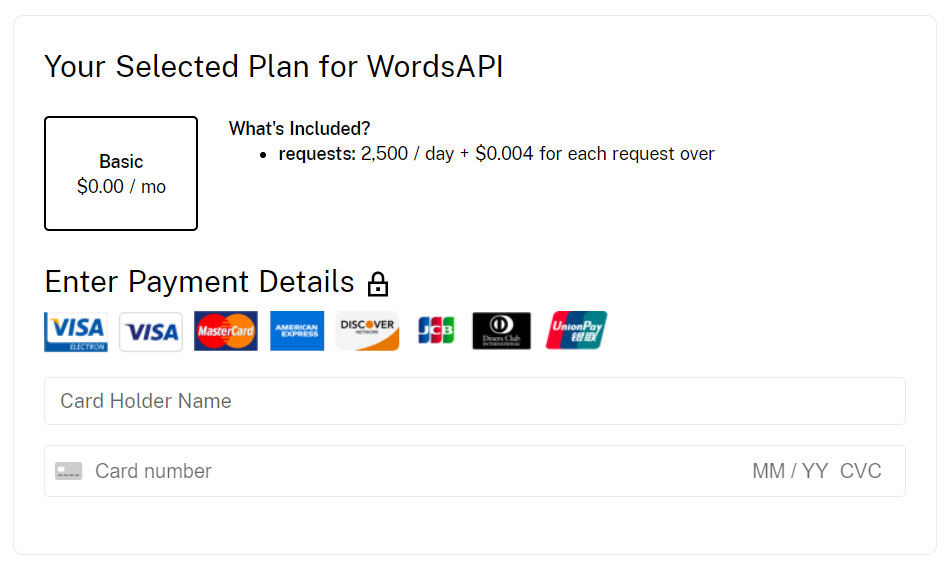

Now how about that? We are now required to enter our card details in order to use their “free” service. Don’t get me wrong, I very much understand that the talented people behind RapidAPI deserve to get paid for their hard work. I myself have a few premium accounts here and there, and I am for instance more than happy to be supporting the development of Playcode.

But here’s the subtlety, in case you missed it:

In other words, once we hit the 2.5k daily threshold, we will start getting charged for each extra request we make! And immediately, this raises quite a few questions:

- Do “free” account owners get warned when their daily amount of calls gets anywhere near the 2.5k threshold?

- Is there a limit as to how much money can be charged on a daily basis?

- Can developers who use the “free” version set up a limit on the daily volume of requests that can be made?

I personally find this business model to be plainly wrong, and I will never use any of RapidAPI’s “free” or paid services. In comparison, all the other APIs that we tested throughout this article only required an email address, and will simply stop working once their daily maximum threshold is reached.
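Whatever the provider, nothing stops us from enforcing a daily cap on our own side before a request is ever sent. The sketch below is a bare-bones illustration under my own assumptions: DailyQuota is a hypothetical class, the counter lives in memory only (it resets whenever the script restarts), and it is no substitute for a spending limit enforced by the provider.

```python
import datetime

class DailyQuota:
    """Refuses further calls once a daily limit has been reached."""

    def __init__(self, limit, today=datetime.date.today):
        self.limit = limit
        self.today = today      # injectable so the behaviour can be tested
        self.day = today()
        self.used = 0

    def allow(self):
        current = self.today()
        if current != self.day:
            # New day: reset the counter
            self.day, self.used = current, 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True

quota = DailyQuota(limit=2)
print([quota.allow() for _ in range(3)])
```

In practice, each request would be wrapped in a check such as `if quota.allow():`, with the limit set safely below the threshold at which charges begin.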

Conclusion and next steps

You’ll find below a performance comparison between the different approaches that we took throughout this article. And please feel free to reach out to me if you decide to incorporate any of the aforementioned APIs into your personal projects!

| API / Technique | Time for the 14-term sentence (seconds) | Free requests per day |
|---|---|---|
| Scraping | 14.2416 | N/A |
| Free Dictionary | 1.0339 | N/A |
| Oxford Dictionary | 5.7859 | 1,000 |
| Merriam-Webster | 5.5043 | 1,000 |
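To put these figures in perspective, here is a short sketch that converts them into an average time per term and a speed-up relative to scraping. The numbers are copied from the table; keep in mind that they come from a single run on one machine and one connection, so they are indicative rather than a proper benchmark.

```python
# Timings copied from the table above (seconds for the 14-term sentence)
timings = {
    "Scraping": 14.2416,
    "Free Dictionary": 1.0339,
    "Oxford Dictionary": 5.7859,
    "Merriam-Webster": 5.5043,
}
TERMS = 14

for name, seconds in timings.items():
    per_term = seconds / TERMS
    speedup = timings["Scraping"] / seconds
    print(f"{name}: {per_term:.3f} s per term, {speedup:.1f}x vs scraping")
```

In other words, scraping took roughly 14 times longer than the Free Dictionary API, and about 2.5 times longer than the Oxford and Merriam-Webster APIs.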